
(Views expressed are personal; publicly available data has been used for testing.)

In Part 1 of our exploration into Google’s Agent Development Kit (ADK), we introduced the fundamentals of AI Agents — intelligent systems designed to perceive, reason, and act — and saw how ADK simplifies building them. We constructed our first SequentialAgent, a workflow that tackled the crucial e-commerce task of automated catalog image quality checks by executing steps like alignment analysis and attribute matching in a predefined order. This demonstrated how agents can automate structured, multi-step processes effectively.

While sequential execution is powerful for such workflows, the true potential of AI agents unfolds when they can break free from relying solely on their internal knowledge base, which is inherently limited by its training data cutoff. Imagine agents that can actively seek out current information, verify facts against real-world data, or interact with other APIs to perform actions.

This is made possible by equipping agents with Tools within the ADK framework. Tools are essentially external capabilities — like accessing databases, calling APIs, or running searches — that an agent can learn to invoke intelligently to achieve its goals.
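To make the idea of a tool concrete, here is a deliberately simplified, framework-free sketch of what tool invocation boils down to: the model emits a structured request that names a tool and its arguments, and the host program looks up and runs the matching function. The `fake_search` function, the `TOOLS` registry, and the request format are hypothetical stand-ins, not ADK's API. ADK and the Gemini API manage this exchange for you, and the built-in Google Search tool runs on Google's side as part of the model request rather than through local code like this.

```python
from typing import Callable

def fake_search(query: str) -> str:
    """Hypothetical stand-in for a real search backend."""
    return f"Top result for '{query}'"

# Registry mapping tool names (as the model refers to them) to local callables.
TOOLS: dict[str, Callable[..., str]] = {"search": fake_search}

def dispatch(tool_call: dict) -> str:
    """Run the tool named in a model-emitted request like {"name": ..., "args": {...}}."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name](**tool_call.get("args", {}))

# Pretend the model decided it needs fresh information and asked for a search.
print(dispatch({"name": "search", "args": {"query": "Gorilla Glass Victus 3"}}))
# Top result for 'Gorilla Glass Victus 3'
```

In a real agent, the string returned by the tool is sent back to the model, which then decides whether to call another tool or answer.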

One of the most impactful built-in capabilities provided by ADK is the Google Search tool. Giving an agent access to Google Search allows it to:

- Fetch up-to-the-minute information (news, specs, events).

- Cross-reference claims made in text against online sources.

- Gather diverse perspectives (like user reviews).

- Fundamentally ground its responses and analysis in external reality, making its outputs more reliable and accurate.

In this post, we’ll harness this tool-using capability to build a sophisticated agent pipeline focused on a critical business need: automated fact-checking. We’ll demonstrate how an ADK agent, armed with Google Search, can analyze marketing content and rigorously verify its factual claims, ensuring accuracy and building trust.

In today’s digital landscape, information (and misinformation) spreads like wildfire. For businesses, particularly in marketing and e-commerce, the content they publish is a direct reflection of their brand. Claims made about products, services, or achievements need to be accurate. Why? Because trust is the ultimate currency. Inaccurate or misleading claims can erode customer confidence, damage brand reputation, and even lead to legal repercussions.

However, manually verifying every statistic, award mention, or technical specification in marketing copy across multiple campaigns and platforms is a monumental task. It’s slow, costly, and prone to human error. How can we scale this crucial process while maintaining rigor? This is where our search-enabled AI agent comes in.

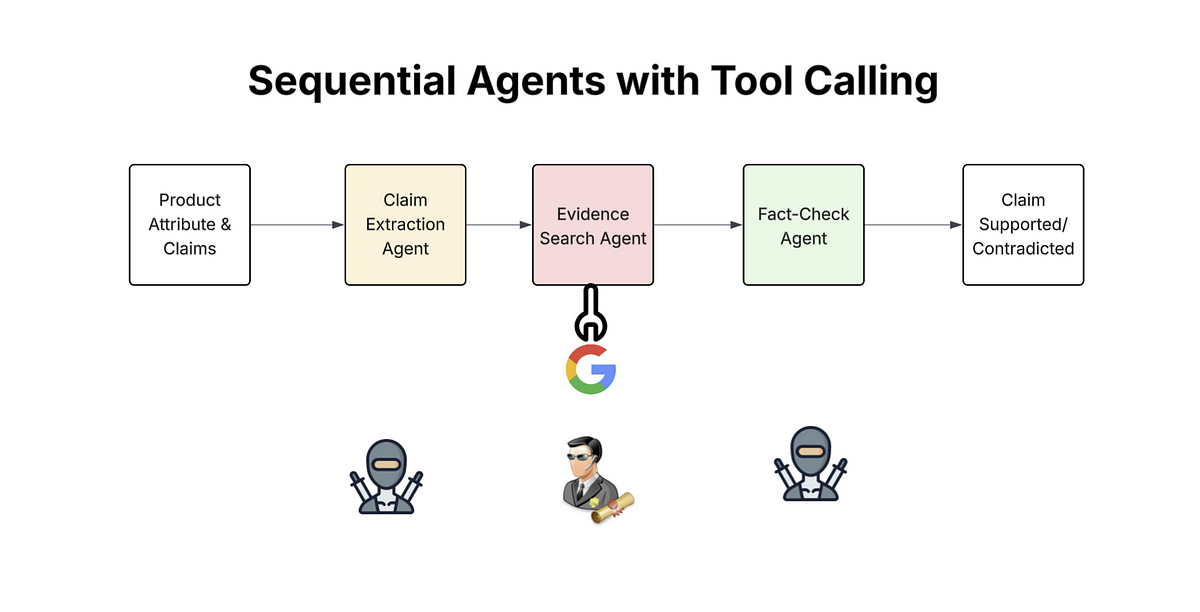

We can design an efficient solution using ADK’s SequentialAgent, which orchestrates a series of specialized LlmAgent instances:

- Claim Extraction Agent: This agent acts like a meticulous editor. It reads the input marketing text and uses its Natural Language Understanding (NLU) capabilities to identify and isolate specific, verifiable factual claims. Its prompt instructs it to ignore subjective puffery ("It's amazing!") and focus on concrete statements (e.g., percentages, awards, technical specs). It outputs these claims in a structured format (a JSON object holding a list of claims).

- Evidence Search Agent: This agent is the researcher. It takes the list of claims from the previous step. For each claim, it formulates a targeted query and uses the integrated Google Search tool to find relevant information online from reliable sources. It then summarizes the evidence found (or the lack of it) for each specific claim.

- Fact-Check Agent: This agent is the judge. It receives the original claims and the evidence summaries gathered by the search agent. It carefully compares the evidence against each claim and makes a determination:

  - Supported: The search evidence clearly backs up the claim.

  - Contradicted: The search evidence directly refutes the claim.

  - Unsubstantiated: The search evidence is insufficient, or no evidence could be found, to verify the claim.

  The Fact-Check Agent provides reasoning for 'Contradicted' or 'Unsubstantiated' findings and outputs the final verdicts as a structured JSON report.

This multi-agent approach breaks down the complex task of fact-checking into specialized, manageable steps, leveraging both the LLM’s analytical power and the vast, up-to-date knowledge accessible via Google Search.
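Before looking at the ADK code, the data flow between the three agents can be sketched in plain Python. In this sketch the LLM calls are replaced by trivial stand-in functions (`extract_claims`, `search_evidence`, and `judge` are hypothetical names, the extraction rule is a crude "keep clauses containing a number" heuristic, and the evidence comes from a hard-coded dictionary rather than Google Search). What it is meant to show is the contract between steps: claims list, then a claim-to-evidence map, then a list of verdicts.

```python
import re

# Hypothetical offline evidence table standing in for live Google Search results.
FAKE_EVIDENCE = {
    "won the 'Eco Innovator 2024' award": "Official press release confirms the award.",
}

def extract_claims(text: str) -> dict:
    """Toy stand-in for the Claim Extraction Agent: keep clauses that contain a number."""
    clauses = []
    for sentence in re.split(r"[.!?]", text):
        clauses.extend(part.strip() for part in sentence.split(" and "))
    return {"claims": [c for c in clauses if re.search(r"\d", c)]}

def search_evidence(payload: dict) -> dict:
    """Toy stand-in for the Evidence Search Agent: map each claim to an evidence summary."""
    return {c: FAKE_EVIDENCE.get(c, "No clear evidence found.") for c in payload["claims"]}

def judge(payload: dict, evidence: dict) -> dict:
    """Toy stand-in for the Fact-Check Agent: derive a verdict from each summary."""
    results = []
    for claim in payload["claims"]:
        summary = evidence.get(claim, "")
        if "confirms" in summary:
            results.append({"claim": claim, "status": "Supported", "reasoning": ""})
        elif "refutes" in summary:
            results.append({"claim": claim, "status": "Contradicted", "reasoning": summary})
        else:
            results.append({"claim": claim, "status": "Unsubstantiated",
                            "reasoning": "Evidence summary did not confirm the claim."})
    return {"fact_check_results": results}

text = "Our new solar panel generates 25% more energy and won the 'Eco Innovator 2024' award. It feels great!"
claims = extract_claims(text)
report = judge(claims, search_evidence(claims))
for item in report["fact_check_results"]:
    print(item["status"], "-", item["claim"])
# Unsubstantiated - Our new solar panel generates 25% more energy
# Supported - won the 'Eco Innovator 2024' award
```

Keeping each step's input and output as plain JSON-shaped dictionaries is what lets the real agents be swapped in one at a time.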

Building the Fact-Checker with ADK: Code Walkthrough

```python
import asyncio
import time  # To generate unique session IDs
import json  # To parse the JSON returned by the agents
import traceback  # For detailed error printing

# Make sure ADK components are installed and importable
try:
    # Core ADK components
    from google.adk.agents.sequential_agent import SequentialAgent
    from google.adk.agents.llm_agent import LlmAgent
    from google.adk.sessions import InMemorySessionService
    from google.adk.runners import Runner

    # Import the built-in Google Search tool
    from google.adk.tools import google_search

    # ADK builds on the google-genai SDK; Content/Part message types come from there
    from google.genai import types

    print("✅ ADK, GenAI types, and Search Tool components imported successfully.")

    # --- CONFIGURE YOUR API KEY HERE ---
    # ADK's Gemini integration reads the API key from the GOOGLE_API_KEY
    # environment variable. Example:
    # import os
    # os.environ["GOOGLE_API_KEY"] = "YOUR_API_KEY"
    # Check the ADK documentation for any setup specific to the Google Search tool.
    # --- OR (if running in Colab) ---
    # import os
    # from google.colab import userdata
    # os.environ["GOOGLE_API_KEY"] = userdata.get("GOOGLE_API_KEY")
    # ---------------------------------

except ImportError as e:
    print(f"❌ Error importing components: {e}")
    print("Please ensure google-adk (which installs google-genai) is installed.")
    print("Verify the Google Search tool is correctly installed/available.")
    exit()
except Exception as setup_err:
    print(f"❌ An unexpected error occurred during initial imports: {setup_err}")
    exit()
```

```python
# --- Constants ---
# Select a Gemini model. Note: Tool calling effectiveness can vary by model.
# Ensure the chosen model version robustly supports tool use.
# Using flash for potential speed, but Pro might be better for complex reasoning. Test accordingly.
MODEL_NAME = "gemini-2.0-flash"
# MODEL_NAME = "gemini-2.5-pro"  # Alternative, potentially better reasoning/tool use.

# LlmAgent accepts a model name string (or an ADK BaseLlm instance), not a
# google.generativeai GenerativeModel object, so pass the name through directly.
model_config = MODEL_NAME
print(f"✅ Using model: {MODEL_NAME}")

# --- Shared Components ---
session_service = InMemorySessionService()  # Basic in-memory session storage
```

```python
# --- Helper Function for JSON Cleaning ---
# (Crucial for handling LLM outputs that need to be parsed)
def clean_and_parse_json(text_output: str) -> dict | None:
    """Cleans markdown fences and attempts to parse JSON."""
    if not text_output:
        return None
    cleaned_output_text = text_output.strip()

    # Simple cleaning targeting common markdown fences
    if cleaned_output_text.startswith("```json"):
        cleaned_output_text = cleaned_output_text[len("```json"):].strip()
    if cleaned_output_text.startswith("```"):
        cleaned_output_text = cleaned_output_text[len("```"):].strip()
    if cleaned_output_text.endswith("```"):
        cleaned_output_text = cleaned_output_text[:-len("```")].strip()

    # Attempt parsing
    try:
        return json.loads(cleaned_output_text)
    except json.JSONDecodeError:
        # More robust: try finding the first '{' and last '}'
        start_index = cleaned_output_text.find('{')
        end_index = cleaned_output_text.rfind('}')
        if start_index != -1 and end_index != -1 and end_index > start_index:
            potential_json = cleaned_output_text[start_index : end_index + 1]
            try:
                return json.loads(potential_json)
            except json.JSONDecodeError:
                print(f"\n(Warning: Output could not be parsed as JSON after extensive cleaning. Attempted: '{potential_json}')")
                return None
        else:
            print(f"\n(Warning: Output could not be parsed as JSON, invalid structure. Started with: '{cleaned_output_text[:100]}...')")
            return None
    except Exception as parse_err:  # Catch other potential errors
        print(f"\n(Warning: Unexpected error parsing JSON: {parse_err})")
        return None
```
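The helper above strips fences with prefix and suffix checks. An alternative, shown here as a hedged sketch rather than part of the original code, is to locate a fenced block anywhere in the reply with a regular expression and fall back to the raw text when no fence is present. `extract_fenced_json` and `FENCE_RE` are hypothetical names; unlike the brace-based fallback above, this version also recovers a top-level JSON array that follows some leading prose.

```python
import json
import re

# Matches ```json ... ``` or ``` ... ``` and captures the body (non-greedy, across lines).
FENCE_RE = re.compile(r"```(?:json)?\s*(.*?)\s*```", re.DOTALL)

def extract_fenced_json(text: str):
    """Return parsed JSON from a fenced block if present, else from the raw text; None on failure."""
    match = FENCE_RE.search(text)
    candidate = match.group(1) if match else text.strip()
    try:
        return json.loads(candidate)
    except json.JSONDecodeError:
        return None

print(extract_fenced_json('Here you go:\n```json\n{"claims": ["60-hour battery life"]}\n```'))
# {'claims': ['60-hour battery life']}
```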

```python
# --- Automated Fact-Checking Pipeline Definition ---
APP_NAME_FACTCHECK = "marketing_factcheck_app"

# Agent 1: Extract Claims (No tool needed)
extract_claims_agent = LlmAgent(
    name="ExtractClaimsAgent",
    model=model_config,  # Use the configured model
    instruction="""You are a Factual Claim Extractor AI.
Analyze the input marketing text. Identify and extract specific, verifiable factual claims (e.g., statistics, percentages, technical specifications, awards won, concrete results).
Ignore subjective statements, opinions, puffery ('amazing', 'best ever'), or general benefits unless tied to a specific metric.
Output ONLY a valid JSON object with a single key "claims" containing a list of the extracted claim strings. If no verifiable claims are found, return {"claims": []}.
Example Input: "Our new solar panel generates 25% more energy and won the 'Eco Innovator 2024' award. It feels great!"
Example Output: {"claims": ["generates 25% more energy", "won the 'Eco Innovator 2024' award"]}
""",
    description="Extracts factual claims from marketing text.",
)
```
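Because the extractor is an LLM, it can paraphrase or even invent claims. One cheap guard, which is our own addition and not part of the original pipeline, is to check that each extracted claim string actually occurs in the source text (case-insensitively, ignoring whitespace differences) before spending search calls on it. `ungrounded_claims` and `normalize` are hypothetical helper names.

```python
def normalize(s: str) -> str:
    """Lowercase and collapse whitespace so line breaks don't cause false mismatches."""
    return " ".join(s.lower().split())

def ungrounded_claims(source_text: str, claims_payload: dict) -> list[str]:
    """Return extracted claims that do not appear verbatim in the source text."""
    haystack = normalize(source_text)
    return [c for c in claims_payload.get("claims", []) if normalize(c) not in haystack]

text = "Our new solar panel generates 25% more energy and won the 'Eco Innovator 2024' award. It feels great!"
print(ungrounded_claims(text, {"claims": ["generates 25% more energy", "won the 'Eco Innovator 2024' award"]}))  # []
print(ungrounded_claims(text, {"claims": ["cuts costs by 30%"]}))  # ['cuts costs by 30%']
```

A verbatim match is strict: if the extractor lightly rephrases a claim, this check flags it, so a hit is better treated as a prompt for review than as a hard error.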

```python
# Agent 2: Search for Evidence (Tool needed)
search_evidence_agent = LlmAgent(
    name="SearchEvidenceAgent",
    model=model_config,  # Use the configured model
    tools=[google_search],  # <<< Equipped with the built-in Google Search tool!
    instruction="""You are a Research Assistant AI for evidence gathering.
Input is a JSON object: {"claims": ["claim1", "claim2", ...]}.
For EACH claim in the list:
1. Formulate a targeted Google Search query to find evidence supporting or refuting it from reliable sources (official sites, news, reputable reviews).
2. Execute the search using the provided tool.
3. Briefly summarize the key findings (1-3 sentences) returned by the search specific to that claim. Note source type if possible (e.g., "Official site confirms...", "News article reports...", "No clear evidence found...").
Output ONLY a valid JSON object where keys are the original claims and values are the evidence summaries.
Example Input: {"claims": ["generates 25% more energy", "won the 'Eco Innovator 2024' award"]}
Example Output: {"generates 25% more energy": "Search results mention improved efficiency, but specific figures vary.", "won the 'Eco Innovator 2024' award": "Official press release confirms the award."}
""",
    description="Searches for evidence for each extracted claim using Google Search.",
)
```
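The search agent is asked to return one entry per claim, keyed by the original claim text, but nothing enforces that. A small check (again a hypothetical helper, not part of ADK) can report claims that received no evidence and keys that match no claim, for example when the model rewords a claim. The second evidence entry below is made-up illustrative data.

```python
def reconcile_evidence(claims: list[str], evidence: dict[str, str]) -> dict[str, list[str]]:
    """Compare the claim list with the evidence map produced by the search step."""
    return {
        "missing": [c for c in claims if c not in evidence],
        "unexpected": [k for k in evidence if k not in claims],
    }

claims = ["generates 25% more energy", "won the 'Eco Innovator 2024' award"]
evidence = {
    "generates 25% more energy": "Search results mention improved efficiency, but specific figures vary.",
    "won the Eco Innovator award": "Official press release confirms the award.",  # reworded key
}
print(reconcile_evidence(claims, evidence))
# {'missing': ["won the 'Eco Innovator 2024' award"], 'unexpected': ['won the Eco Innovator award']}
```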

```python
# Agent 3: Fact-Check Claims based on Evidence (No tool needed)
fact_check_agent = LlmAgent(
    name="FactCheckAgent",
    model=model_config,  # Use the configured model
    instruction="""You are a Fact-Checking AI Analyst.
Input consists of the original 'claims' list (from step 1) and the 'evidence_summaries' JSON (from step 2).
Analyze the 'evidence_summaries' provided for EACH original 'claim'.
Determine if the claim is 'Supported', 'Contradicted', or 'Unsubstantiated' based *strictly* on the provided evidence summary text.
Provide brief reasoning (1 sentence) only if status is 'Contradicted' or 'Unsubstantiated'.
Output ONLY a valid JSON object with a single key "fact_check_results". The value should be a list of objects, each with keys: "claim" (string), "status" (string: 'Supported'/'Contradicted'/'Unsubstantiated'), "reasoning" (string: empty if 'Supported').
Example Input: (Claims list & Evidence Summaries JSON implicitly passed)
Example Output:
{"fact_check_results": [{"claim": "generates 25% more energy", "status": "Unsubstantiated", "reasoning": "Evidence summary did not confirm the specific 25% figure."}, {"claim": "won the 'Eco Innovator 2024' award", "status": "Supported", "reasoning": ""}]}
""",
    description="Evaluates claims based on summarized search evidence.",
)
```
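The Fact-Check Agent's prompt defines a small output contract: three allowed statuses, and reasoning that is present only for 'Contradicted' and 'Unsubstantiated'. A sketch of a validator for that contract (the function name and error messages are illustrative, not from the original code) lets downstream code reject malformed reports instead of silently printing them. The sample report is the example output from the prompt above.

```python
ALLOWED_STATUSES = {"Supported", "Contradicted", "Unsubstantiated"}

def validate_fact_check_report(report: dict) -> list[str]:
    """Return a list of problems found in a {"fact_check_results": [...]} report."""
    results = report.get("fact_check_results")
    if not isinstance(results, list):
        return ["'fact_check_results' must be a list"]
    problems = []
    for i, item in enumerate(results):
        if not isinstance(item, dict):
            problems.append(f"item {i}: expected an object")
            continue
        status = item.get("status")
        reasoning = item.get("reasoning", "")
        if status not in ALLOWED_STATUSES:
            problems.append(f"item {i}: unknown status {status!r}")
        elif status == "Supported" and reasoning:
            problems.append(f"item {i}: 'Supported' should have empty reasoning")
        elif status != "Supported" and not reasoning:
            problems.append(f"item {i}: {status} requires reasoning")
    return problems

example = {"fact_check_results": [
    {"claim": "generates 25% more energy", "status": "Unsubstantiated",
     "reasoning": "Evidence summary did not confirm the specific 25% figure."},
    {"claim": "won the 'Eco Innovator 2024' award", "status": "Supported", "reasoning": ""},
]}
print(validate_fact_check_report(example))  # []
print(validate_fact_check_report({"fact_check_results": [{"claim": "x", "status": "True"}]}))
# ["item 0: unknown status 'True'"]
```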

```python
# Define the Sequential Pipeline
# Sub-agents run in order on the same session, so each one sees the earlier
# agents' responses in the conversation history.
fact_checking_pipeline = SequentialAgent(
    name="FactCheckingPipeline",
    sub_agents=[
        extract_claims_agent,   # Step 1
        search_evidence_agent,  # Step 2
        fact_check_agent,       # Step 3
    ],
    description="A pipeline to extract claims, search for evidence, and fact-check marketing text.",
)

# Set up the Runner
try:
    factcheck_runner = Runner(
        agent=fact_checking_pipeline,
        app_name=APP_NAME_FACTCHECK,
        session_service=session_service,
    )
    print("✅ FactCheck Runner initialized successfully.")
except Exception as e:
    print(f"❌ Error initializing FactCheck Runner: {e}")
    traceback.print_exc()
    exit()
```

```python
# Define the Interaction Function
async def call_fact_check_pipeline(marketing_text: str, user_id: str, session_id: str):
    """Runs the marketing content fact-checking pipeline."""
    print("\n>>> Starting Fact-Checking Pipeline for Text:")
    print(f"'''\n{marketing_text[:500].strip()}...\n'''")  # Show input snippet
    print(f">>> User: {user_id}, Session: {session_id}")

    # Create the session. In ADK 1.x, create_session is a coroutine and must be
    # awaited (early 0.x releases exposed a synchronous method instead).
    await session_service.create_session(
        app_name=APP_NAME_FACTCHECK, user_id=user_id, session_id=session_id
    )
    print(f"FactCheck session created with ID: {session_id}")

    try:
        # Prepare the initial input message for the first agent
        content = types.Content(role="user", parts=[types.Part(text=marketing_text)])

        # Run the agent pipeline
        print("Running fact-checking pipeline...")
        start_run_time = time.time()
        final_event = None

        # Stream events from the runner
        async for event in factcheck_runner.run_async(
            user_id=user_id, session_id=session_id, new_message=content
        ):
            # Each event records the name of the agent that produced it in `author`
            print(f"  ...Event from: {event.author}")  # Basic event logging
            # Add more detailed logging if needed (e.g., tool calls);
            # check the ADK documentation for specific event attributes.
            if event.is_final_response():
                # Each sub-agent emits a final response; the last one (FactCheckAgent) is kept.
                final_event = event

        end_run_time = time.time()
        print(f"Fact-checking pipeline run complete in {end_run_time - start_run_time:.2f} seconds.")

        # Process the final output from the last agent (FactCheckAgent)
        print("\n--- Final Fact-Checking Results ---")
        if final_event and final_event.content and final_event.content.parts:
            final_output_text = final_event.content.parts[0].text
            print(f"Raw Output (FactChecker):\n{final_output_text}")  # Show raw output
            final_json = clean_and_parse_json(final_output_text)  # Attempt to parse

            if final_json and "fact_check_results" in final_json:
                print("\n--- Parsed Fact-Check Status ---")
                results = final_json["fact_check_results"]
                if isinstance(results, list):
                    if not results:
                        print("(No verifiable claims were extracted from the input text)")
                    for item in results:
                        if isinstance(item, dict):  # Ensure item is a dictionary
                            claim = item.get("claim", "N/A")
                            status = item.get("status", "N/A")
                            reasoning = item.get("reasoning", "")
                            print(f"- Claim: \"{claim}\"")
                            print(f"  Status: {status}")
                            if reasoning:  # Only print reasoning if it exists
                                print(f"  Reasoning: {reasoning}")
                        else:
                            print(f"  (Error: Result item expected dictionary, got {type(item)})")
                else:
                    print("(Error: 'fact_check_results' key found, but value is not a list)")
            elif final_json:
                print("(Error: Parsed JSON, but missing 'fact_check_results' key)")
            # else: clean_and_parse_json already printed a warning
        elif final_event and final_event.error_message:
            print(f"❌ Pipeline ended with error: {final_event.error_message}")
        else:
            print("❌ Final FactCheck Output: (No final event captured or content missing)")

    except Exception as e:
        print(f"❌ An error occurred during the fact-checking pipeline execution: {e}")
        traceback.print_exc()
```
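Because `SequentialAgent` runs three `LlmAgent`s, the event stream typically contains a final response from each of them, and the loop simply keeps overwriting `final_event`; the value left at the end therefore comes from the last sub-agent, FactCheckAgent. The pattern can be seen in isolation with a fake, ADK-free event stream. `FakeEvent` and `fake_run_async` are hypothetical stand-ins for ADK's `Event` and `Runner.run_async`, reduced to the two members the loop uses.

```python
import asyncio
from dataclasses import dataclass

@dataclass
class FakeEvent:
    author: str
    text: str
    final: bool

    def is_final_response(self) -> bool:
        return self.final

async def fake_run_async():
    """Hypothetical stand-in for Runner.run_async: yields events from three sub-agents in order."""
    for author in ("ExtractClaimsAgent", "SearchEvidenceAgent", "FactCheckAgent"):
        yield FakeEvent(author, f"{author} is working...", final=False)
        yield FakeEvent(author, f"{author} output", final=True)
        await asyncio.sleep(0)  # Give control back to the loop, as a network-bound stream would

async def last_final_event():
    final_event = None
    async for event in fake_run_async():
        if event.is_final_response():
            final_event = event  # Overwritten by each sub-agent's final response
    return final_event

print(asyncio.run(last_final_event()).author)  # FactCheckAgent
```

If you also need an earlier step's output (for example, the extracted claims), filtering on the event's `author` is one option instead of keeping only the last final response.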

```python
# --- Example Usage ---
async def main():
    """Runs an example for the fact-checking pipeline."""
    user_id = "marketing_dept_01"
    factcheck_session_id = f"factcheck_run_{time.time()}_{time.monotonic_ns()}"  # Unique ID per run

    # Sample marketing text with various claims
    marketing_text_to_check = """
Discover the Apex Phone Z: now 40% faster with our new Quantum Chip!
Enjoy an unprecedented 60-hour battery life. It was awarded 'Gadget of the Year' by TechCrunch in 2024.
Our screen is made with Gorilla Glass Victus 3 for ultimate durability.
This phone is simply the best - guaranteed to make your friends jealous!
It also reduces your carbon footprint by 10%. Get yours today!
"""
    await call_fact_check_pipeline(marketing_text_to_check, user_id, factcheck_session_id)


# --- Run the main async function ---
if __name__ == "__main__":
    print("🚀 Starting Fact-Checking Agentic Workflow...")
    try:
        asyncio.run(main())
    except RuntimeError as e:
        # asyncio.run() refuses to start inside an already running event loop
        # (e.g., Jupyter/Colab) and says so in its error message.
        if "cannot be called from a running event loop" in str(e):
            print("\n--------------------------------------------------------------------")
            print("INFO: Detected running event loop (e.g., Jupyter/Colab).")
            print("✅ Please execute 'await main()' in a cell directly.")
            print("--------------------------------------------------------------------")
        else:
            raise
    print("\n✅ Fact-Checking Agentic Workflow finished.")

# --- Reminder for Jupyter/Colab Users ---
# If you are in a notebook, run this in a cell after defining everything above
# (top-level await only works in notebooks, so it stays commented out in a script):
# await main()
```
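The `__main__` block decides between `asyncio.run()` and a notebook hint by matching the text of a `RuntimeError`. A less brittle alternative, sketched here under the assumption that you control the entry point, is to ask asyncio directly whether a loop is already running in the current thread: `asyncio.get_running_loop()` returns the loop when one is running and raises `RuntimeError` otherwise. `event_loop_is_running` and `demo` are hypothetical names; `demo` stands in for `main`.

```python
import asyncio

def event_loop_is_running() -> bool:
    """True if called from inside a running event loop (e.g., a Jupyter/Colab cell)."""
    try:
        asyncio.get_running_loop()
        return True
    except RuntimeError:
        return False

async def demo() -> str:
    return "pipeline finished"

if event_loop_is_running():
    print("Inside a running loop: use `await demo()` in the cell instead.")
else:
    print(asyncio.run(demo()))  # pipeline finished
```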

You’ll see the raw JSON output, followed by a parsed version like this:

```text
--- Final Fact-Checking Results ---
Raw Output (FactChecker):
{
  "fact_check_results": [
    {"claim": "now 40% faster with our new Quantum Chip", "status": "Unsubstantiated", "reasoning": "Search results mention a new chip and speed improvements, but the specific 40% figure was not confirmed."},
    {"claim": "Enjoy an unprecedented 60-hour battery life", "status": "Contradicted", "reasoning": "Evidence found suggests typical battery life is significantly lower based on reviews and tests."},
    {"claim": "awarded 'Gadget of the Year' by TechCrunch in 2024", "status": "Supported", "reasoning": ""},
    {"claim": "made with Gorilla Glass Victus 3", "status": "Unsubstantiated", "reasoning": "Search results confirm Gorilla Glass Victus, but specification of 'Victus 3' was not found."},
    {"claim": "reduces your carbon footprint by 10%", "status": "Unsubstantiated", "reasoning": "No reliable evidence found in search results to support this specific environmental claim."}
  ]
}

--- Parsed Fact-Check Status ---
- Claim: "now 40% faster with our new Quantum Chip"
  Status: Unsubstantiated
  Reasoning: Search results mention a new chip and speed improvements, but the specific 40% figure was not confirmed.
- Claim: "Enjoy an unprecedented 60-hour battery life"
  Status: Contradicted
  Reasoning: Evidence found suggests typical battery life is significantly lower based on reviews and tests.
- Claim: "awarded 'Gadget of the Year' by TechCrunch in 2024"
  Status: Supported
- Claim: "made with Gorilla Glass Victus 3"
  Status: Unsubstantiated
  Reasoning: Search results confirm Gorilla Glass Victus, but specification of 'Victus 3' was not found.
- Claim: "reduces your carbon footprint by 10%"
  Status: Unsubstantiated
  Reasoning: No reliable evidence found in search results to support this specific environmental claim.
```
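In practice, a parsed report like this is most useful as a triage list: 'Supported' claims can pass, while 'Contradicted' and 'Unsubstantiated' ones go to a human reviewer. A minimal sketch, using the claim and status fields of three of the results shown above as input (the `needs_review` name and the "Contradicted first" ordering are our own choices, not part of the original code):

```python
def needs_review(report: dict) -> list[tuple[str, str]]:
    """Return (status, claim) pairs that are not 'Supported', Contradicted first."""
    priority = {"Contradicted": 0, "Unsubstantiated": 1}
    flagged = [
        (item.get("status", "Unknown"), item.get("claim", ""))
        for item in report.get("fact_check_results", [])
        if item.get("status") != "Supported"
    ]
    # Unknown statuses sort last but are still surfaced for review.
    return sorted(flagged, key=lambda pair: priority.get(pair[0], 2))

report = {"fact_check_results": [
    {"claim": "now 40% faster with our new Quantum Chip", "status": "Unsubstantiated"},
    {"claim": "Enjoy an unprecedented 60-hour battery life", "status": "Contradicted"},
    {"claim": "awarded 'Gadget of the Year' by TechCrunch in 2024", "status": "Supported"},
]}
for status, claim in needs_review(report):
    print(f"{status}: {claim}")
# Contradicted: Enjoy an unprecedented 60-hour battery life
# Unsubstantiated: now 40% faster with our new Quantum Chip
```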

This fact-checking agent isn’t just generating text; it’s performing a research and verification task grounded in external information via the Google Search tool. This grounding is what elevates AI agents from creative generators to reliable assistants.

By connecting the agent’s analysis to real-time, verifiable data, we build systems that are:

- More Accurate: Less prone to making things up (hallucination).

- More Trustworthy: Users can have higher confidence in the output.

- More Useful: Capable of handling tasks requiring up-to-date, real-world knowledge.

This automated pipeline provides a scalable way to uphold accuracy standards, build customer trust, and mitigate risks associated with inaccurate marketing claims.

We’ve successfully constructed an automated fact-checking pipeline using Google’s Agent Development Kit. By combining specialized LLM agents in a sequence and equipping one with the Google Search tool, we created a system capable of analyzing text, researching claims, and delivering a verifiable assessment of factual accuracy.

This example demonstrates the power of ADK for building sophisticated, tool-using AI agents that can tackle complex, real-world tasks requiring grounded reasoning. You can adapt this pattern for various verification, research, or data validation workflows, bringing a new level of accuracy and reliability to your AI-powered applications. Start exploring ADK and see how you can build more trustworthy AI solutions today!

I hope to share more use cases and examples of how ADK can be used to build agents that significantly transform our day-to-day operations.

Source Credit: https://medium.com/google-cloud/building-a-trustworthy-ai-automated-fact-checking-with-googles-agent-development-kit-292e84967261?source=rss—-e52cf94d98af—4