I built this open-source service with one goal in mind: To make BigQuery backups&restores simple, scalable, and accessible to everyone.

Whether you’re trying to meet compliance requirements, refresh dev/test/prod environments or just have peace of mind, this tool is there to help. It provides an out-of-the-box way to automate backups and restores of your BigQuery datasets and tables, supporting formats like Avro, CSV, Parquet, and JSON.

The solution which discards the need to reinvent the wheel.

You can

- 🛡️ Backup entire datasets or selected tables

- 🔄 Restore them with ease, even across projects

- 💾 Export to portable formats

- ⚡ Run everything on Cloud Run or your container platform of choice

🔗 Ready to skip the story and dive into code?

Check out the source code here and get started.

Companies that use Google BigQuery for their data warehousing requirements frequently run into the same sort of problem. Although BigQuery has great built-in features, such as time travel, cross-region replication, and multi-region deployments, these features may not be sufficient in some situations

- Regulations: Many sectors must keep backups entirely outside of the Google Cloud ecosystem, sometimes on-prem or with other cloud service providers.

- Data Recovery windows: The 7 day time travel limit of BigQuery tables might not be enough to meet long term backup needs.

- Cross-Environment Data Refresh: Without the right tools, it might be difficult to refresh development environments with production data.

I decided to develop an open-source, reusable service that meets all of these objectives as it was obvious that other teams are also developing more or less same functionalities repeatedly.

The BigQuery Backup and Restore Automation service provides a complete solution for

- Extracting data from BigQuery tables

- Storing it in portable formats (AVRO, CSV, JSON, Parquet)

- Restoring it when needed, even to different projects or datasets

The service is containerized, scalable, and designed to run on various compute platforms in Google Cloud, with Cloud Run being the default deployment option.

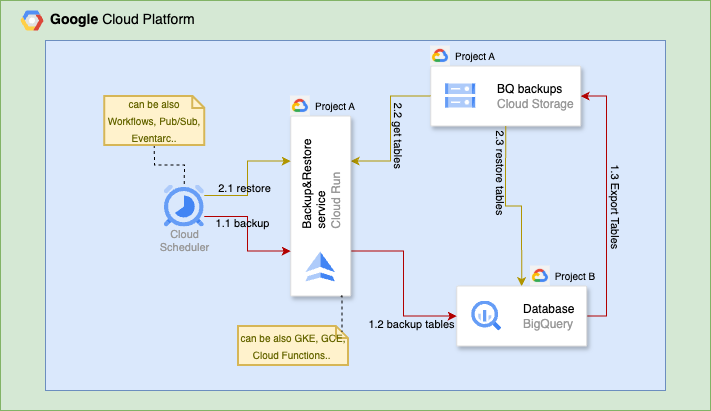

Above is sample implementation architecture of mine. As you can see this architecture is used to build the image running in cloud run which is the part I have open-sourced. You can use that image in any conainairized platform of your choice, it can be also GKE. For me using cloud run was just easier. I have also used cloud scheduler to take regular backups and restore operations, you might use other gcp components to intiate the process or just hit it from your localhost.

- Discovery: The service can discover all datasets in a project or work with specific datasets and tables by using table filters.

- Snapshots: For each eligible table, a point-in-time snapshot is created. This ensures backup consistency even if data is changing during the backup process.

- Export: The snapshots are exported to Google Cloud Storage in your chosen format (AVRO, CSV, JSON, or Parquet).

- Parallelization: Operations can be parallelized for large-scale backups with configurable thread pools.

- Dataset Creation: For each dataset being restored, a new dataset with prefix “restored_” is created.

- Table Discovery: The service discovers the tables to restore from your GCS backup location.

- Import: Tables are imported into the new datasets, with configurable options for how to handle existing data.

- Multi Threading: Restores can also be parallelized for faster processing.

The service is available as a Docker image from both Google Artifact Registry and GitHub Packages. Here’s a simplified deployment path

- Pull the image from on of the above mentioned public registries.

- Deploy to Cloud Run and Configure with appropriate service account permissions

- Set up a GCS bucket for your backups

- Hit the backup and restore endpoints with your backup/restore json requests.

{

"bigQuery": {

"projectId": "my-gcp-project"

},

"backupStorage": {

"gcsOptions": {

"uri": "gs://my-backup-bucket",

"exportOptions": {

"format": "AVRO",

"overwrite": true

}

}

}

}

{

"bigQuery": {

"projectId": "my-restore-project",

"datasetsToRestore": [

"sales_data", "analytics_dataset"

]

},

"restoreStorage": {

"gcsOptions": {

"uri": "gs://my-backup-bucket",

"backupTimestamp": "20240101_120000",

"projectIdOfBackup": "source-project-id",

"importOptions": {

"format": "AVRO",

"writeDisposition": "WRITE_TRUNCATE"

}

}

}

}

More detailed usage guide can be found in project readme file.

to make our service to operate properly, several permissions are needed:

- Bigquery Data Editor (either project level or dataset lever) and Bigquery User roles for the service account of your conatiner runner choice (for me it was sa of cloud run)

- Storage Admin role for GCS bucket operations, if you have custom role which contains storage.buckets.get, storage.objects.list, storage.objects.create permissions than that would be also enough.

For large datasets

- Remember increasing thread pool size for parallel processing

- Use AVRO format (which is default format for the service) for best performance and compatibility

A gap in native GCP capabilities is filled by BigQuery Backup and Restore Automation by helping you meet compliance needs, refresh environments or just sleep better at night knowing your data is safe.

The purpose of making this an open-source solution is to provide a reliable, tried-and-true method of handling BigQuery backups while assisting teams in avoiding having to rewrite the same logic. If you want to contribute make sure you also check open feature requests.

Source Credit: https://medium.com/google-cloud/your-open-source-bigquery-backup-restore-buddy-6ecf85d20c26?source=rss—-e52cf94d98af—4