
A step-by-step guide to setting up a RAG agent in under 2 minutes and querying across multiple corpora (knowledge bases) using an ADK agent.

In my previous article, Building Vertex AI RAG Engine with Gemini 2 Flash LLM, we explored how to build a RAG agent programmatically using the Vertex AI RAG Engine with the Gemini 2 Flash LLM. Vertex AI RAG Engine is a fully managed service for building and deploying RAG implementations on our own data and methods.

In this article, we will build a RAG agent on the same retrieval-augmented generation (RAG) principles, this time using the Agent Development Kit (ADK). We will upload a document to Google Cloud Storage (GCS) through ADK Web, create a corpus, import the document into the corpus, and then run a RAG query that retrieves across all corpora.

We will ingest documents from the recent Google and Kaggle Gen AI Intensive 5-day course into GCS and then into Vertex AI RAG corpora, and retrieve them with a simple prompt. The documents cover the following topics:

1. Foundation Models & Prompt Engineering: Resources on large language models and effective prompt design

2. Embeddings & Vector Stores: Details on text embeddings and vector databases

3. Generative AI Agents: Information on agent design, implementation, and usage

4. Domain-Specific LLMs: Techniques for applying LLMs to solve domain-specific problems

5. MLOps for Generative AI: Deployment and production considerations for GenAI systems

The Vertex AI RAG Engine, part of the Vertex AI Platform, enables Retrieval-Augmented Generation (RAG) by providing a robust data framework for building context-aware large language model (LLM) applications. It allows us to enhance model responses by applying large language models (LLMs) to our data, a process known as context augmentation, which is core to implementing RAG.

A corpus, also called an index, is a collection of documents or other information sources that is queried to retrieve relevant context for response generation.

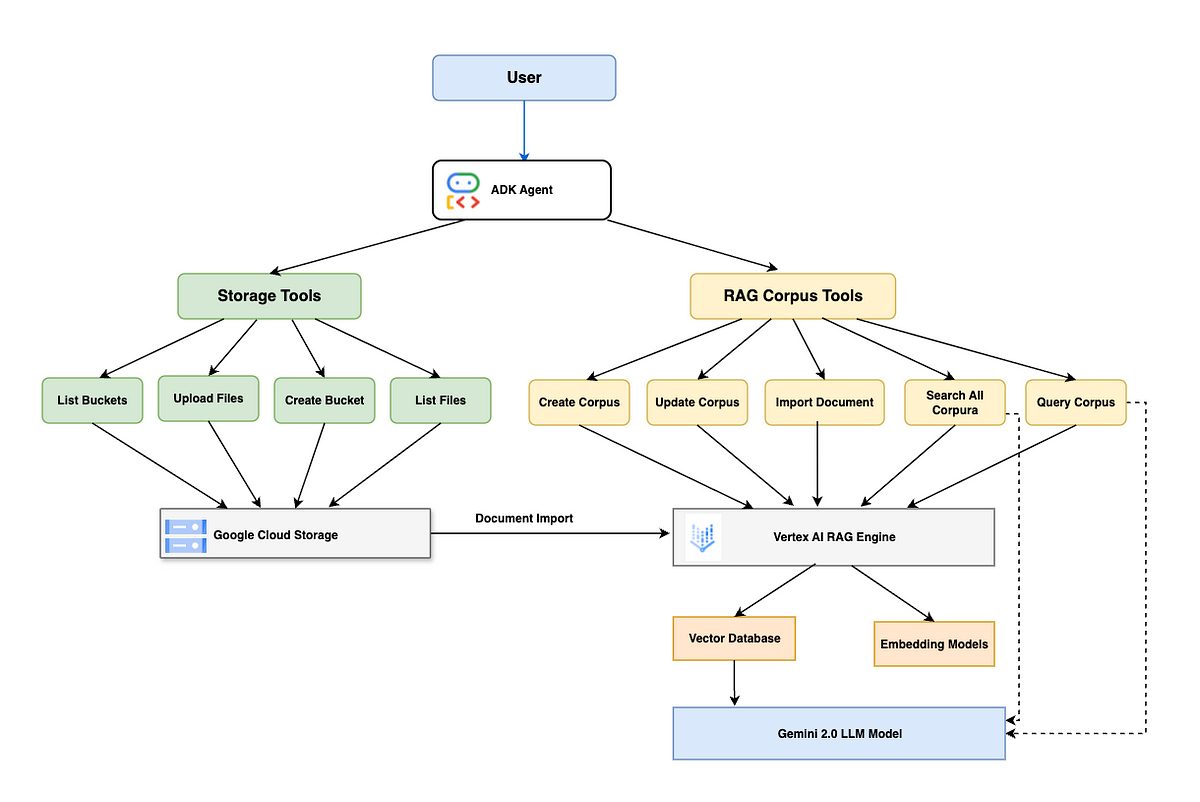

Below is a detailed explanation of the components and their interactions, enabling complex RAG operations to be performed through a simple, natural language interface, while maintaining a clean and maintainable codebase.

Clear Separation of Concerns:

- Storage operations focused on GCS.

- RAG operations focused on Vertex AI

- The ADK agent handles tool orchestration

Core Components

1. User Interface Layer

Accepts natural language commands and queries from users using ADK Web and CLI.

2. Agent Orchestration Layer

The ADK agent acts as the orchestrator and routes each request to the appropriate FunctionTool based on user input.

3. Storage Tools:

A collection of functions for managing Google Cloud Storage

- create_bucket_tool: Creates new GCS buckets

- list_buckets_tool: Lists available GCS buckets

- upload_file_gcs_tool: Uploads files to GCS buckets

- list_blobs_tool: Lists files within GCS buckets

4. RAG Corpus Tools — Corpus Management:

Functions for managing Vertex AI RAG corpora

- create_corpus_tool: Creates new RAG corpora

- update_corpus_tool: Updates corpus metadata

- list_corpora_tool: Lists all available corpora

- get_corpus_tool: Gets details of a specific corpus

- delete_corpus_tool: Deletes a corpus

5. RAG Corpus Tools — File Management:

- import_document_tool: Imports documents from GCS into a corpus

- list_files_tool: Lists files in a corpus

- get_file_tool: Gets details of a specific file

- delete_file_tool: Deletes a file from a corpus

6. RAG Corpus Tools — Query Tools:

- query_rag_corpus_tool: Queries a specific corpus for relevant information

- search_all_corpora_tool: Searches across all corpora

7. Google Cloud Services Layer

- Google Cloud Storage (GCS): Stores the original document files

  - Organizes files in buckets by topic or domain

  - Provides secure, scalable object storage

- Vertex AI RAG Engine: Manages the RAG pipeline

  - Corpora: Collections of indexed documents

  - Embedding Models: Transform text to numerical vector representations

  - Vector Database: Stores and retrieves vector embeddings efficiently

- Files are uploaded to Google Cloud Storage (GCS) buckets using Storage Tools.

- Files are imported from Google Cloud Storage (GCS) into RAG corpora using RAG Corpus Tools.

- Vertex AI RAG Engine processes files:

  - Splits documents into chunks

  - Generates embeddings for each chunk

  - Indexes the embeddings in the vector database

- The user submits a query through the ADK interface.

- ADK agent routes the query to the appropriate RAG query tools

- Query tools convert the question to an embedding.

- A vector database performs a similarity search.

- Relevant text chunks are retrieved with metadata.

- (Optional) Retrieved contexts are used to ground a Gemini response

- Results are formatted and returned to the user.
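The flow above can be sketched end to end in plain Python. This is only an illustration of the chunk, embed, index, and retrieve steps: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for the managed vector database, and every function name here is hypothetical rather than part of the Vertex AI RAG Engine API.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 8) -> list[str]:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_distance(a: Counter, b: Counter) -> float:
    """Return 1 - cosine similarity; 1.0 means the vectors share no terms."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 if norm == 0 else 1.0 - dot / norm


def build_index(documents: dict[str, str]) -> list[dict]:
    """Chunk every document and store each chunk with its vector and source."""
    return [
        {"source": name, "text": chunk, "vector": embed(chunk)}
        for name, text in documents.items()
        for chunk in chunk_text(text)
    ]


def retrieve(index: list[dict], query: str, top_k: int = 2) -> list[dict]:
    """Embed the query and return the top_k closest chunks with metadata."""
    q = embed(query)
    scored = [
        {"source": e["source"], "text": e["text"], "distance": cosine_distance(q, e["vector"])}
        for e in index
    ]
    return sorted(scored, key=lambda r: r["distance"])[:top_k]


docs = {
    "agents.pdf": "Agents use tools to act on the world and plan multi step tasks",
    "embeddings.pdf": "Embeddings map text to vectors so similar text lands close together",
}
index = build_index(docs)
hits = retrieve(index, "how do embeddings map text to vectors", top_k=1)
print(hits[0]["source"], round(hits[0]["distance"], 3))
```

In the real system each of these steps is handled by the managed service; the sketch only shows how the pieces relate to one another.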

Let us build a RAG agent using ADK + Vertex AI RAG Engine

Pre-Requisites

- Python 3.11+ installed

- Google Gemini Generative AI access via API key

Project Structure

```
adk-vertex-ai-rag-engine/
├── rag/                      # Main project package
│   ├── __init__.py           # Package initialization
│   ├── agent.py              # The main RAG corpus manager agent
│   ├── config/               # Configuration directory
│   │   └── __init__.py       # Centralized configuration settings
│   └── tools/                # ADK function tools
│       ├── __init__.py       # Tools package initialization
│       ├── corpus_tools.py   # RAG corpus management tools
│       └── storage_tools.py  # GCS bucket management tools
└── README.md                 # Project documentation
```

```shell
# Clone the repository
git clone https://github.com/arjunprabhulal/adk-vertex-ai-rag-engine.git
cd adk-vertex-ai-rag-engine

# Set up a virtual environment (macOS or Unix)
python -m venv .venv && source .venv/bin/activate

# Install dependencies
pip install -r requirements.txt
```

Configure the GCP project, grant it Cloud Storage and Vertex AI permissions, and fetch an API key from https://ai.google.dev/

```shell
# Configure your Google Cloud project
export GOOGLE_CLOUD_PROJECT="your-project-id"
export GOOGLE_CLOUD_LOCATION="us-central1"

# Enable required Google Cloud services
gcloud services enable aiplatform.googleapis.com --project=${GOOGLE_CLOUD_PROJECT}
gcloud services enable storage.googleapis.com --project=${GOOGLE_CLOUD_PROJECT}

# Set up IAM permissions
gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} \
    --member="user:YOUR_EMAIL@domain.com" \
    --role="roles/aiplatform.user"
gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} \
    --member="user:YOUR_EMAIL@domain.com" \
    --role="roles/storage.objectAdmin"

# Set up Gemini API key
# Get your API key from Google AI Studio: https://ai.google.dev/
export GOOGLE_API_KEY=your_gemini_api_key_here

# Set up authentication credentials
# Option 1: Use gcloud application-default credentials (recommended for development)
gcloud auth application-default login

# Option 2: Use a service account key (for production or CI/CD environments)
# Download your service account key from GCP Console and set the environment variable
export GOOGLE_APPLICATION_CREDENTIALS=/path/to/your/service-account-key.json
```
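Before starting the agent, it can help to confirm that the exported variables are actually visible to Python. The following is a minimal sketch, not part of the repository; `missing_settings` and `REQUIRED_VARS` are hypothetical names, and the list of required variables simply mirrors the exports shown above.

```python
import os

# Variables exported in the setup steps above
REQUIRED_VARS = ("GOOGLE_CLOUD_PROJECT", "GOOGLE_CLOUD_LOCATION", "GOOGLE_API_KEY")


def missing_settings(env: dict[str, str], required=REQUIRED_VARS) -> list[str]:
    """Return the names of required variables that are unset or blank."""
    return [name for name in required if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_settings(dict(os.environ))
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("Environment looks complete.")
```

Passing the environment in as a dictionary keeps the check easy to test without touching the real shell environment.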

Configure all GCP-related settings (GCS, Vertex AI embedding configuration, and LLM details) in `rag/config/__init__.py`:

- Defines configuration constants for GCS and Vertex AI RAG operations

- Sets reasonable defaults for parameters like an embedding model and result counts

- Centralized configuration for easy changes and maintenance

```python
# Project and location settings
PROJECT_ID = "your-project-id"  # Your Google Cloud project ID
LOCATION = "us-central1"        # Google Cloud region

# RAG configuration defaults
RAG_DEFAULT_EMBEDDING_MODEL = "text-embedding-004"  # Default embedding model
RAG_DEFAULT_TOP_K = 10                              # Number of results to return for a single query
RAG_DEFAULT_SEARCH_TOP_K = 5                        # Number of results per corpus for multi-corpus search
RAG_DEFAULT_VECTOR_DISTANCE_THRESHOLD = 0.5         # Similarity threshold
RAG_DEFAULT_PAGE_SIZE = 50                          # Default pagination size for listing operations

# GCS configuration defaults
GCS_DEFAULT_STORAGE_CLASS = "STANDARD"        # Standard storage class for buckets
GCS_DEFAULT_LOCATION = "US"                   # Multi-regional location for buckets
GCS_DEFAULT_CONTENT_TYPE = "application/pdf"  # Default content type for uploads
GCS_LIST_BUCKETS_MAX_RESULTS = 50             # Max results for bucket listing
GCS_LIST_BLOBS_MAX_RESULTS = 100              # Max results for blob listing

# Logging configuration
LOG_LEVEL = "INFO"
LOG_FORMAT = "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
```
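To make the roles of `RAG_DEFAULT_TOP_K` and `RAG_DEFAULT_VECTOR_DISTANCE_THRESHOLD` concrete, here is a small sketch of how a result list could be narrowed by those two settings. It assumes each retrieved context carries a `distance` field where smaller means closer; `select_contexts` is a hypothetical helper, and whether the service treats the boundary value as inclusive is an assumption here.

```python
RAG_DEFAULT_TOP_K = 10
RAG_DEFAULT_VECTOR_DISTANCE_THRESHOLD = 0.5


def select_contexts(
    contexts: list[dict],
    top_k: int = RAG_DEFAULT_TOP_K,
    max_distance: float = RAG_DEFAULT_VECTOR_DISTANCE_THRESHOLD,
) -> list[dict]:
    """Keep contexts within the distance threshold, closest first, at most top_k."""
    close_enough = [c for c in contexts if c["distance"] <= max_distance]
    return sorted(close_enough, key=lambda c: c["distance"])[:top_k]


sample = [
    {"text": "a", "distance": 0.7},  # dropped: beyond the threshold
    {"text": "b", "distance": 0.2},
    {"text": "c", "distance": 0.4},
]
print([c["text"] for c in select_contexts(sample)])
```

A lower threshold returns fewer but closer matches; a larger `top_k` returns more context at the cost of a longer prompt.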

- Imports required libraries for Vertex AI and ADK.

- Initializes the Vertex AI client with project and location settings

- Creates a new RAG corpus with the specified name, description, and embedding model

- Configures the embedding model using Google’s publisher model identifier

- Returns a structured response with corpus details or error information

- Includes error handling via try-except block

```python
from typing import Any, Dict, Optional

import vertexai
from google.adk.tools import FunctionTool
from vertexai.preview import rag

# Relative import assumes this module lives at rag/tools/corpus_tools.py
from ..config import LOCATION, PROJECT_ID, RAG_DEFAULT_EMBEDDING_MODEL

# Initialize the Vertex AI client with project and location settings
vertexai.init(project=PROJECT_ID, location=LOCATION)


def create_rag_corpus(
    display_name: str,
    description: Optional[str] = None,
    embedding_model: Optional[str] = None
) -> Dict[str, Any]:
    """
    Creates a new RAG corpus in Vertex AI.

    Args:
        display_name: A human-readable name for the corpus
        description: Optional description for the corpus
        embedding_model: The embedding model to use (default: text-embedding-004)

    Returns:
        A dictionary containing the created corpus details including:
        - status: "success" or "error"
        - corpus_name: The full resource name of the created corpus
        - corpus_id: The ID portion of the corpus name
        - display_name: The human-readable name provided
        - error_message: Present only if an error occurred
    """
    if embedding_model is None:
        embedding_model = RAG_DEFAULT_EMBEDDING_MODEL

    try:
        # Configure embedding model
        embedding_model_config = rag.EmbeddingModelConfig(
            publisher_model=f"publishers/google/models/{embedding_model}"
        )

        # Create the corpus
        corpus = rag.create_corpus(
            display_name=display_name,
            description=description or f"RAG corpus: {display_name}",
            embedding_model_config=embedding_model_config,
        )

        # Extract corpus ID from the full name
        corpus_id = corpus.name.split('/')[-1]

        return {
            "status": "success",
            "corpus_name": corpus.name,
            "corpus_id": corpus_id,
            "display_name": corpus.display_name,
            "message": f"Successfully created RAG corpus '{display_name}'"
        }
    except Exception as e:
        # Report the failure as data so the agent can relay it to the user
        return {
            "status": "error",
            "error_message": str(e),
            "message": f"Failed to create RAG corpus: {str(e)}"
        }
```
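The function above splits the corpus ID out of the full resource name. As a small sketch of that relationship, the helpers below convert between the two forms. They assume the resource name follows the `projects/{project}/locations/{location}/ragCorpora/{id}` pattern; both helper names are hypothetical and not part of the SDK.

```python
def corpus_resource_name(project_id: str, location: str, corpus_id: str) -> str:
    """Build a full corpus resource name from its parts (assumed pattern)."""
    return f"projects/{project_id}/locations/{location}/ragCorpora/{corpus_id}"


def corpus_id_from_name(corpus_name: str) -> str:
    """Return the last path segment of a resource name, i.e. the corpus ID."""
    return corpus_name.rstrip("/").split("/")[-1]


name = corpus_resource_name("your-project-id", "us-central1", "1234567890")
print(name)
print(corpus_id_from_name(name))
```

Tools that accept a short `corpus_id` from the user can use this kind of helper to rebuild the full name before calling the service.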

...

..

```python
# Create FunctionTools from the functions for the RAG corpus management tools
create_corpus_tool = FunctionTool(create_rag_corpus)
update_corpus_tool = FunctionTool(update_rag_corpus)
list_corpora_tool = FunctionTool(list_rag_corpora)
get_corpus_tool = FunctionTool(get_rag_corpus)
delete_corpus_tool = FunctionTool(delete_rag_corpus)
import_document_tool = FunctionTool(import_document_to_corpus)

# Create FunctionTools from the functions for the RAG file management tools
list_files_tool = FunctionTool(list_rag_files)
get_file_tool = FunctionTool(get_rag_file)
delete_file_tool = FunctionTool(delete_rag_file)

# Create FunctionTools from the functions for the RAG query tools
query_rag_corpus_tool = FunctionTool(query_rag_corpus)
search_all_corpora_tool = FunctionTool(search_all_corpora)
process_grounding_metadata_tool = FunctionTool(process_grounding_metadata)
```

- Wraps each function in an ADK FunctionTool

- The ADK agent can directly use these tools

- Organized by functionality: corpus management, file management, and query tools
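A function tool is useful to an agent only if the model can see what the function is called, what it does, and which arguments it takes. The sketch below uses the standard `inspect` module to show the kind of metadata a plain Python function exposes. It is an illustration only and does not reproduce how ADK builds its tool declarations; `describe_tool` is a hypothetical helper, and the `top_k` parameter on the stub is an assumption.

```python
import inspect
from typing import Any, Dict, Optional


def query_rag_corpus(corpus_id: str, query_text: str, top_k: Optional[int] = None) -> Dict[str, Any]:
    """Queries a specific corpus for relevant information."""
    # Stub body for illustration; the real tool calls Vertex AI
    return {"status": "success", "corpus_id": corpus_id, "query": query_text, "top_k": top_k}


def describe_tool(func) -> Dict[str, Any]:
    """Collect the name, docstring summary, and parameters of a tool function."""
    signature = inspect.signature(func)
    doc = inspect.getdoc(func) or ""
    params = signature.parameters.items()
    return {
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "required": [n for n, p in params if p.default is inspect.Parameter.empty],
        "optional": [n for n, p in params if p.default is not inspect.Parameter.empty],
    }


print(describe_tool(query_rag_corpus))
```

This is why clear names, type hints, and docstrings on each tool function matter: they are the main signal the model has when deciding which tool to call.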

- Imports necessary libraries for GCS operations

- Initializes the storage client with the project ID

- Extracts file data from the user’s message in the ADK context

- Handles file naming and content type determination

- Uploads the file data to the specified GCS bucket

- Returns upload details, including the GCS URI for subsequent import

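```python
from typing import Any, Dict, Optional

from google.adk.tools.tool_context import ToolContext
from google.cloud import storage

# Relative import assumes this module lives at rag/tools/storage_tools.py
from ..config import GCS_DEFAULT_CONTENT_TYPE, PROJECT_ID


def upload_file_to_gcs(
    tool_context: ToolContext,
    bucket_name: str,
    file_artifact_name: str,
    destination_blob_name: Optional[str] = None,
    content_type: Optional[str] = None
) -> Dict[str, Any]:
    """Uploads a file from ADK artifacts to a Google Cloud Storage bucket."""
    if content_type is None:
        content_type = GCS_DEFAULT_CONTENT_TYPE

    try:
        file_data = None
        # Check if user_content contains a file attachment
        if (hasattr(tool_context, "user_content") and
                tool_context.user_content and
                tool_context.user_content.parts):
            # Take the first inline part with an application/* MIME type
            for part in tool_context.user_content.parts:
                if hasattr(part, "inline_data") and part.inline_data:
                    if part.inline_data.mime_type.startswith("application/"):
                        file_data = part.inline_data.data
                        break

        if not file_data:
            # Assumed completion: the original excerpt does not show this branch
            return {"status": "error", "error_message": "No file attachment found in the user message"}

        # We found file data in the user message
        if not destination_blob_name:
            destination_blob_name = file_artifact_name
        if content_type == "application/pdf" and not destination_blob_name.lower().endswith(".pdf"):
            destination_blob_name += ".pdf"

        # Upload to GCS
        client = storage.Client(project=PROJECT_ID)
        bucket = client.bucket(bucket_name)
        blob = bucket.blob(destination_blob_name)
        blob.upload_from_string(
            data=file_data,
            content_type=content_type
        )

        # Assumed completion: the original excerpt ends at the upload call
        return {
            "status": "success",
            "bucket": bucket_name,
            "blob": destination_blob_name,
            "gcs_uri": f"gs://{bucket_name}/{destination_blob_name}"
        }
    except Exception as e:
        return {"status": "error", "error_message": str(e)}
```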
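The GCS URI is the link between the upload step and the later import step, so it is worth seeing how it is put together and taken apart. Here is a minimal sketch with two hypothetical helpers, `build_gcs_uri` and `parse_gcs_uri`; they are not part of the repository or the storage client library.

```python
def build_gcs_uri(bucket_name: str, blob_name: str) -> str:
    """Join a bucket and blob name into a gs:// URI."""
    return f"gs://{bucket_name}/{blob_name.lstrip('/')}"


def parse_gcs_uri(uri: str) -> tuple[str, str]:
    """Split a gs:// URI into (bucket, blob); blob is '' for a bare bucket."""
    prefix = "gs://"
    if not uri.startswith(prefix):
        raise ValueError(f"Not a GCS URI: {uri!r}")
    bucket, _, blob = uri[len(prefix):].partition("/")
    if not bucket:
        raise ValueError(f"GCS URI has no bucket: {uri!r}")
    return bucket, blob


uri = build_gcs_uri("adk-agents-llm", "agents.pdf")
print(uri)
print(parse_gcs_uri(uri))
```

Validating the URI before handing it to an import tool gives a clearer error than a failed service call.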

- Imports all the function tools created in corpus_tools.py and storage_tools.py

- Creates an ADK agent with a name, description, and model configuration

- Adds all tools to the agent, giving it the ability to perform various RAG operations

- This agent is the entry point for all user interactions

```python
from google.adk.agents import Agent
from google.adk.tools.load_memory_tool import load_memory_tool

# AGENT_NAME, AGENT_MODEL, and AGENT_OUTPUT_KEY are assumed to be defined in rag/config
from .config import AGENT_MODEL, AGENT_NAME, AGENT_OUTPUT_KEY
from .tools import corpus_tools, storage_tools

# Create the RAG management agent
agent = Agent(
    name=AGENT_NAME,
    model=AGENT_MODEL,
    description="Agent for managing and searching Vertex AI RAG corpora and GCS buckets",
    instruction="""
    You are a helpful assistant who manages and searches RAG corpora in Vertex AI and Google Cloud Storage buckets.

    You can help users with these main types of tasks:

    1. GCS OPERATIONS:
       - Upload files to GCS buckets (ask for bucket name and filename)
       - Create, list, and get details of buckets
       - List files in buckets

    2. RAG CORPUS MANAGEMENT:
       - Create, update, list, and delete corpora
       - Import documents from GCS to a corpus (requires gcs_uri)
       - List, get details, and delete files within a corpus

    3. CORPUS SEARCHING:
       - SEARCH ALL CORPORA: Use search_all_corpora(query_text="your question") to search across ALL available corpora
       - SEARCH SPECIFIC CORPUS: Use query_rag_corpus(corpus_id="ID", query_text="your question") for a specific corpus
       - When the user asks a question or for information, use the search_all_corpora tool by default.
       - If the user specifies a corpus ID, use the query_rag_corpus tool for that corpus.
       - IMPORTANT - CITATION FORMAT:
         - When presenting search results, ALWAYS include the citation information
         - Format each result with its citation at the end: "[Source: Corpus Name (Corpus ID)]"
         - You can find citation information in each result's "citation" field
         - At the end of all results, include a Citations section with the citation_summary information

    Always confirm operations before executing them, especially for delete operations.

    - For any GCS operation (upload, list, delete, etc.), always include the gs:// URI in your response to the user.
      When creating, listing, or deleting items (buckets, files, corpora, etc.), display each as a bulleted list,
      one per line, using the appropriate emoji: ℹ️ for buckets and information, 🗂️ for files, etc.
      For example, when listing GCS buckets:
      - 🗂️ gs://bucket-name/
    """,
    tools=[
        # RAG corpus management tools
        corpus_tools.create_corpus_tool,
        corpus_tools.update_corpus_tool,
        corpus_tools.list_corpora_tool,
        corpus_tools.get_corpus_tool,
        corpus_tools.delete_corpus_tool,
        corpus_tools.import_document_tool,
        # RAG file management tools
        corpus_tools.list_files_tool,
        corpus_tools.get_file_tool,
        corpus_tools.delete_file_tool,
        # RAG query tools
        corpus_tools.query_rag_corpus_tool,
        corpus_tools.search_all_corpora_tool,
        # GCS bucket management tools
        storage_tools.create_bucket_tool,
        storage_tools.list_buckets_tool,
        storage_tools.get_bucket_details_tool,
        storage_tools.upload_file_gcs_tool,
        storage_tools.list_blobs_tool,
        # Memory tool for accessing conversation history
        load_memory_tool,
    ],
    # Output key automatically saves the agent's final response in the state under this key
    output_key=AGENT_OUTPUT_KEY
)
```
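The citation rules in the instruction are easier to picture with a concrete rendering. The sketch below formats a list of results the way the prompt asks the model to: each result ends with `[Source: Corpus Name (Corpus ID)]`, followed by a Citations section. The result dictionaries and their `corpus_name` and `corpus_id` keys are assumptions for illustration; in the agent itself the model produces this text, not a helper function.

```python
def format_with_citations(results: list[dict]) -> str:
    """Render results with per-result citations and a closing Citations section."""
    lines: list[str] = []
    unique_citations: list[str] = []
    for result in results:
        citation = f"[Source: {result['corpus_name']} ({result['corpus_id']})]"
        lines.append(f"- {result['text']} {citation}")
        if citation not in unique_citations:
            unique_citations.append(citation)  # keep first-seen order
    lines.append("Citations:")
    lines.extend(f"- {citation}" for citation in unique_citations)
    return "\n".join(lines)


sample_results = [
    {"text": "Agents call tools.", "corpus_name": "adk-agents-llm", "corpus_id": "111"},
    {"text": "Agents plan steps.", "corpus_name": "adk-agents-llm", "corpus_id": "111"},
]
print(format_with_citations(sample_results))
```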

Below is a complete example workflow showing how to set up the entire RAG agent with the Google Gen AI Intensive PDF documents:

1. Creating a GCS Bucket

adk run rag

Create seven Google Cloud Storage buckets, one per document, using the default configuration.
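Bucket creation fails if the name breaks the GCS naming rules, so a quick local check can save a round trip. The sketch below covers only a simplified subset of those rules (3 to 63 characters; lowercase letters, digits, dashes, underscores, and dots; starting and ending with a letter or digit; no `goog` prefix and no `google` substring). `is_plausible_bucket_name` is a hypothetical helper, and a name that passes can still be rejected by the service, for example because it is already taken.

```python
import re

# Simplified pattern: 3-63 chars, lowercase alphanumerics at both ends
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")


def is_plausible_bucket_name(name: str) -> bool:
    """Check a bucket name against a simplified subset of the GCS naming rules."""
    if not _BUCKET_NAME.fullmatch(name):
        return False
    return not name.startswith("goog") and "google" not in name


for candidate in ("adk-prompt-engineering", "ADK-Agents", "ab", "-adk-agents"):
    print(candidate, is_plausible_bucket_name(candidate))
```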

2. Upload PDF Files to GCS Buckets

Upload all GenAI PDFs to the seven buckets created

adk web

Prompt used for uploading files through the ADK web

Upload the file "promptengineering.pdf" to the GCS bucket gs://adk-prompt-engineering/ and use "promptengineering.pdf" as the destination blob name. Do not ask for confirmation.

Upload the file "foundational-large-language-models-text-generation.pdf" to the GCS bucket gs://adk-foundation-llm/ and use "foundational-large-language-models-text-generation.pdf" as the destination blob name. Do not ask for confirmation.

Upload the file "agents.pdf" to the GCS bucket gs://adk-agents-llm/ and use "agents.pdf" as the destination blob name. Do not ask for confirmation.

Upload the file "agents-companion.pdf" to the GCS bucket gs://adk-agents-companion/ and use "agents-companion.pdf" as the destination blob name. Do not ask for confirmation.

Upload the file "emebddings-vector-stores.pdf" to the GCS bucket gs://adk-embedding-vector-stores/ and use "emebddings-vector-stores.pdf" as the destination blob name. Do not ask for confirmation.

Upload the file "operationalizing-generative-ai-on-vertex-ai.pdf" to the GCS bucket gs://adk-operationalizing-genai-vertex-ai/ and use "operationalizing-generative-ai-on-vertex-ai.pdf" as the destination blob name. Do not ask for confirmation.

Upload the file "solving-domain-specific-problems-using-llms.pdf" to the GCS bucket gs://adk-solving-domain-problem-using-llms/ and use "solving-domain-specific-problems-using-llms.pdf" as the destination blob name. Do not ask for confirmation.

3. Create RAG Corpora and Import Files

Create the RAG corpora and import the files above from GCS into them.

Multiple file imports into RAG corpora using the ADK CLI

The prompts below were used to create the RAG corpora and import the files from GCS into them.

Create a RAG corpus named "adk-agents-companion" with description of rag as "adk-agents-companion" and import the gs://adk-agents-companion/agents-companion.pdf into RAG.

Create a RAG corpus named "adk-agents-llm" with description "adk-agents-llm" and import the file gs://adk-agents-llm/agents.pdf into the RAG corpus.

Create a RAG corpus named "adk-embedding-vector-stores" with description "adk-embedding-vector-stores" and import the file gs://adk-embedding-vector-stores/emebddings-vector-stores.pdf into the RAG corpus.

Create a RAG corpus named "adk-foundation-llm" with description "adk-foundation-llm" and import the file gs://adk-foundation-llm/foundational-large-language-models-text-generation.pdf into the RAG corpus.

Create a RAG corpus named "adk-operationalizing-genai-vertex-ai" with description "adk-operationalizing-genai-vertex-ai" and import the file gs://adk-operationalizing-genai-vertex-ai/operationalizing-generative-ai-on-vertex-ai.pdf into the RAG corpus.

Create a RAG corpus named "adk-solving-domain-problem-using-llms" with description "adk-solving-domain-problem-using-llms" and import the file gs://adk-solving-domain-problem-using-llms/solving-domain-specific-problems-using-llms.pdf into the RAG corpus.
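Because these prompts follow one template, they can be generated from a list of bucket and file pairs instead of being typed by hand. The sketch below does that; `corpus_prompt` is a hypothetical helper, and the pairs are copied from the prompts above.

```python
# (bucket, file) pairs taken from the prompts above
IMPORTS = [
    ("adk-agents-llm", "agents.pdf"),
    ("adk-embedding-vector-stores", "emebddings-vector-stores.pdf"),
    ("adk-foundation-llm", "foundational-large-language-models-text-generation.pdf"),
]


def corpus_prompt(bucket: str, filename: str) -> str:
    """Build the create-and-import prompt for one bucket/file pair."""
    return (
        f'Create a RAG corpus named "{bucket}" with description "{bucket}" '
        f"and import the file gs://{bucket}/{filename} into the RAG corpus."
    )


for bucket, filename in IMPORTS:
    print(corpus_prompt(bucket, filename))
```

The generated lines can then be pasted into the ADK CLI session one at a time.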

4. Query across all Corpora for retrieval, including citation

Example with RAG Query and Retrieval with Corpora Citation
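Searching across all corpora amounts to a fan-out and merge: query each corpus, tag every hit with the corpus it came from, and rank the combined list. The sketch below shows that pattern with a fake in-memory backend. It is not the repository's `search_all_corpora` implementation; the function name `search_across_corpora`, the `query_one` callback, and the sample data are all assumptions for illustration.

```python
from typing import Callable

QueryFn = Callable[[str, str, int], list[dict]]


def search_across_corpora(
    corpora: dict[str, str],
    query_text: str,
    query_one: QueryFn,
    top_k_per_corpus: int = 5,
) -> dict:
    """Query every corpus, tag hits with their corpus, and rank by distance."""
    results = []
    for corpus_id, display_name in corpora.items():
        for hit in query_one(corpus_id, query_text, top_k_per_corpus):
            results.append({**hit, "corpus_id": corpus_id, "corpus_name": display_name})
    results.sort(key=lambda r: r["distance"])  # closest matches first
    return {"status": "success", "corpora_searched": len(corpora), "results": results}


# Fake backend standing in for a per-corpus retrieval call
FAKE_HITS = {
    "111": [{"text": "Agents call tools.", "distance": 0.30}],
    "222": [
        {"text": "Embeddings are vectors.", "distance": 0.12},
        {"text": "Vector stores index embeddings.", "distance": 0.45},
    ],
}


def fake_query(corpus_id: str, query_text: str, top_k: int) -> list[dict]:
    return FAKE_HITS.get(corpus_id, [])[:top_k]


merged = search_across_corpora(
    {"111": "adk-agents-llm", "222": "adk-embedding-vector-stores"},
    "what is an embedding?",
    fake_query,
    top_k_per_corpus=1,
)
for r in merged["results"]:
    print(r["distance"], r["corpus_name"], r["text"])
```

Keeping the corpus name and ID on each hit is what makes the per-result citation possible later.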

You can access all the code used in this article on my GitHub: https://github.com/arjunprabhulal/adk-vertex-ai-rag-engine

- 403 Errors: Make sure you’ve authenticated with `gcloud auth application-default login`

- Resource Exhausted: Check your quota limits in the GCP Console

- Upload Issues: Ensure your file format is supported and file size is within limits

In this article, we’ve demonstrated how to build a powerful Retrieval-Augmented Generation (RAG) agent using Google’s Agent Development Kit (ADK) and Vertex AI RAG Engine. This integration showcases a modern approach to creating AI assistants with deep knowledge integration capabilities.

The solution we’ve created offers remarkable versatility in both interaction modes and corpus management:

- Flexible Interfaces: We leveraged both the ADK CLI for command-line automation and ADK Web for an intuitive chat-like experience, providing multiple ways to interact with our RAG system.

- Cross-Corpus Intelligence: Our implementation can seamlessly query across multiple knowledge corpora simultaneously, intelligently retrieving the most relevant information regardless of which corpus contains it.

- Source Transparency: Every piece of retrieved information is accompanied by detailed citations that trace back to the source document and corpus, ensuring transparency and trustworthiness.

- Conversational Memory: By utilizing ADK’s memory capabilities, our agent maintains conversation history throughout the entire workflow, creating a coherent and contextual experience for users.

- Rapid Deployment: The entire system can be set up in under 2 minutes, making it practical for both prototyping and production use cases.

This implementation demonstrates how modern AI frameworks and cloud services can be combined to create sophisticated knowledge retrieval systems that are both powerful and accessible. The modular architecture ensures that the system can be extended with additional capabilities as both ADK and Vertex AI continue to evolve.

By bridging the gap between raw document repositories and natural language interactions, this RAG agent represents the future of enterprise knowledge management, where information from diverse sources becomes instantly accessible through natural conversation.

Source Credit: https://medium.com/google-cloud/build-a-rag-agent-using-google-adk-and-vertex-ai-rag-engine-bb1e6b1ee09d