Welcome to the May 1–15, 2025 edition of Google Cloud Platform Technology Nuggets. The nuggets are also available in the form of a Podcast.

Building a chatbot that can understand and respond in the user's preferred language is harder than it looks. You might have to mix and match multiple models (Gemma, Gemini) and potentially other translation APIs as well. Model Context Protocol (MCP) has emerged as the communication protocol that standardizes how you connect your LLMs to tools and external services. Check out this blog post that highlights such an architecture with sample code.

AI production workloads push the boundaries on capacity, predictable response times and enhanced support. In simple terms, you need a dedicated prediction endpoint, and Vertex AI Prediction Dedicated Endpoints could be the solution. They provide dedicated networking, optimized network latency, support for larger payloads and longer request timeouts, along with support for both streaming and gRPC. If your production workloads have stricter security requirements, dedicated endpoints can use Google Cloud Private Service Connect (PSC) to provide a secure and performance-optimized path for prediction requests. Check out the blog post for more details.

Agentic applications are no longer expected to handle just text requests and responses. They are expected to let a user talk to them and even to see the environment in which they operate, so that they can interpret it and formulate a response. How do you build such capabilities into your applications? The Gemini Live API is the way to go. Its capabilities are immense:

- It enables low-latency bidirectional voice and video interactions with Gemini.

- You can provide end users with the experience of natural, human-like voice conversations, and with the ability to interrupt the model’s responses using voice commands.

- The model can process text, audio, and video input, and it can provide text and audio output.

- It leverages multimodal data — audio, visual, and text — in a continuous livestream.

Check out this blog post, Build live voice-driven agentic applications with Vertex AI Gemini Live API, which demonstrates a real-world use case that integrates the Gemini Live API to perform a real-time visual inspection of a motor, suggest repair procedures and more. This is definitely the future of building agentic apps that can truly hear, see and act.

Among the terms you have been hearing recently in the AI space, “vibe coding” and “model context protocol” would definitely rank near the top of the list. How about combining them, and using vibe coding to write an MCP server? The blog post demonstrates a couple of methods to do exactly that: one via the Gemini App and, if you are looking to code or automate this, another via the Gen AI SDK. Interesting times ahead for coders.

If you are building agentic applications with LangChain that integrate with PostgreSQL databases, the langchain-postgres package is a great efficiency booster. It provides integrations that allow LangChain to connect to and use PostgreSQL databases for tasks such as storing chat history, acting as a vector store for embeddings, and loading documents. At Cloud Next ’25, several updates to this package were announced, and I quote from the blog post:

- Vector index support so that developers can set up their vector database from LangChain

- Support for flexible database schemas to build more powerful applications that are easier to maintain

- Enhanced LangChain vector store APIs with a clear separation of database setup and usage, adhering to the principle of least privilege, for improved security.

Evaluating models is an extremely important task given the range of models now available that can generate multimodal outputs. Help could be on the way in the form of Gecko, a rubric-based and interpretable autorater for evaluating generative AI models that provides a customizable way to assess the performance of image and video generation models. The blog post gives a nice description of the approach Gecko takes: it first breaks the prompt down into semantic elements that need to be verified, then generates a set of questions about those elements and their relationships, and finally scores the answers and presents the results. Along the way, Gemini provides justifications, so that you better understand how it arrived at the results.

Gecko is now available via the Gen AI Evaluation Service in Vertex AI.
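To make the rubric idea concrete, here is a minimal toy sketch of the flow described above: turn prompt elements into yes/no questions, ask a judge, and average the answers while keeping a justification per question. It is not Gecko's implementation or the Gen AI Evaluation Service API. The names `RubricItem`, `score_output` and the `judge` callback are all hypothetical, and the judge is a simple set lookup standing in for a model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class RubricItem:
    question: str       # yes/no question derived from one semantic element
    answer: bool        # the judge's verdict for that question
    justification: str  # short explanation kept for interpretability


def score_output(
    elements: List[str],
    judge: Callable[[str], bool],
) -> Tuple[float, List[RubricItem]]:
    """Build one question per prompt element, ask the judge, average the verdicts."""
    items: List[RubricItem] = []
    for element in elements:
        question = f"Does the output show {element}?"
        verdict = judge(element)
        reason = f"'{element}' was {'found' if verdict else 'not found'} by the judge"
        items.append(RubricItem(question, verdict, reason))
    score = sum(item.answer for item in items) / len(items) if items else 0.0
    return score, items


# Hypothetical stand-in for a model-based judge: things "seen" in a generated image.
detected = {"a red car", "a bridge"}
score, rubric = score_output(
    ["a red car", "a bridge", "rain"],
    judge=lambda element: element in detected,
)
```

Here two of the three elements are found, so the score is 2/3, and each `RubricItem` records which question failed and why.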

DORA’s latest research, the Impact of Generative AI in Software Development report, brought up some data that was not exactly music to the ears of those who have been highlighting the great boost that AI has brought to code generation. The report highlighted (refer to the blog post for exact numbers) a decrease in software delivery throughput and lower software delivery stability. It goes on to note that generating more code without adhering to best practices, and without the right documentation and code quality, is likely to cause this situation. The solution: agents that help not just with coding but across the other activities that make up the software development lifecycle (SDLC), including what happens after check-in: a solid code review, testing, document generation and more. Check out the blog post that dives into this area and highlights how Code Assist agents are Google Cloud’s answer to addressing the SDLC.

There is a new series that captures the cool things that customers are building with Google Cloud. Check out the inaugural edition of this monthly update, which highlights interesting work done by a few select customers. I reproduce the list from the blog post:

- Lowe’s new Visual Scout product recommendation engine powered by Vertex AI Vector Search.

- A responsive “Driver Agent” platform created by Formula E to bring more equity to the track.

- Ester AI, a conversational tool from wealth.com that can help financial planners review wills and trusts.

- A new operational dashboard that’s improving service for African super app Yassir.

- AI-powered knowledge base health insurer SIGNAL IDUNA is using to enhance customer experience.

- How AlloyDB helped autonomous driving company Nuro manage millions of real-time image queries more efficiently and cost effectively.

- Mars Wrigley can now measure and update ads more agilely with Cortex Framework.

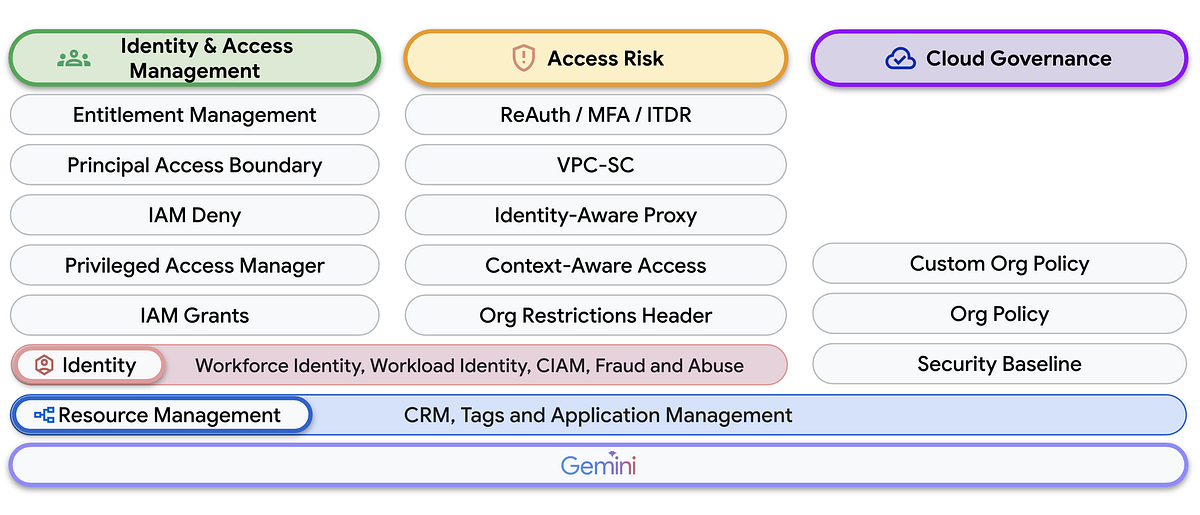

The IAM, Access Risk, and Cloud Governance portfolio is complex as the diagram below indicates. But it is a crucial part of your cloud strategy.

At Cloud Next ’25, several enhancements to services in this portfolio were announced. You should definitely check out the blog post that highlights them, along with several AI enhancements announced to help users manage this portfolio efficiently.

The 2nd edition of Google Cloud CISO Perspectives for April 2025 is out. The key topic discussed in the bulletin is how attackers and defenders are using AI. It cuts through the noise of one-off incidents that get blown out of proportion and focuses on a data-driven approach to threat intelligence.

Earth Engine is used by scientists, researchers, and developers to detect changes, map trends, and quantify differences on the Earth’s surface. It provides a multi-petabyte catalog of satellite imagery and geospatial datasets. Now imagine combining this raster data with the vector capabilities of BigQuery. This new integration is called Earth Engine in BigQuery. It allows users to perform Earth Engine raster analytics directly within BigQuery using SQL, primarily through a new function called ST_RegionStats(), and gives access to analysis-ready Earth Engine datasets in BigQuery Sharing. Check it out.

BigQuery ML contribution analysis models are now generally available. Imagine a scenario where you have retail sales data and you need to sift through it to understand the changes in sales per user. This tool helps users understand the reasons behind changes in metrics by identifying key drivers within large, multidimensional datasets, automating a process that previously required manual effort. Check out the blog post for more details.
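As a rough intuition for what "identifying key drivers" means, the toy sketch below compares a control period with a test period and ranks segments by how much their total sales changed. It is not how BigQuery ML implements contribution analysis. The row layout, the `segment_contributions` helper and the sample figures are assumptions made purely for illustration, and it uses total sales rather than sales per user.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical row layout: (region, product, sales, is_test_period)
Row = Tuple[str, str, float, bool]
Segment = Tuple[str, str]


def segment_contributions(rows: List[Row]) -> List[Tuple[Segment, float]]:
    """Return (segment, test_total - control_total), largest absolute change first."""
    totals: Dict[Segment, List[float]] = defaultdict(lambda: [0.0, 0.0])
    for region, product, sales, is_test in rows:
        # Index 0 accumulates the control period, index 1 the test period.
        totals[(region, product)][1 if is_test else 0] += sales
    deltas = [(segment, test - control) for segment, (control, test) in totals.items()]
    return sorted(deltas, key=lambda item: abs(item[1]), reverse=True)


rows: List[Row] = [
    ("EU", "shoes", 100.0, False), ("EU", "shoes", 160.0, True),
    ("US", "shoes", 200.0, False), ("US", "shoes", 190.0, True),
    ("US", "hats", 50.0, False), ("US", "hats", 55.0, True),
]
ranked = segment_contributions(rows)
```

With this sample data, the EU shoes segment surfaces first because it accounts for the largest change between the two periods.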

BigQuery has introduced “Indexing with column granularity”. What does it mean? BigQuery stores table data in physical files, and within these files, data is stored in a columnar format. The default search index in BigQuery operates at the file level, mapping data tokens to the files where they exist. This helps reduce the search space by only scanning relevant files. However, this file-level approach is less effective when search tokens are selective within specific columns but common across many or all files, potentially leading to scans of most files. The blog post discusses this problem via the example (a diagram of which is given below) where you have to efficiently search for content that has “Google Cloud Logging”.

BigQuery implements indexing with column granularity by enhancing the search index to store the mapping of data tokens along with the column information where they appear. This is in contrast to the default index which lacks this column detail. When a query is executed, BigQuery can leverage this index containing column information to more precisely pinpoint relevant data within columns.
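The difference between the two index shapes can be illustrated with a small in-memory toy. This is only a sketch of the idea, not BigQuery's storage format or index structure. The `files` layout, `build_indexes` and `candidate_files` are hypothetical names, and tokenization is a plain whitespace split.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Set, Tuple

# Hypothetical layout: each "file" holds rows, each row maps column name -> text.
files: Dict[str, List[Dict[str, str]]] = {
    "file_1": [{"title": "google cloud logging", "body": "setup guide"}],
    "file_2": [{"title": "release notes", "body": "google cloud logging changes"}],
    "file_3": [{"title": "billing faq", "body": "google cloud logging costs"}],
}


def build_indexes(data: Dict[str, List[Dict[str, str]]]):
    """Build a file-level index (token -> files) and a column-level one ((column, token) -> files)."""
    file_index: Dict[str, Set[str]] = defaultdict(set)
    column_index: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for file_name, rows in data.items():
        for row in rows:
            for column, text in row.items():
                for token in text.split():
                    file_index[token].add(file_name)
                    column_index[(column, token)].add(file_name)
    return file_index, column_index


def candidate_files(index: Dict, keys: List[Hashable]) -> Set[str]:
    """Intersect the file sets for all keys; the result is what would need scanning."""
    sets = [index.get(key, set()) for key in keys]
    return set.intersection(*sets) if sets else set()


file_index, column_index = build_indexes(files)
tokens = ["google", "cloud", "logging"]
by_file = candidate_files(file_index, tokens)
by_column = candidate_files(column_index, [("title", t) for t in tokens])
```

Searching the `title` column for the three tokens leaves all three files as candidates with the file-level index, because every file contains the tokens somewhere. The column-aware index narrows the candidates to `file_1` alone.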

In any business intelligence application, data trustworthiness is important. With generative AI now being applied everywhere, there is a risk of inaccurate and inconsistent results, such as miscalculated variables or misinterpreted definitions, which can be harmful for businesses. The solution proposed in the blog post is a semantic layer: a centralized, governed foundation that provides a single source of truth for your data and defines core business concepts consistently. LookML (Looker Modeling Language) within Looker is the layer that can help you do that. Check out the blog post for more details.

AI-assisted troubleshooting for Cloud SQL and AlloyDB was introduced at Cloud Next ’25. A simple example of where it is useful is understanding the reasons for slow query performance. The assistance can analyze query performance, highlight the cause of increased latency, provide recommendations and then, within Cloud SQL Studio, give you the option of applying the recommended actions. This service is now available in preview for AlloyDB, Cloud SQL for PostgreSQL, Cloud SQL for MySQL and Cloud SQL for SQL Server. Check out the blog post for more details.

If you have been using the Agent Development Kit (ADK) to author agents, chances are high that you need to integrate with external tools. Model Context Protocol (MCP) has fast emerged as a standard way to integrate these tools, which are now primarily being exposed in the form of MCP servers. How do you make that happen? A blog post squarely focused on developers first helps you understand the building blocks (MCP and SSE) and then demonstrates how to do the integration. It also covers Streamable HTTP, the next-generation transport protocol designed to succeed SSE for MCP communications. The interesting part of the article is not just the code but its callout of an important security issue: authentication, in this case.
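Whatever the transport (SSE or Streamable HTTP), MCP messages are JSON-RPC 2.0 objects. As a minimal sketch of what a client sends to list and invoke tools, the snippet below builds the two request payloads by hand. It does not use ADK or an MCP SDK, it skips the initialization handshake, transport and authentication entirely, and the `jsonrpc_request` helper and the `get_exchange_rate` tool with its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched to them


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name; the tool and its arguments are made up for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_exchange_rate", "arguments": {"from": "USD", "to": "EUR"}},
)

payload = json.dumps(call_tool)  # the serialized body a transport would carry
```

In practice an SDK or the ADK tooling builds and sends these messages for you. The sketch only shows the message shape that the transports discussed above carry.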

What the AI Hypercomputer actually is can sometimes be misunderstood. It provides customizable components using fully integrated hardware, open software, and flexible consumption models. The goal is to ensure that you can train your models efficiently, deliver AI-powered applications and serve models at scale, all in a cost-effective manner. At Cloud Next ’25, several updates to this platform were announced. Check out the blog post that highlights key areas like TPU (Tensor Processing Unit) and GPU infrastructure, including the new Ironwood TPU designed for inference, and enhancements to software like JetStream (Google’s JAX inference engine) and MaxDiffusion.

If you would like to share your Google Cloud expertise with your fellow practitioners, consider becoming an author for the Google Cloud Medium publication. Reach out to me via comments and/or fill out this form and I’ll be happy to add you as a writer.

Have questions, comments, or other feedback on this newsletter? Please send Feedback.

If any of your peers are interested in receiving this newsletter, send them the Subscribe link.

Source Credit: https://medium.com/google-cloud/google-cloud-platform-technology-nuggets-may-1-15-2025-54748fe3cbc4?source=rss----e52cf94d98af---4