Imagine that you’re a software developer, and you have an idea. You live in busy New York City, where there are lots of ways to commute to work: subway, bus, walking, or cycling. But you’ve noticed that the best way to get to work on any given day depends on a variety of factors: the weather outside, whether the subway’s running on time, traffic, or how urgently you need to arrive at the office. So let’s say you want to build an app that can help you decide how to commute to work each morning.

Five years ago, building this app would have taken some time. You would have needed to build a deterministic system that would talk to a variety of APIs — transit, weather, traffic, your personal calendar — to choose the best commute option for you. Maybe you could have added a machine learning model that could learn from your prior choices, but training that model would have added toil and extra time, too.

Once successful, you would have ended up with an ‘if this, then that’ application:

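```python
from typing import Optional


def choose_commute(minutes_before_first_meeting: int, weather: str) -> Optional[str]:
    # Hardcoded rule: walk only if there is plenty of time and the weather is fair.
    if minutes_before_first_meeting > 60 and weather in ("SUNNY", "PARTLY_CLOUDY"):
        return "WALK_TO_WORK"
    # Every other combination of conditions needs its own hand-written rule.
    return None
```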

This system would have been somewhat rigid, and might not have been able to account for all the edge cases and combinations. What if the app thinks you should walk to work, but you’re having some pain in your knee? Further, all the API integrations would make this app brittle: if the MTA overhauls its API, you would need to rewrite your client-side integration code.

What if instead, we could build an application that takes the user’s flexible preferences and responds using real-time data, in natural language? This is where AI agents come in.

What is an agent?

An AI agent is an application that uses AI models to make decisions and act independently in order to achieve a user-specified goal.

Unlike standard chatbots, which use generative models like Gemini or Claude for “call and response,” agents also use AI models for intermediate steps in the application that the user might not see: for instance, structuring JSON requests to weather APIs, and reasoning over the fetched API response.

Like retrieval-augmented generation (RAG) applications, AI agents might “pre-retrieve” data before asking the LLM to generate its final response. In the example above, the agent might retrieve the morning’s Google Calendar events to augment its prompt to the model.
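To make the pre-retrieval step concrete, here is a minimal sketch. It assumes a hypothetical `fetch_calendar_events()` helper standing in for a real calendar API; the point is only that retrieval happens first and its results are pasted into the prompt before any model call.

```python
from dataclasses import dataclass


@dataclass
class CalendarEvent:
    start: str  # local time, "HH:MM"
    title: str


def fetch_calendar_events() -> list[CalendarEvent]:
    # Hypothetical stand-in for a real calendar API call.
    return [
        CalendarEvent("09:30", "Team standup"),
        CalendarEvent("11:00", "Design review"),
    ]


def build_augmented_prompt(user_question: str, events: list[CalendarEvent]) -> str:
    # Retrieval happens *before* the model call; the results become prompt context.
    context = "\n".join(f"- {e.start} {e.title}" for e in events)
    return (
        "You help the user choose how to commute to work.\n"
        f"Today's calendar:\n{context}\n\n"
        f"Question: {user_question}"
    )


prompt = build_augmented_prompt("How should I get to work?", fetch_calendar_events())
print(prompt)
```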

But with agents, the model also plays the reasoning role, either replacing or adding to hardcoded logic in the application itself. In our traditional CommutePlanner app, for instance, we might always retrieve the morning’s calendar. But the AI agent version might decide to skip fetching calendar events entirely if it determines it can already make a decision from the MTA alerts, traffic, and weather alone. (“I ruled out walking because of the weather, and there are significant express-bus disruptions. I think the subway is the best option.”)
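The difference between the two versions is who decides which data to fetch. The sketch below shows a minimal tool-calling loop. `scripted_model` is a hypothetical, rule-based stand-in for an LLM so the example runs offline, and the tool names and return values are invented for illustration; in a real agent, the model would choose the next tool call (or a final answer) from the conversation so far.

```python
from typing import Callable

# Hypothetical tools; real versions would call weather, MTA, and calendar APIs.
TOOLS: dict[str, Callable[[], str]] = {
    "get_weather": lambda: "rain, 8 C",
    "get_mta_alerts": lambda: "significant express-bus disruptions",
    "get_calendar": lambda: "first meeting at 09:30",
}


def scripted_model(observations: dict[str, str]) -> tuple[str, str]:
    """Stand-in for an LLM: returns ("call", tool_name) or ("answer", text)."""
    if "get_weather" not in observations:
        return ("call", "get_weather")
    if "get_mta_alerts" not in observations:
        return ("call", "get_mta_alerts")
    # With rain and bus disruptions, the "model" has enough to decide and
    # skips the calendar lookup entirely.
    if "rain" in observations["get_weather"] and "bus" in observations["get_mta_alerts"]:
        return ("answer", "Take the subway.")
    if "get_calendar" not in observations:
        return ("call", "get_calendar")
    return ("answer", "Walk to work.")


def run_agent(max_steps: int = 5) -> tuple[str, list[str]]:
    observations: dict[str, str] = {}
    calls: list[str] = []
    for _ in range(max_steps):  # step cap guards against infinite loops
        kind, value = scripted_model(observations)
        if kind == "answer":
            return value, calls
        calls.append(value)
        observations[value] = TOOLS[value]()  # execute the chosen tool
    return "No decision reached.", calls


answer, calls = run_agent()
print(answer, calls)  # Take the subway. ['get_weather', 'get_mta_alerts']
```

Note that `get_calendar` is never called on this path: the sequence of tool calls is an outcome of the model’s reasoning rather than a fixed code path.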

Agent Benefits

AI agents have several advantages over traditional applications. They’re flexible and able to reason based on personalized inputs you might never expect (“I get nervous about cycling over bridges, can I avoid that?”). They can also handle nuance in data: not just “is it windy?” but “how windy is it? Too windy to bike safely?”

Agents are also often easier to prototype than traditional apps. Rather than building a “decision tree” to address a goal or question (“Should I shut off this machine for maintenance?” “How should I route this insurance claim?”), you can rely on an LLM to make a reasoned decision and invoke external tools as needed. Agents are outcome-driven, rather than path-driven.

Agents can also reduce the time spent building integrations with external systems, especially if you use a tools abstraction like the Model Context Protocol (MCP), which provides a layer between your agent and the external API, database, or system.
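The core idea behind such an abstraction layer can be sketched in plain Python. This is not the MCP SDK or its wire protocol; it’s a hypothetical illustration in which the agent depends only on a stable `get_alerts(line)` interface, so when the (invented) upstream response format changes, only the adapter behind the interface has to change.

```python
from typing import Protocol


class TransitAlerts(Protocol):
    """The stable interface the agent depends on."""

    def get_alerts(self, line: str) -> list[str]: ...


class LegacyMtaAdapter:
    # Hypothetical old upstream format: {"line": [alert, ...]}
    def __init__(self, payload: dict[str, list[str]]):
        self._payload = payload

    def get_alerts(self, line: str) -> list[str]:
        return self._payload.get(line, [])


class OverhauledMtaAdapter:
    # Hypothetical new upstream format: [{"route": ..., "text": ...}, ...]
    def __init__(self, payload: list[dict[str, str]]):
        self._payload = payload

    def get_alerts(self, line: str) -> list[str]:
        return [a["text"] for a in self._payload if a["route"] == line]


def summarize_line(tool: TransitAlerts, line: str) -> str:
    # Agent-side code: unchanged no matter which adapter is plugged in.
    alerts = tool.get_alerts(line)
    return f"{line}: " + ("; ".join(alerts) if alerts else "good service")


old = LegacyMtaAdapter({"A": ["Delays at 125 St"]})
new = OverhauledMtaAdapter([{"route": "A", "text": "Delays at 125 St"}])
print(summarize_line(old, "A"))
print(summarize_line(new, "A"))
```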

Lastly, agents can be proactive. Beyond running in response to a prompt, as in the example above, they can run on a timed schedule or autonomously in response to a trigger.

All of these benefits can have positive ripple effects on the end-user experience. Users can interact more naturally with your software, input their personal preferences, and, ideally, get a higher-quality and more nuanced result.

When to build an agent?

Some types of software use cases are a great fit for agents. If you want a flexible or personalized tool, such as a retail shopping helper or a customer support system, agents can work well. Agents are also great at taking autonomous action, whether as an intermediate step (“is there a 6:30 dinner reservation slot?”) or as the final task (“make the reservation”).

Agents can also speed up workflows that previously required a human in the loop, like a customer refunds system. And agents work well when you’re dealing with multiple real-time data sources, like IT tickets, insurance claims, or the weather.

But there are plenty of cases where agents might not be a fit, and that’s okay! Let’s say you’re leveraging Gemini 2.5’s long context window to generate marketing copy, and you’ve got a big 100K-token prompt that is working well for brand-tailored responses. You might be able to get by just fine with regular model calls.

Or imagine that you’ve built a Q&A chatbot for your company, using internal documentation as a vector data source. A RAG architecture might work well there. Or say that you help maintain a critical financial transaction system, which is heavily regulated. Your traditional ML classifier approach might be the best option, for now.

When deciding if an agent approach might work for you, closely examine your use case, and know that if you decide to build an agent, there will be challenges ahead.

Agent Challenges

I’ve encountered three big challenges when building AI agents.

Challenge #1: Predictability.

Because agents rely on large language models (LLMs) for reasoning, and LLM outputs are typically sampled rather than fully deterministic, it can be tough to predict exactly how the agent will reason and respond. If you ask the CommuteAgent how you should get to work, it might respond “walk” one moment and “subway” the next, even with the same data at its disposal.
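One source of this variability is sampling: models typically pick each next token at random from a probability distribution that a temperature parameter reshapes. The toy sketch below uses made-up scores for two candidate answers (not real model logits) to show how a higher temperature lets the less likely answer appear fairly often, while a temperature near zero approaches always picking the top choice.

```python
import math
import random


def sample_with_temperature(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    # Softmax over scores / temperature, then draw one option.
    scaled = {k: v / temperature for k, v in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    weights = {k: math.exp(v - top) for k, v in scaled.items()}
    total = sum(weights.values())
    options = list(weights)
    probs = [weights[k] / total for k in options]
    return rng.choices(options, weights=probs, k=1)[0]


scores = {"subway": 2.0, "walk": 1.5}  # invented scores for illustration
rng = random.Random(0)
for t in (0.01, 1.0):
    picks = [sample_with_temperature(scores, t, rng) for _ in range(1000)]
    print(f"temperature={t}: 'walk' chosen {picks.count('walk')} times out of 1000")
```

Lowering the temperature narrows this spread, but it’s only one lever; the other techniques below address predictability at the level of the agent’s design.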

Some ways to cope with this:

- Choose models for your agent that are optimized for thinking and reasoning, such as Gemini 2.5 Flash.

- Write detailed system prompts. For instance, provide descriptions for the available tools. This can help your agent call tools in the way you’d expect.

- Guide your agent to think step-by-step, for instance with chain-of-thought prompting or a ReAct architecture.

- Use state management and memory within your agent to help it remember the user’s prompts and preferences.

- Ground your agent in trusted data to reduce model hallucinations (RAG, grounding with Search).

- Use deterministic agent patterns. Some agent frameworks, like ADK, support workflow agents that control the execution of sub-agents without the use of LLMs.

- Add checks to your agent’s workflow. For instance, add a simple logic check before returning the final response (“is taxi one of the user’s approved commute methods?”). This step might also include API spec validation for tool calls, and safety checks on the final response.

- Regularly evaluate your agent to identify cases where the agent’s path or response varies from what you’d expect.
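Two of these ideas, a fixed (deterministic) execution order and a logic check before returning the final response, can be combined in a few lines. The sketch below is framework-agnostic plain Python, not ADK’s workflow-agent API. The step functions, the approved-methods set, and the fallback rule are hypothetical, and `propose_commute` stands in for an LLM call.

```python
from typing import Callable

State = dict[str, object]

APPROVED_METHODS = {"subway", "bus", "walk", "bike"}  # hypothetical user preference


def gather_weather(state: State) -> None:
    state["weather"] = "clear"  # stand-in for a weather tool call


def gather_transit(state: State) -> None:
    state["transit"] = "subway delays"  # stand-in for a transit tool call


def propose_commute(state: State) -> None:
    # Stand-in for the LLM's reasoning step; it may suggest anything.
    state["proposal"] = "taxi" if state["transit"] == "subway delays" else "subway"


def check_final_response(state: State) -> None:
    # Deterministic guardrail: never return an unapproved commute method.
    # The fallback rule here is hypothetical.
    if state["proposal"] not in APPROVED_METHODS:
        state["final"] = "walk" if state["weather"] == "clear" else "bus"
    else:
        state["final"] = state["proposal"]


# The order is fixed in code; no model decides which step runs next.
PIPELINE: list[Callable[[State], None]] = [
    gather_weather,
    gather_transit,
    propose_commute,
    check_final_response,
]


def run_pipeline() -> State:
    state: State = {}
    for step in PIPELINE:
        step(state)
    return state


result = run_pipeline()
print(result["proposal"], "->", result["final"])  # taxi -> walk
```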

Challenge #2: Stability.

The second challenge when building an agent is stability. The agent framework landscape is evolving rapidly (from ADK, to LangGraph, to CrewAI), and sometimes these frameworks introduce breaking changes. Then there’s managing your model calls and tools in a way that’s resilient and secure. These challenges can’t be entirely avoided; it’s likely you’ll need to refactor a bit of code as your chosen framework launches new features. But here are some ways to mitigate them:

- Use tool-calling abstractions, such as the Model Context Protocol (MCP). MCP provides an abstraction layer over external tools like databases or APIs. This way, if the database’s schema or API methods change, you don’t have to rewrite your client code.

- Use your agent framework’s state management, especially a status field (e.g., task_status), to help recover from interruptions and prevent infinite loops.

- Implement error-handling in your agent — for instance, retry logic, backoffs, circuit-breakers, or even a handoff to a human in the loop.

- Read up on agent security best practices. For instance, add authorization to your custom MCP servers, and avoid opening the server to the public.
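Of the error-handling options above, retry logic with exponential backoff and a hard cap on attempts is the simplest to sketch. `flaky_transit_api` is a hypothetical tool that fails twice before succeeding, and the `sleep` function is injectable so the example runs instantly. When retries are exhausted, the wrapper re-raises instead of looping forever; that is the point where a circuit breaker or a human handoff would take over.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # give up: escalate to a fallback or a human
            # Exponential backoff with jitter: roughly 0.5s, 1s, 2s, ...
            sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")


# Hypothetical flaky tool: fails twice, then succeeds.
attempts = {"n": 0}


def flaky_transit_api() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("upstream timeout")
    return "Good service on the A line"


delays: list[float] = []
print(call_with_backoff(flaky_transit_api, sleep=delays.append))  # Good service on the A line
print(attempts["n"], len(delays))  # 3 2
```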

Challenge #3: Operations.

The last challenge is perhaps the most significant when it comes to putting agents into production, and that’s operations. Even if you’re dealing with just one agent, you still might have multiple tools or models. As with any distributed system, tracking tool calls can be hard, and debugging can be tricky. Further, it’s important to monitor LLM token throughput and external API calls, especially for cost and quota purposes.

Here are some ways to keep your agent operations in check:

- Build robust evaluation datasets. Then, automate evaluation and test workflows on every commit, and before every new rollout to production.

- Log verbosely, especially in the development phase. This can help you gain transparency into what your agent is doing as it works towards its goal.

- Gather agent metrics: for instance, token throughput to and from the model (input and output). Create SLOs, and alerts for when these metrics cross certain thresholds.

- Use tracing to see tool calls in real time and to identify latency bottlenecks in your agent. Many agent frameworks have tracing built in; for instance, ADK uses OpenTelemetry.
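Here is a minimal, dependency-free sketch of the metrics and tracing ideas: a context manager times each tool call as a “span” and logs it, and a counter accumulates input and output tokens and logs a warning once a threshold is crossed. The token counts and the 5,000-token threshold are made-up values; in production you would more likely export these through OpenTelemetry or your framework’s built-in tracing than hand-roll them.

```python
import logging
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("commute_agent")


@dataclass
class TokenMeter:
    alert_threshold: int
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, input_tokens: int, output_tokens: int) -> None:
        self.input_tokens += input_tokens
        self.output_tokens += output_tokens
        total = self.input_tokens + self.output_tokens
        if total > self.alert_threshold:
            log.warning("token budget exceeded: %d > %d", total, self.alert_threshold)


@contextmanager
def span(name: str) -> Iterator[None]:
    # Times a unit of work (e.g., one tool call) and logs its duration.
    start = time.perf_counter()
    try:
        yield
    finally:
        log.info("span=%s duration_ms=%.1f", name, (time.perf_counter() - start) * 1000)


meter = TokenMeter(alert_threshold=5_000)
with span("get_weather"):
    time.sleep(0.01)  # stand-in for a real tool call
meter.record(input_tokens=3_200, output_tokens=400)  # hypothetical model-call usage
meter.record(input_tokens=1_800, output_tokens=300)  # pushes the total past 5,000
```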

All these agent challenges can be compounded when you add more agents to the mix…

Multi-agent systems

Imagine that the CommuteAgent becomes a widely adopted mobile app for commuters, with growing requests to expand beyond New York City; Washington, DC and San Francisco are the most-requested cities. To support commuters in those cities, we’d have to add new tools, for instance the DC Metro and BART APIs.

As the number of tools grows, and the system prompts expand to reach new use-cases, our CommuteAgent might become an unwieldy monolith. This mega-agent might be tough to debug, maintain, and scale.

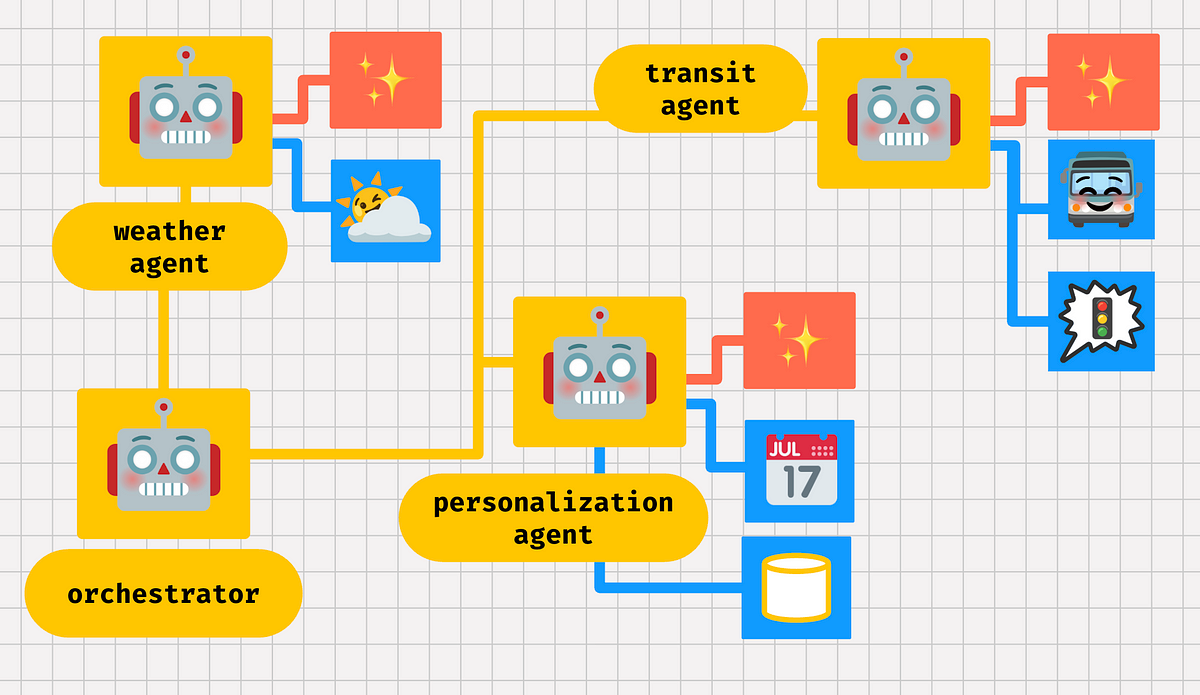

This is where a multi-agent architecture could help. Imagine that we instead decouple our CommuteAgent into four agents:

- Weather Agent: this agent gathers ambient conditions in the user’s current location.

- Transit Agent: this agent talks to multiple transit APIs depending on the user’s home city, and gathers traffic conditions.

- Personalization Agent: this agent gathers data from the user’s calendar, and other important info from a database, like whether the user has access to a bike.

- Orchestrator: this is the “parent” agent that manages the three sub-agents and returns the final response to the user.

Multi-agent systems have two key benefits. The first is response quality. When agents are decoupled into a system of experts, each sub-agent has its own system prompt, state, memory, model, model parameters, and tools. Each of those components can be tweaked for that specific expert use-case.
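Here is a minimal sketch of that structure. Each sub-agent carries its own configuration (system prompt, model name, temperature, tools), and the orchestrator fans out to the three experts and collects their findings. The model names, prompts, tool names, and canned `run` results are hypothetical placeholders for real model and tool calls; this is not the API of any particular agent framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SubAgent:
    name: str
    system_prompt: str
    model: str  # each expert can use a different (hypothetical) model
    temperature: float
    tools: list[str]
    run: Callable[[str], str]  # stand-in for the sub-agent's model + tool calls


weather = SubAgent(
    name="weather", system_prompt="Report conditions relevant to commuting.",
    model="model-small", temperature=0.0, tools=["get_forecast"],
    run=lambda city: f"{city}: light rain",
)
transit = SubAgent(
    name="transit", system_prompt="Summarize service status for the user's city.",
    model="model-small", temperature=0.0, tools=["mta_alerts", "wmata_alerts", "bart_alerts"],
    run=lambda city: f"{city}: subway on time",
)
personal = SubAgent(
    name="personalization", system_prompt="Look up schedule and preferences.",
    model="model-small", temperature=0.2, tools=["calendar", "user_profile_db"],
    run=lambda city: "first meeting 09:30; no bike",
)


def orchestrate(city: str, experts: list[SubAgent]) -> str:
    # The parent agent gathers each expert's findings; a real orchestrator
    # would pass these to its own model to write the final recommendation.
    findings = {agent.name: agent.run(city) for agent in experts}
    return "\n".join(f"[{name}] {text}" for name, text in findings.items())


print(orchestrate("Washington, DC", [weather, transit, personal]))
```

Because each expert is just a configured unit behind a common shape, the transit agent could live in its own repository and be swapped or shared without touching the others.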

The second benefit is a modular architecture that can be easier to scale, debug, maintain, and re-use. For instance, let’s say your developer friend wants to re-use your powerful transit agent for their own cool idea. You could maintain this transit agent together with your friend, in its own code repository.

And when it’s time to pull the whole multi-agent architecture together, standard protocols like Agent2Agent (A2A) can make it easier for your sub-agents to talk to each other.

Wrap-up

To recap, AI agents unlock flexible, personalized software use cases by taking a goal-oriented approach, using AI models and external tools. They provide many benefits, especially around speed of development and personalized, high-quality responses. But AI agents are also a fast-moving area that presents unique operational challenges.

Here are some agent resources to help you get started:

Image credit: Emoji Kitchen.

Source Credit: https://medium.com/google-cloud/ai-agents-in-a-nutshell-6e322b1e9cbe?source=rss—-e52cf94d98af—4