Development and production environments have different requirements, and the database world is no exception. In many cases a development database doesn't need to be up 24×7. Of course, some zealous developer might wait until 2 AM on a Saturday to work on an idea that evaded them during business hours. But for many companies, the ability to stop resources when nobody uses them is not only a way to save money but also a responsible choice that saves energy and reduces emissions.

Until recently, one way to achieve this was to delete unused instances and recreate them when they were needed again. This didn't affect the data, since data is stored at the cluster level. However, the recreated instances got new IP addresses, parameters had to be set again, and every connection based on those IPs had to be updated.

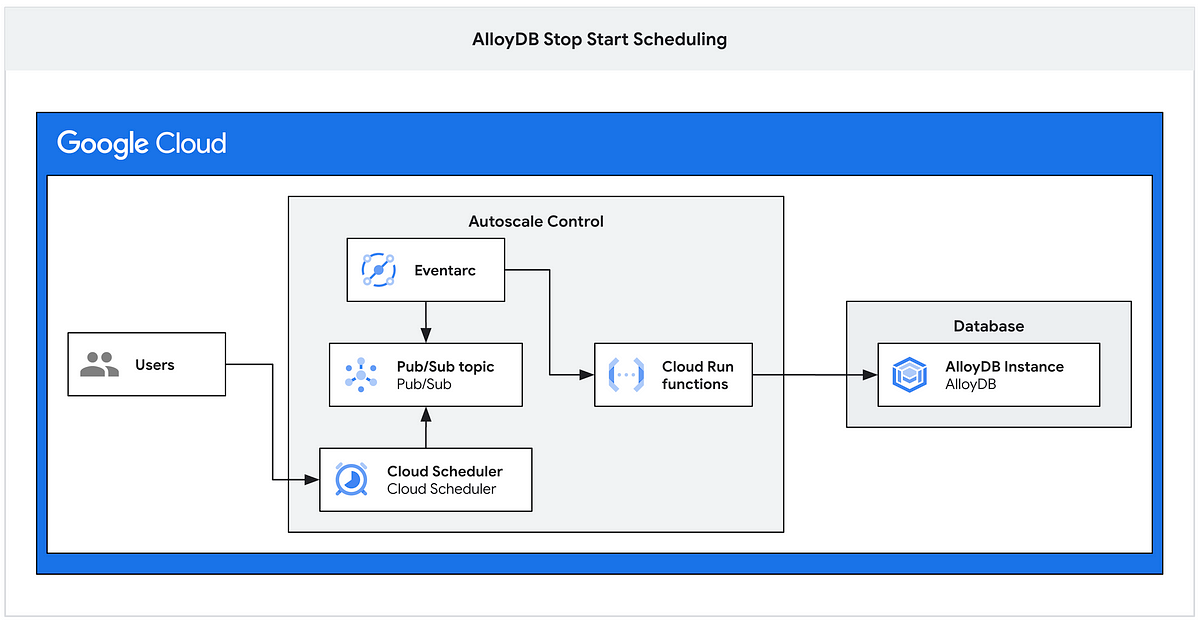

Now the AlloyDB API lets you stop and start primary instances and read pools without deleting them, preserving all network configuration and parameters. That might be a game changer for some teams and can help them manage cloud resources. Probably even more important is the ability to automate AlloyDB management and start and stop instances on a schedule. In this blog I will show how you can use Cloud Run functions, Cloud Pub/Sub and Cloud Scheduler to achieve this goal. I will go step by step, and hopefully it can serve as a reference example for developing your own solution.

Let's assume you have a brand-new project for your development environment, where you decide to deploy an AlloyDB cluster and schedule it to stop every night at 9 PM. First, you need to enable the APIs for all the components involved. Here is the list of services to enable:

- AlloyDB
- Service Networking
- Cloud Build: builds the image with the function code
- Artifact Registry: stores the image
- Cloud Run functions: runs the function
- Eventarc: triggers the function
- Cloud Pub/Sub: delivers the message that triggers the execution
- Cloud Scheduler: sends the message to Cloud Pub/Sub at the right time
- Compute Engine and Cloud Resource Manager

The easiest way to enable all of them is either through the Google Cloud Console or by running a command like the following in Google Cloud Shell:

```bash
gcloud services enable alloydb.googleapis.com \
  servicenetworking.googleapis.com \
  cloudbuild.googleapis.com \
  artifactregistry.googleapis.com \
  run.googleapis.com \
  pubsub.googleapis.com \
  eventarc.googleapis.com \
  cloudscheduler.googleapis.com \
  compute.googleapis.com \
  cloudresourcemanager.googleapis.com
```

With the APIs enabled, we can create an AlloyDB cluster, a primary instance and a couple of read pools for testing. You can follow the instructions in the documentation. By the way, did you know that if you haven't used AlloyDB in your project before, you can create a free trial AlloyDB cluster? You can read more about free trial clusters in the AlloyDB documentation.

Let's say you've created your cluster in the us-central1 region with the name my-cluster, and the primary instance is named my-cluster-primary. With the cluster up and running, it is time to work on our automation.
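The AlloyDB REST API refers to these resources by hierarchical resource names, and main.py, shown later in this post, builds its request URLs from them. A minimal sketch of that naming, assuming the example names above and a placeholder project ID (the helper functions are hypothetical, written only for illustration):

```python
# Hypothetical helpers illustrating AlloyDB resource names; not part of any SDK.
API_BASE_URL = "https://alloydb.googleapis.com/v1beta"  # same base URL as main.py


def cluster_resource(project: str, region: str, cluster: str) -> str:
    """Return the full resource name of an AlloyDB cluster."""
    return f"projects/{project}/locations/{region}/clusters/{cluster}"


def instance_resource(project: str, region: str, cluster: str, instance: str) -> str:
    """Return the full resource name of an instance inside a cluster."""
    return f"{cluster_resource(project, region, cluster)}/instances/{instance}"


# "your-project-id" is a placeholder; replace it with your real project ID.
primary = instance_resource("your-project-id", "us-central1", "my-cluster", "my-cluster-primary")
list_url = f"{API_BASE_URL}/{cluster_resource('your-project-id', 'us-central1', 'my-cluster')}/instances"
print(primary)
print(list_url)
```

The list URL has the same shape as the one main.py uses to enumerate the cluster's instances, and the instance name is what appears in the `name` field of each listed instance.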

The AlloyDB start/stop feature is controlled by the instance's activation policy attribute. For a running instance the value is "ALWAYS"; to stop the instance we change it to "NEVER", and to start it again we set it back to "ALWAYS". You can use the gcloud command to check the current activation policy for an instance:

```
gleb@cloudshell:~ (gleb-test-short-001-461915)$ gcloud alloydb instances describe my-cluster-primary --cluster my-cluster --region us-central1 --format="value(activationPolicy)"
ALWAYS
```
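Through the REST API, changing the policy is a PATCH on the instance with an update mask that names the field, which is what main.py does later in this post. As a hedged sketch, assuming the same field name and update mask that main.py uses, the mapping from operation to policy and the resulting request body could look like this (`activation_patch` is a hypothetical helper, not an SDK function):

```python
import json

# Operation names used in this post mapped to AlloyDB activation policies.
ACTIVATION_POLICY = {"start": "ALWAYS", "stop": "NEVER"}


def activation_patch(operation: str) -> tuple:
    """Return (query string, JSON body) for a PATCH that starts or stops an instance.

    Uses the same update mask and field name as update_instance_activation_http
    in main.py; this helper itself is hypothetical.
    """
    op = operation.lower()
    if op not in ACTIVATION_POLICY:
        raise ValueError(f"operation must be 'start' or 'stop', got {operation!r}")
    query = "updateMask=activation_policy"
    body = json.dumps({"activation_policy": ACTIVATION_POLICY[op]})
    return query, body


query, body = activation_patch("stop")
print(query)
print(body)
```

Rejecting anything other than start or stop up front mirrors the validation main.py performs before it calls the API.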

To update the activation policy of the AlloyDB instances, we are going to build a function that will be triggered by Eventarc. You can build the function in the Google Cloud Console, following the process described in the documentation, or from the command line. In this blog I use the command-line approach. In Cloud Shell (or on a machine with the Google Cloud SDK installed), run:

```bash
mkdir alloydb-mgmt-fnc
cd alloydb-mgmt-fnc
```

Create a requirements.txt file in the alloydb-mgmt-fnc directory with the following contents:

```
functions-framework==3.*
google-auth
requests
cloudevents
```

Next, we create a file with the function code. For the AlloyDB start/stop operations I've prepared sample Python code that sends HTTP requests to the AlloyDB API to update an instance's activation policy based on parameters passed in a Pub/Sub message. As soon as a message is published to the defined topic, Eventarc triggers the function. We will look at the exact structure of the Pub/Sub message later.

Here is the Python code I used for the function. Save it in a file named main.py.

```python
# main.py
import base64
import json
import logging
import sys
import time

import google.auth
import google.auth.transport.requests
import requests
from cloudevents.http import CloudEvent

# Set up basic logging
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

API_BASE_URL = "https://alloydb.googleapis.com/v1beta"


def get_access_token():
    """Gets a Google Cloud access token with the necessary scopes."""
    credentials, project = google.auth.default(scopes=["https://www.googleapis.com/auth/cloud-platform"])
    auth_req = google.auth.transport.requests.Request()
    credentials.refresh(auth_req)
    return credentials.token


def log_instance_details(instance_json: dict):
    """Logs all available properties from the instance's JSON response."""
    instance_name = instance_json.get('name', 'Unknown').split('/')[-1]
    logging.info("-----------------------------------------")
    logging.info(f"--- Detailed Properties for Instance: {instance_name} ---")
    for key, value in instance_json.items():
        logging.info(f" - {key}: {value}")
    logging.info("-----------------------------------------")


def control_alloydb_cluster(cloudevent: CloudEvent):
    """
    Cloud Function triggered by Pub/Sub to start/stop AlloyDB instances via HTTP.

    Args:
        cloudevent (cloudevent.http.CloudEvent): The CloudEvent representing the Pub/Sub message.
    """
    try:
        # The payload holds the Pub/Sub push envelope, either as raw bytes
        # or already parsed into a dict.
        data_payload = cloudevent.data
        if isinstance(data_payload, bytes):
            data_payload = json.loads(data_payload.decode('utf-8'))
        # The published JSON message is base64-encoded under message.data.
        pubsub_message_b64 = data_payload["message"]["data"]
        pubsub_message_json_str = base64.b64decode(pubsub_message_b64).decode('utf-8')
        message_json = json.loads(pubsub_message_json_str)
        project_id = message_json['project']
        region = message_json['region']
        cluster_name = message_json['cluster']
        operation = message_json['operation'].lower()
    except (KeyError, json.JSONDecodeError, UnicodeDecodeError, TypeError) as e:
        logging.error(f"Invalid message format or missing keys: {e}")
        return 'Invalid message format', 400

    if operation not in ['start', 'stop']:
        logging.error(f"Invalid operation '{operation}'. Must be 'start' or 'stop'.")
        return f"Invalid operation '{operation}'. Must be 'start' or 'stop'.", 400

    try:
        access_token = get_access_token()
        headers = {"Authorization": f"Bearer {access_token}"}
        # List all instances (primary and read pools) in the cluster.
        list_url = f"{API_BASE_URL}/projects/{project_id}/locations/{region}/clusters/{cluster_name}/instances"
        logging.info(f"Listing instances from: {list_url}")
        response = requests.get(list_url, headers=headers)
        response.raise_for_status()
        response_json = response.json()
        all_instances = response_json.get('instances', [])
        if not all_instances:
            logging.warning(f"No instances found for cluster {cluster_name}")
            return 'No instances found', 200

        logging.info("Found the following instances. Logging their full details:")
        for instance in all_instances:
            log_instance_details(instance)

        # Split the instances into the primary and its read pools.
        primary_instance = None
        read_pool_instances = []
        for instance in all_instances:
            if instance.get('instanceType') == 'PRIMARY':
                primary_instance = instance
            elif instance.get('instanceType') == 'READ_POOL':
                read_pool_instances.append(instance)

        if not primary_instance:
            logging.error(f"No primary instance found for cluster {cluster_name}")
            return 'No primary instance found', 404

        logging.info(f"Proceeding with '{operation}' operation...")
        if operation == 'start':
            start_cluster(access_token, primary_instance, read_pool_instances)
        elif operation == 'stop':
            stop_cluster(access_token, primary_instance, read_pool_instances)

        logging.info(f"Successfully processed '{operation}' for cluster {cluster_name}")
        return 'Operation completed successfully.', 200

    except requests.exceptions.HTTPError as e:
        logging.error(f"An HTTP error occurred: {e.response.status_code} {e.response.text}")
        return 'An internal HTTP error occurred', 500
    except Exception as e:
        logging.error(f"An unexpected error occurred: {e}", exc_info=True)
        return 'An unexpected internal error occurred', 500


def start_cluster(token, primary, read_pools):
    """Starts the primary instance first, then the read pools."""
    logging.info(f"Starting primary instance: {primary['name']}")
    update_instance_activation_http(token, primary['name'], "ALWAYS")
    logging.info("Waiting for 10 seconds before starting read pools...")
    time.sleep(10)
    for rp in read_pools:
        logging.info(f"Starting read pool instance: {rp['name']}")
        update_instance_activation_http(token, rp['name'], "ALWAYS")
    logging.info("Start operation initiated for all instances.")


def stop_cluster(token, primary, read_pools):
    """Stops the read pool instances first, then the primary instance."""
    for rp in read_pools:
        logging.info(f"Stopping read pool instance: {rp['name']}")
        update_instance_activation_http(token, rp['name'], "NEVER")
    logging.info("Waiting for 10 seconds before stopping the primary...")
    time.sleep(10)
    logging.info(f"Stopping primary instance: {primary['name']}")
    update_instance_activation_http(token, primary['name'], "NEVER")
    logging.info("Stop operation initiated for all instances.")


def update_instance_activation_http(token, instance_name, policy):
    """Updates the activation policy via an HTTP PATCH request and polls the operation."""
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json"
    }
    # instance_name is the full resource name, e.g. projects/.../instances/...
    patch_url = f"{API_BASE_URL}/{instance_name}?updateMask=activation_policy"
    payload = json.dumps({"activation_policy": policy})
    logging.info(f"Sending PATCH to {patch_url} with policy {policy}")
    response = requests.patch(patch_url, headers=headers, data=payload)
    response.raise_for_status()

    # The PATCH returns a long-running operation that we poll until done.
    operation = response.json()
    op_name = operation['name']
    op_url = f"{API_BASE_URL}/{op_name}"
    logging.info(f"Initiated operation {op_name}. Polling for completion...")

    # WARNING: This polling loop may cause the Cloud Function to time out if the
    # AlloyDB operation takes longer than the function's configured timeout.
    # For production, consider a "fire-and-forget" approach or a more robust
    # workflow using Cloud Tasks to check the operation status.
    while True:
        op_response = requests.get(op_url, headers=headers)
        op_response.raise_for_status()
        op_status = op_response.json()
        if op_status.get('done', False):
            logging.info(f"Operation {op_name} completed.")
            if 'error' in op_status:
                logging.error(f"Operation failed: {op_status['error']}")
            break
        logging.info("Operation not done yet, waiting 5 seconds...")
        time.sleep(5)
```
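The warning in update_instance_activation_http points out that its polling loop has no upper bound. One way to address this, sketched here as an assumption rather than the approach used above, is to poll against a deadline and raise instead of looping forever. `fetch_status` is a hypothetical callable you would implement as a wrapper around `requests.get(op_url, headers=headers).json()`. The `timeout_s` default is an arbitrary assumed budget that you should set below your function's configured timeout. `sleep` and `clock` are injectable so the loop can be exercised without real waiting.

```python
import time
from typing import Callable


def wait_for_operation(
    fetch_status: Callable[[], dict],
    timeout_s: float = 300.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Poll a long-running operation until it is done or a deadline passes.

    fetch_status: hypothetical callable returning the operation JSON.
    Returns the final operation JSON, raises RuntimeError if the operation
    reports an error, and raises TimeoutError if the next poll would fall
    after the deadline.
    """
    deadline = clock() + timeout_s
    while True:
        status = fetch_status()
        if status.get("done", False):
            if "error" in status:
                raise RuntimeError(f"Operation failed: {status['error']}")
            return status
        # Give up rather than sleeping past the deadline.
        if clock() + interval_s > deadline:
            raise TimeoutError(f"Operation still running; next poll would exceed {timeout_s}s")
        sleep(interval_s)
```

Unlike the original loop, this variant raises RuntimeError when the operation reports an error. In main.py, that exception would be caught by the generic `except Exception` handler in control_alloydb_cluster instead of only being logged.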

We now have everything needed to build the function: the source code in main.py and the list of required packages in requirements.txt. We can deploy the function with the gcloud command:

```bash
gcloud run deploy alloydb-mgmt-fnc \
  --source . \
  --function control_alloydb_cluster \
  --base-image python313 \
  --region us-central1 \
  --no-allow-unauthenticated
```

As you can see in the command, I've named the function alloydb-mgmt-fnc, and its entry point is the control_alloydb_cluster function. You might also notice that I explicitly disallowed unauthenticated requests. An unauthenticated endpoint would allow anybody with network access to stop or start your AlloyDB instances. You can read more about service authentication in the documentation. It usually takes around five minutes to build the image and deploy the function to Cloud Run.

Once the function is ready, we can move on to the other components. Since we plan to use Pub/Sub to pass the parameters to our function, we need to create a topic, either in the Google Cloud Console or from the command line. Here is an example that creates a topic named alloydb-mgmt:

```bash
gcloud pubsub topics create alloydb-mgmt
```

Next, we need a service that subscribes to the topic and triggers the function, passing it the message. That role is played by an Eventarc trigger. In the following code I create an Eventarc trigger in the same region as my function, subscribed to the Pub/Sub topic created earlier. Pay attention to the service account. It can be very useful to have a dedicated service account for each component, but here, for simplicity, I use the Compute Engine default service account. You can read about best practices for using service accounts in the documentation.

```bash
PROJECT_ID=$(gcloud config get-value project)
PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")
EVENTARC_SERVICE_ACCOUNT=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com
TOPIC_NAME=$(gcloud pubsub topics describe alloydb-mgmt --format="value(name)")

gcloud eventarc triggers create alloydb-mgmt-fnc-trigger \
  --location=us-central1 \
  --destination-run-service=alloydb-mgmt-fnc \
  --destination-run-region=us-central1 \
  --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" \
  --transport-topic=${TOPIC_NAME} \
  --service-account=${EVENTARC_SERVICE_ACCOUNT}
```

As you probably remember, we deployed the function as a service that requires authentication. For Pub/Sub to deliver messages to it, the Pub/Sub service account needs permission to create authentication tokens, and that is exactly what we grant in the next step:

```bash
PROJECT_ID=$(gcloud config get-value project)
PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")
PUBSUB_SERVICE_ACCOUNT=service-${PROJECT_NUMBER}@gcp-sa-pubsub.iam.gserviceaccount.com
PUBSUB_ROLE="roles/iam.serviceAccountTokenCreator"

gcloud projects add-iam-policy-binding ${PROJECT_ID} --member="serviceAccount:${PUBSUB_SERVICE_ACCOUNT}" --role="${PUBSUB_ROLE}"
```

We are almost there and can already test our function by publishing a JSON message to the Pub/Sub topic. The message structure is simple: we provide the project, region, cluster name and operation. Here is an example:

```json
{"project":"your-project-id","region":"us-central1","cluster":"my-cluster","operation":"stop"}
```

You can publish it directly to the Pub/Sub topic. The Eventarc subscription then picks it up and triggers the function. Here is how to do it with gcloud (don't forget to replace the placeholder "your-project-id" with your real project ID):

```bash
topic_name=$(gcloud pubsub topics describe alloydb-mgmt --format="value(name)")
gcloud pubsub topics publish ${topic_name} --message='{"project":"your-project-id","region":"us-central1","cluster":"my-cluster","operation":"stop"}'
```
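On the receiving side, the function does not get this JSON as-is. Pub/Sub wraps it in a push envelope and base64-encodes it under `message.data`, which is why control_alloydb_cluster decodes it first. The sketch below is a simplified pair of hypothetical test helpers. It builds such an envelope locally and parses it the same way the function does, which can be handy for unit-testing the parsing logic without publishing anything. As an assumption for brevity, the envelope is trimmed to the one field main.py reads; real deliveries also carry fields such as `messageId` and `subscription`.

```python
import base64
import json
from typing import Union

REQUIRED_KEYS = ("project", "region", "cluster", "operation")


def make_envelope(message: dict) -> bytes:
    """Build a minimal Pub/Sub push-style body around a JSON message (test helper)."""
    data_b64 = base64.b64encode(json.dumps(message).encode("utf-8")).decode("ascii")
    return json.dumps({"message": {"data": data_b64}}).encode("utf-8")


def parse_envelope(body: Union[bytes, dict]) -> dict:
    """Decode an envelope the same way control_alloydb_cluster does."""
    # Accept either the raw request body or an already-parsed dict.
    payload = json.loads(body.decode("utf-8")) if isinstance(body, bytes) else body
    decoded = base64.b64decode(payload["message"]["data"]).decode("utf-8")
    message = json.loads(decoded)
    missing = [key for key in REQUIRED_KEYS if key not in message]
    if missing:
        raise KeyError(f"missing keys: {missing}")
    message["operation"] = message["operation"].lower()
    return message


body = make_envelope({"project": "your-project-id", "region": "us-central1",
                      "cluster": "my-cluster", "operation": "STOP"})
print(parse_envelope(body))
```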

Shortly after publishing the message, you can check the status of your cluster instances in the console and see that one of the read pool instances is being stopped. Remember that the stop operation starts with the read pools, stopping them one by one, and stops the primary only at the end. The start operation works in the reverse order, starting the primary first.
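The ordering described above can be sketched independently of the API calls. This simplified, hypothetical helper takes the `instances` list returned by the AlloyDB list call and returns instance names in the order main.py processes them. Unlike main.py, it skips the check for a missing primary.

```python
def ordered_for(operation: str, instances: list) -> list:
    """Return instance names in the order main.py processes them.

    stop: read pools first, primary last; start: primary first, then read pools.
    Instances of any other type are ignored, as in main.py.
    """
    primary = [i["name"] for i in instances if i.get("instanceType") == "PRIMARY"]
    pools = [i["name"] for i in instances if i.get("instanceType") == "READ_POOL"]
    if operation == "stop":
        return pools + primary
    if operation == "start":
        return primary + pools
    raise ValueError(f"unknown operation {operation!r}")


# Sample data shaped like the list response, with names shortened for readability.
sample = [
    {"name": "read-pool-1", "instanceType": "READ_POOL"},
    {"name": "my-cluster-primary", "instanceType": "PRIMARY"},
    {"name": "read-pool-2", "instanceType": "READ_POOL"},
]
print(ordered_for("stop", sample))
print(ordered_for("start", sample))
```

Stopping read pools before the primary avoids leaving read pools running against a stopped primary, and starting the primary first gives the read pools something to replicate from.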

The only thing left is to schedule our operations, which we can do with Cloud Scheduler. Let's schedule the stop operation to run at 9 PM New York time every night. Because the job uses the America/New_York time zone, that is 9 PM EDT in summer and 9 PM EST in winter.

```bash
TOPIC_NAME=$(gcloud pubsub topics describe alloydb-mgmt --format="value(name)")
CRON_SCHEDULE="0 21 * * *"

gcloud scheduler jobs create pubsub stop-my-cluster \
  --location=us-central1 \
  --schedule="${CRON_SCHEDULE}" \
  --time-zone="America/New_York" \
  --topic=${TOPIC_NAME} \
  --message-body='{"project":"your-project-id","region":"us-central1","cluster":"my-cluster","operation":"stop"}'
```

Now, every night at 9 PM New York time, Cloud Scheduler will publish the message to the topic. Eventarc will pick it up and pass it to the function, which will stop all instances in our cluster.

Using a similar approach, you can schedule AlloyDB to start all the instances every morning at 8 AM, just before you get your first cup of coffee and log in to your instance as a developer. Try it, test it, and let us know if you find any issues. I would also love to know whether you prefer examples that use the Google Cloud Console instead of the command line.
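If you add a start job alongside the stop job, it helps to generate both message bodies from one place so they stay consistent. Below is a hedged sketch of a hypothetical helper that produces a job name, a daily cron schedule and the message body for either operation. The job naming and the 8 AM start time are assumptions taken from the paragraph above. You would then pass the values to `gcloud scheduler jobs create pubsub` as in the stop example.

```python
import json


def scheduler_job(operation: str, hour: int, project: str,
                  region: str = "us-central1", cluster: str = "my-cluster") -> dict:
    """Build the pieces of a Cloud Scheduler Pub/Sub job for start or stop.

    Returns a hypothetical job name, a daily cron schedule at the given hour,
    and the compact JSON message body expected by control_alloydb_cluster.
    """
    if operation not in ("start", "stop"):
        raise ValueError("operation must be 'start' or 'stop'")
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    body = {"project": project, "region": region, "cluster": cluster, "operation": operation}
    return {
        "name": f"{operation}-{cluster}",
        # Cron fields: minute hour day-of-month month day-of-week
        "schedule": f"0 {hour} * * *",
        "message_body": json.dumps(body, separators=(",", ":")),
    }


for job in (scheduler_job("stop", 21, "your-project-id"),
            scheduler_job("start", 8, "your-project-id")):
    print(job["name"], job["schedule"], job["message_body"])
```

Both jobs would use the same `--time-zone="America/New_York"` setting, so the start and stop times follow the same local clock through daylight saving changes.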

Source Credit: https://medium.com/google-cloud/automating-alloydb-starts-and-stops-a794ca5a83c1?source=rss----e52cf94d98af---4