With most enterprise apps now running in containers, it’s no surprise that developers are embedding GenAI tools — like Gemini, GPT, or Claude — right into their native code. But what does this mean for the way we build and run these apps? And more importantly, how do we scale them responsibly with an eye on cost and security?

Kubernetes (K8s) has become the go-to platform to make all this work. It doesn’t just run containers: it orchestrates entire workflows, automates scaling, manages resources, and helps keep things secure. When it comes to GenAI in production, K8s is proving itself to be the best game in town.

- Handles Big Compute Easily: Need GPUs or TPUs? Kubernetes can schedule them as extended resources once the vendor’s device plugin is installed on your nodes.

- Repeatable and Portable: Whether you’re on AWS, GCP, Azure, or on-prem, your workloads behave the same.

- Supports AI Pipelines: Tools like Kubeflow, Argo Workflows, and Knative help you stitch together training and inference workflows.

- Vendor-Neutral: Run your GenAI anywhere. No lock-in.
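To make the "big compute" point concrete, here is a minimal sketch of how a workload might ask for a GPU. It builds a Pod manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name and image are hypothetical, and the `nvidia.com/gpu` resource name assumes the NVIDIA device plugin is installed on the cluster; other accelerators use different resource names.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests GPUs as an extended resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,  # hypothetical image, for illustration only
                    "resources": {
                        # GPUs are requested under limits; the resource name
                        # assumes the NVIDIA device plugin is running.
                        "limits": {"nvidia.com/gpu": gpus},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("llm-inference", "example.com/llm-server:latest")
    print(json.dumps(manifest, indent=2))
```

Piping the output into `kubectl apply -f -` would submit the Pod, and the scheduler would then place it only on a node that advertises a free GPU.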

When GenAI becomes part of your core app logic, things get interesting:

- GenAI as a Service Layer: Think of LLMs as smart APIs. You call them like any other service, but they come with context windows, latency concerns, and cost implications.

- More Event-Driven Workflows: You’re not just processing data anymore — you’re generating responses, triggering actions, and rerouting flows based on AI outputs.

- You’ll Need to Track State: LLMs work better with context. Now you need to store and retrieve conversation history or embeddings.
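The state-tracking point can be sketched in a few lines. The example below keeps a conversation history and returns only the most recent messages that fit a context budget. Everything here is an assumption for illustration: the `Conversation` class, the budget value, and especially `estimate_tokens`, which counts whitespace-separated words as a crude stand-in for a real tokenizer.

```python
from dataclasses import dataclass, field


def estimate_tokens(text: str) -> int:
    """Crude token estimate (word count); a real tokenizer would differ."""
    return len(text.split())


@dataclass
class Conversation:
    max_tokens: int = 50  # hypothetical context budget
    messages: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def window(self) -> list:
        """Return the newest messages that fit in the budget, oldest first."""
        kept, used = [], 0
        for msg in reversed(self.messages):
            cost = estimate_tokens(msg["content"])
            if used + cost > self.max_tokens:
                break  # older messages no longer fit
            kept.append(msg)
            used += cost
        return list(reversed(kept))


if __name__ == "__main__":
    chat = Conversation(max_tokens=5)
    chat.add("user", "one two three")
    chat.add("assistant", "four five")
    chat.add("user", "six seven eight")
    for msg in chat.window():
        print(msg["role"], "->", msg["content"])
```

In a real deployment the history would live in an external store (a database or cache) rather than in process memory, so that any replica of the service can pick up the conversation.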

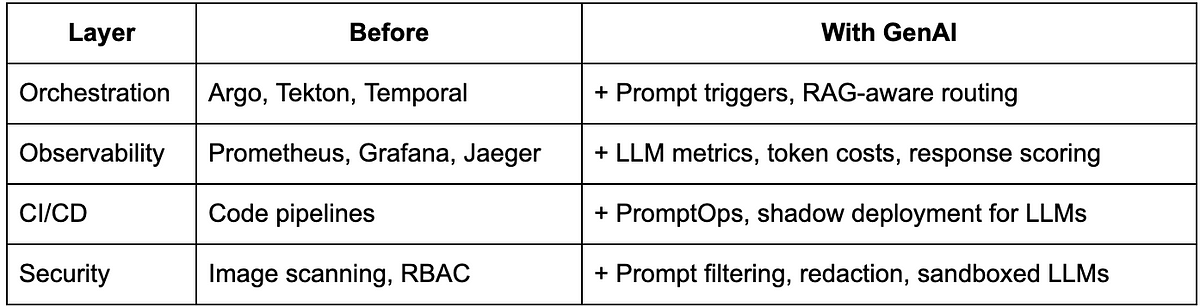

MLDevOps (or MLOps, if you prefer) is all about treating your AI components like first-class citizens in your DevOps lifecycle. Here’s how it evolves:

- PromptOps is Real: Prompts change. Track them. Test them. Deploy them.

- Observability Gets AI-Specific: Now you’re monitoring token usage, latency, hallucinations, and prompt success rates.

- Security is More Than Firewalls: You need runtime protection, prompt injection defense, and maybe even LLM sandboxes.

- CI/CD Includes AI: Model and prompt updates should flow through your pipelines just like code.
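As a rough illustration of AI-specific observability, the sketch below wraps any LLM call so that each invocation records latency, approximate token counts, and failures. The names `LLMMetrics` and `instrument` are hypothetical, the token counts are word counts rather than real tokenizer output, and a production setup would export these numbers to a metrics backend instead of holding them in memory.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LLMMetrics:
    calls: int = 0
    failures: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0
    latencies_s: list = field(default_factory=list)


def instrument(llm: Callable[[str], str], metrics: LLMMetrics) -> Callable[[str], str]:
    """Wrap an LLM callable so every call updates the shared metrics."""

    def wrapped(prompt: str) -> str:
        start = time.perf_counter()
        metrics.calls += 1
        try:
            reply = llm(prompt)
        except Exception:
            metrics.failures += 1
            raise
        finally:
            # Latency is recorded for successful and failed calls alike.
            metrics.latencies_s.append(time.perf_counter() - start)
        # Word counts approximate token usage (an assumption, not a tokenizer).
        metrics.prompt_tokens += len(prompt.split())
        metrics.completion_tokens += len(reply.split())
        return reply

    return wrapped


if __name__ == "__main__":
    metrics = LLMMetrics()
    fake_llm = instrument(lambda prompt: "echo: " + prompt, metrics)  # stand-in model
    fake_llm("summarize this ticket")
    print(metrics.calls, metrics.prompt_tokens, metrics.completion_tokens)
```

Because the wrapper only needs a function from prompt to reply, the same pattern applies whether the model sits behind a hosted API or runs in a pod next to your app.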

MLDevOps Workflow:

Git (Code + Prompts) ─▶ CI Pipeline ─▶ Container Build ─▶ Kubernetes

└─▶ Prompt Registry

└─▶ Canary Testing (Prompt Versions)

Monitoring Stack ─▶ Logs, Metrics, Traces, LLM Output Feedback
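The prompt registry and canary steps in this workflow could look something like the sketch below. It is one possible design, not a standard tool: `PromptRegistry` and `pick_version` are hypothetical names, versions are identified by a short hash of the prompt text, and canary traffic is split by hashing a user ID so that each user consistently sees the same prompt version.

```python
import hashlib


class PromptRegistry:
    """In-memory registry that versions prompts by content hash."""

    def __init__(self) -> None:
        self._versions = {}  # prompt name -> list of (version_id, template)

    def register(self, name: str, template: str) -> str:
        version_id = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        versions = self._versions.setdefault(name, [])
        if all(v != version_id for v, _ in versions):
            versions.append((version_id, template))
        return version_id

    def get(self, name: str, version_id: str) -> str:
        for v, template in self._versions.get(name, []):
            if v == version_id:
                return template
        raise KeyError(f"{name}@{version_id} not found")


def pick_version(user_id: str, stable: str, canary: str, canary_percent: int) -> str:
    """Deterministically route a share of users to the canary version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return canary if bucket < canary_percent else stable


if __name__ == "__main__":
    registry = PromptRegistry()
    v1 = registry.register("summarize", "Summarize the text: {text}")
    v2 = registry.register("summarize", "Summarize in three bullets: {text}")
    chosen = pick_version("user-42", stable=v1, canary=v2, canary_percent=10)
    print(registry.get("summarize", chosen).format(text="..."))
```

Hashing the prompt text means an unchanged prompt keeps its version ID across CI runs, and hashing the user ID keeps the canary split stable without storing any assignment table.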

GenAI is not just another library — it fundamentally changes how your apps behave. Kubernetes, with its extensibility and automation, makes this transformation possible at scale. But with great power comes great complexity, and that’s where MLDevOps shines: giving you the tools and practices to iterate, monitor, and secure your AI-powered applications.

Source Credit: https://medium.com/google-cloud/orchestrating-the-future-how-kubernetes-powers-genai-in-the-age-of-mldevops-a4549712e47d?source=rss----e52cf94d98af---4