Building Real-Time AI-Powered Video Stream Analysis with Google’s Live Stream API and Gemini 2.0 Flash Live

A deep dive into creating a blazingly fast security monitoring system that turns any camera feed into an intelligent guardian

The Challenge: Real-Time Intelligence at the Edge

Picture this: you need to monitor a space for security threats in real time. Traditional motion detection systems are plagued by false positives from shadows, pets, or swaying trees. What if, instead of relying on simple pixel changes, we could leverage Google’s multimodal AI to understand what’s actually happening in each frame?

That’s exactly what I built: a real-time security system that combines the Google Cloud Live Stream API for low-latency video processing with Gemini 2.0 Flash Live Preview for intelligent frame analysis. The result? A system that can detect people, faces, and gestures, and even respond to spoken keywords, with minimal latency.

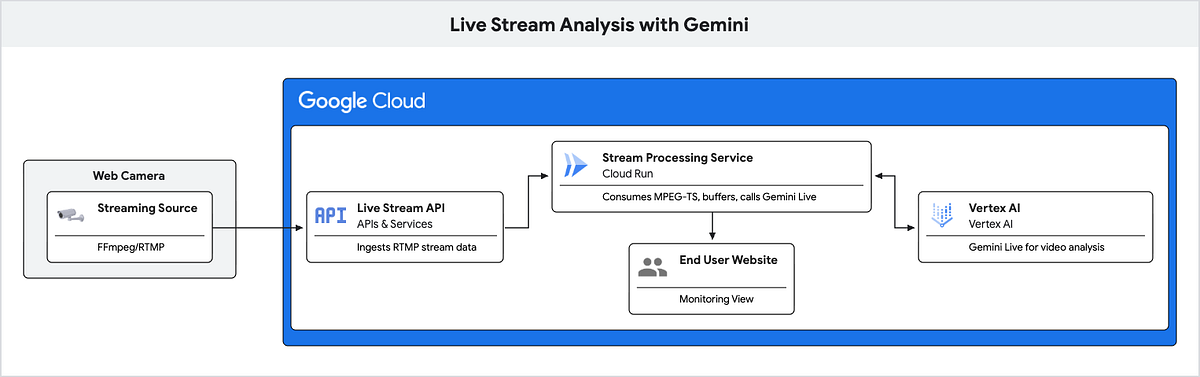

Architecture Overview: The Data Pipeline

Key Components: The GCP Power Trio

This system runs on three core Google Cloud services that handle the entire pipeline:

- Google Cloud Live Stream API: This service does the heavy lifting of video ingestion. We push our camera feed to it, and it transcodes the feed into a reliable web stream (HLS). The API accepts RTMP and SRT push inputs, so an RTSP camera needs a relay (FFmpeg, for example) in front of it. This saves us from having to build and manage our own complex media server.

- Gemini Live in Vertex AI: This is the AI “brain.” It provides a live, streaming connection where we send our video frames along with text prompts (like “count the people”). It analyzes the frames in real time and sends back structured JSON answers that follow the prompt’s instructions.

- Cloud Run: This is the serverless service that runs our backend Go code. It connects everything: it pulls frames from the Live Stream, sends them to Gemini, gets the AI results, and pushes the data to the web UI via WebSockets. We don’t have to manage any servers, and it scales up or down automatically.

The stream processing service we developed operates on several key principles:

- Frame Throttling: Capture at 30fps but analyze at 2–3fps to prevent overwhelming the AI model

- Correlation: Each frame gets a unique ID that’s tracked through the entire pipeline

- Backfilling: When AI analysis completes, we apply results to subsequent frames until new analysis arrives
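
The throttling and correlation principles above can be sketched as follows. This is a minimal illustration rather than the project's actual code: the `Frame` and `Throttle` types, `NewThrottle`, and `countForwarded` are hypothetical names, and the 2.5fps analysis rate is simply a value inside the 2–3fps range mentioned above.

```go
package main

import (
	"fmt"
	"time"
)

// Frame is a captured image plus the correlation ID that follows it
// through capture, analysis, and display. (Hypothetical type.)
type Frame struct {
	ID   uint64
	JPEG []byte
	At   time.Time
}

// Throttle lets at most one frame through per interval.
type Throttle struct {
	interval time.Duration
	lastSent time.Time
}

// NewThrottle builds a throttle for the given analysis rate in frames per second.
func NewThrottle(analysisFPS float64) *Throttle {
	return &Throttle{interval: time.Duration(float64(time.Second) / analysisFPS)}
}

// Allow reports whether the frame should be forwarded to the model.
// The first frame always passes; later frames pass once interval has elapsed.
func (t *Throttle) Allow(f Frame) bool {
	if !t.lastSent.IsZero() && f.At.Sub(t.lastSent) < t.interval {
		return false
	}
	t.lastSent = f.At
	return true
}

// countForwarded simulates a capture run with synthetic timestamps and
// returns how many frames the throttle would forward for analysis.
func countForwarded(captureFPS, frames int, analysisFPS float64) int {
	th := NewThrottle(analysisFPS)
	start := time.Now()
	sent := 0
	for i := 0; i < frames; i++ {
		at := start.Add(time.Duration(i) * time.Second / time.Duration(captureFPS))
		f := Frame{ID: uint64(i + 1), At: at} // IDs increase monotonically
		if th.Allow(f) {
			sent++
		}
	}
	return sent
}

func main() {
	// One second of 30fps capture, analyzed at roughly 2.5fps.
	fmt.Printf("forwarded %d of 30 frames\n", countForwarded(30, 30, 2.5))
}
```

Throttling on timestamps rather than on "every Nth frame" keeps the analysis rate steady even if the capture rate drifts.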

The Power of Prompt-Only Detection

Traditional security systems are limited to what they were trained to detect. Want to detect “people wearing red shirts”? You need a custom dataset. “Cats on kitchen counters”? Another training cycle. “Smoke or fire in server rooms”? Yet another specialized model.

With Gemini Live, you simply change the prompt:

// Detect people - no training required
prompt := "Count people in the frame. Return JSON with people_found (boolean) and count (integer)."

// Detect specific clothing - just change the prompt
// (plain assignment from here on: prompt is already declared)
prompt = "Count people wearing red clothing. Return JSON with red_clothing_found (boolean) and count (integer)."

// Detect pets in specific locations - again, just a prompt change
prompt = "Detect cats on kitchen surfaces or counters. Return JSON with cats_on_counter (boolean) and description (string)."

// Detect safety hazards - no specialized training
prompt = "Look for smoke, fire, sparks, or other safety hazards. Return JSON with hazard_detected (boolean), type (string), and urgency_level (1-10)."

// Detect suspicious behavior - pure natural language
prompt = "Analyze if anyone appears to be acting suspiciously, looking around nervously, or attempting to hide something. Return JSON with suspicious_behavior (boolean) and description (string)."

Real-World Prompt Examples from the System

The system comes with a configurable prompt that can be updated in real-time through the web interface:

// Default finger counting prompt
var taskPrompt = "Detect and count the number of fingers shown by the main hand in the frame. Respond ONLY with a single JSON object containing: frame_id (number), fingers_found (boolean), count (integer)."

// But you can change it to anything:
// "Detect if anyone is using a cell phone or mobile device"
// "Count the number of face masks being worn properly"
// "Look for unattended bags or packages"
// "Detect if anyone appears to be falling or in distress"
// "Count vehicles in the parking area"
// "Identify if doors or windows are open when they should be closed"
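
Because the prompt can change while frames are still being analyzed, the capture loop and the web handler need a safe way to share it. The sketch below shows one way to do that with a read-write mutex. `PromptStore` and its methods are hypothetical names, and this is not necessarily how the project stores its prompt.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// PromptStore holds the current task prompt and is safe for concurrent use:
// the web handler calls Set while the analysis loop calls Get.
type PromptStore struct {
	mu     sync.RWMutex
	prompt string
}

// NewPromptStore returns a store seeded with the default prompt.
func NewPromptStore(initial string) *PromptStore {
	return &PromptStore{prompt: strings.TrimSpace(initial)}
}

// Set replaces the prompt and reports whether it changed anything.
// Blank input is ignored so an empty form submit cannot clear the task.
func (p *PromptStore) Set(s string) bool {
	s = strings.TrimSpace(s)
	if s == "" {
		return false
	}
	p.mu.Lock()
	p.prompt = s
	p.mu.Unlock()
	return true
}

// Get returns the prompt to attach to the next analyzed frame.
func (p *PromptStore) Get() string {
	p.mu.RLock()
	defer p.mu.RUnlock()
	return p.prompt
}

func main() {
	store := NewPromptStore("Count people in the frame.")
	store.Set("Look for unattended bags or packages.")
	fmt.Println(store.Get())
}
```

Reading the prompt once per analyzed frame means a change takes effect on the next frame sent to the model, with no restart.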

Gemini Live Integration with Frame Correlation

The integration with Gemini Live sends each frame together with the current prompt and tags it with its frame ID, so every response can be matched back to the frame it describes:

// Requires: fmt, log, strings, and google.golang.org/genai

// Send the frame to the model, tagged with its unique ID
msg := fmt.Sprintf("frame_id=%d; %s", it.ID, strings.TrimSpace(task))
err := session.SendRealtimeInput(genai.LiveRealtimeInput{
	Media: &genai.Blob{
		MIMEType: "image/jpeg",
		Data:     it.JPEG,
	},
	Text: msg,
})
if err != nil {
	log.Printf("send frame %d: %v", it.ID, err)
}

// The prompt asks the model to echo frame_id in its structured JSON reply,
// so the response format stays consistent no matter what you're detecting
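
On the receiving side, the reply has to be decoded and matched back to a frame. The sketch below is one defensive way to do that, assuming the model's text reply contains a single JSON object that may be surrounded by extra text. `parseModelReply` is a hypothetical helper, not a function from the project or from the genai SDK.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// parseModelReply pulls the outermost {...} span out of the model's text
// reply, decodes it, and returns the frame_id along with all decoded fields.
func parseModelReply(text string) (uint64, map[string]any, error) {
	start := strings.Index(text, "{")
	end := strings.LastIndex(text, "}")
	if start < 0 || end < start {
		return 0, nil, errors.New("no JSON object in model reply")
	}
	var obj map[string]any
	if err := json.Unmarshal([]byte(text[start:end+1]), &obj); err != nil {
		return 0, nil, fmt.Errorf("decode model reply: %w", err)
	}
	// encoding/json decodes JSON numbers into float64 for map[string]any.
	id, ok := obj["frame_id"].(float64)
	if !ok || id < 0 {
		return 0, nil, errors.New("reply has no usable frame_id")
	}
	return uint64(id), obj, nil
}

func main() {
	reply := `Sure: {"frame_id": 42, "fingers_found": true, "count": 3}`
	id, fields, err := parseModelReply(reply)
	if err != nil {
		fmt.Println("skip reply:", err)
		return
	}
	fmt.Println(id, fields["count"])
}
```

Replies that fail to parse are best dropped rather than guessed at; the previous result keeps being shown until a valid one arrives.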

This approach is a big shift for real-time video processing because:

- No Training Required: Deploy new detection capabilities in seconds

- Broad Detection Coverage: If you can describe it in a prompt, Gemini can attempt to detect it, though accuracy will vary by task

- Context-Aware: The AI understands complex scenarios, not just object classification

- Natural Language: Security rules written in plain English, not code

- Live Updates: Change detection parameters without service interruption

Smart Buffering and Backfill Strategy

One of the trickiest parts was handling the asynchronous nature of AI responses. Frames arrive every 33ms (30fps), but AI analysis might take 200–500ms, so roughly 6 to 15 new frames arrive while each analysis is in flight. The solution? A backfill system:

func buildBackfillJSON(frameID uint64) string {
	obj := map[string]any{}
	if last := getLastResp(); last != nil {
		// Reuse the last response's fields, copying them so the shared
		// map returned by getLastResp is never mutated
		for k, v := range last {
			obj[k] = v
		}
	}
	// Stamp the frame being backfilled; with no prior response this
	// leaves the minimal object {"frame_id": N}
	obj["frame_id"] = frameID
	b, err := json.MarshalIndent(obj, "", " ")
	if err != nil {
		return fmt.Sprintf(`{"frame_id": %d}`, frameID)
	}
	return string(b)
}

When a new AI response arrives, we backfill up to 150 subsequent frames (5 seconds of video at 30fps) so the UI shows consistent captions even during analysis gaps:

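const maxBackfill = 150

// Fill the frames after the analyzed one, stopping at the newest
// captured frame or at the backfill cap, whichever comes first
endID := latestID
if endID > parsedID+maxBackfill {
	endID = parsedID + maxBackfill
}
for id := parsedID + 1; id <= endID; id++ {
	// Frames that have already left the buffer are skipped
	if it2, ok := internal.GetFrameByID(id, &mu, &framesBuf); ok {
		desc := buildBackfillJSON(it2.ID)
		internal.MemUpdateCaption(it2.ID, desc)
	}
}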
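
To make the clamping logic easy to check in isolation, here is a self-contained sketch of the range calculation. `backfillRange` is a hypothetical helper written for this illustration; it mirrors the loop bounds above but compares with a subtraction so the arithmetic cannot wrap around for very large IDs.

```go
package main

import "fmt"

// backfillRange returns the inclusive range of frame IDs that should inherit
// the result for parsedID, capped at maxBackfill frames. ok is false when no
// frame newer than parsedID exists yet.
func backfillRange(parsedID, latestID, maxBackfill uint64) (first, last uint64, ok bool) {
	if latestID <= parsedID || maxBackfill == 0 {
		return 0, 0, false
	}
	last = latestID
	if last-parsedID > maxBackfill {
		last = parsedID + maxBackfill
	}
	return parsedID + 1, last, true
}

func main() {
	fmt.Println(backfillRange(100, 110, 150)) // 101 110 true
	fmt.Println(backfillRange(100, 400, 150)) // 101 250 true
	fmt.Println(backfillRange(100, 100, 150)) // 0 0 false
}
```

The cap matters when the model stalls: without it, a single stale result would be stamped onto every frame captured since, however old it is.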

Real-Time WebSocket Streaming with Zero-Copy Performance

The web UI receives frames via WebSocket with a carefully optimized protocol that separates binary JPEG data from JSON metadata:

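func (h *WSHub) broadcastNewFrames(useDisk bool, capturesDir string) {
	// The lookup of the newest frame (frame, jpegData) is omitted in this excerpt
	for client := range h.clients {
		// One goroutine per client so a slow viewer cannot stall the others.
		// Note: if this is gorilla/websocket, each connection allows only one
		// concurrent writer, so broadcasts to the same client must not overlap
		go func(c *WSClient) {
			// Send binary JPEG immediately
			c.conn.SetWriteDeadline(time.Now().Add(5 * time.Second))
			if err := c.conn.WriteMessage(websocket.BinaryMessage, jpegData); err != nil {
				return
			}
			// Follow with metadata
			message := WSMessage{
				Type: "frame_meta",
				Data: map[string]interface{}{
					"id":      frame.ID,
					"time":    frame.Time,
					"caption": frame.Caption,
				},
			}
			if err := c.conn.WriteJSON(message); err != nil {
				log.Printf("ws: send frame_meta %d: %v", frame.ID, err)
			}
		}(client)
	}
}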

The client runs at 60fps (16ms intervals) to minimize display latency, while the broadcast system processes new frames as they arrive.
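
One alternative design for slow viewers is a small bounded outbox per client, drained by a single writer goroutine that sends the JPEG and then its metadata. The sketch below shows only the queueing part, using the standard library. The `outMsg` and `client` types and the `offer` method are hypothetical and are not taken from the project; the code also assumes a single producer per client.

```go
package main

import "fmt"

// outMsg pairs a frame's JPEG bytes with its metadata so a writer can send
// them back to back, in order.
type outMsg struct {
	ID      uint64
	JPEG    []byte
	Caption string
}

// client owns a bounded outbox. In a real server, one goroutine per client
// would drain it and perform all writes on that client's connection.
type client struct {
	outbox chan outMsg
}

func newClient(depth int) *client {
	return &client{outbox: make(chan outMsg, depth)}
}

// offer enqueues m without blocking. When the outbox is full it discards the
// oldest queued frame to make room, and reports whether anything was dropped.
func (c *client) offer(m outMsg) (dropped bool) {
	for {
		select {
		case c.outbox <- m:
			return dropped
		default:
			// Outbox full: evict the oldest frame, then retry the send.
			select {
			case <-c.outbox:
				dropped = true
			default:
			}
		}
	}
}

func main() {
	c := newClient(2)
	for id := uint64(1); id <= 3; id++ {
		if c.offer(outMsg{ID: id}) {
			fmt.Println("dropped an old frame to make room for", id)
		}
	}
	fmt.Println("next to send:", (<-c.outbox).ID)
}
```

For live video, dropping the oldest queued frame is usually preferable to blocking, since a viewer gains nothing from frames that are already out of date.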

Results Website

The results website provides a modern, real-time dashboard for monitoring live video streams analyzed by Gemini AI. The interface features a sleek, dark-themed design optimized for clarity and usability.

Key features include:

- Live Video Player: Displays the current frame from the stream with overlays for camera labels and live status indicators.

- Intelligent Captions: AI-generated captions summarize detected objects and activities, updating in sync with the video.

- Task Control Panel: Easily switch between detection presets or define custom tasks for Gemini AI, with instant feedback on new frames.

- Dynamic Prompt: Ability to change prompts and tasks dynamically in the web UI.


Conclusion: AI-Powered Security is Here

This project demonstrates how modern AI APIs can be integrated into real-time systems with careful attention to latency and performance. By combining Google’s Live Stream API with Gemini 2.0 Flash Live, we’ve created a security system that goes beyond detecting pixel changes: it interprets what is actually happening in the scene.

The complete source code is available on GitHub, and the system can be deployed in minutes with just a few environment variables. Whether you’re securing a retail space, monitoring a data center, or just want to know when your cat is on the kitchen counter, this AI-powered approach opens up possibilities that simple motion detection could never achieve.

The future of security isn’t just about detecting movement — it’s about understanding what that movement means.

Source Credit: https://medium.com/google-cloud/hi-gemini-secure-the-room-01903d5541f6?source=rss----e52cf94d98af---4