A real-world case study on how a simple change in data storage format led to a 25% cost reduction and a roughly 50% improvement in read latency.

In the world of big data, the choices we make in our architecture can have cascading effects on performance, scalability, and cost. For teams running large-scale data processing pipelines, these decisions are critical.

Recently, a large technology company undertook an investigation to optimize its Dataflow pipelines that write to and read from Bigtable. The core questions were:

- What is the most efficient way to store our data in Bigtable?

- Should we store data in granular, individual columns, or as a single, compressed binary object?

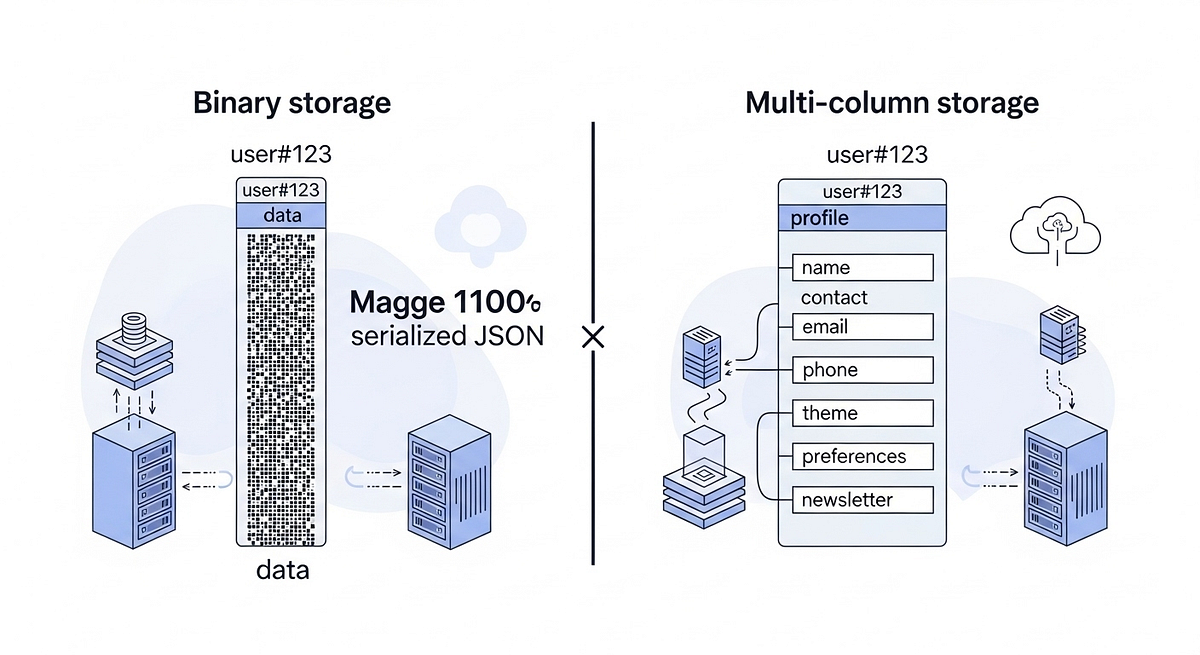

The team decided to test two approaches:

- Multi-Column Storage: Storing each attribute of a record in a separate Bigtable cell.

- Bytes Storage: Serializing the entire record into bytes and storing it in a single Bigtable cell.
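
To make the two layouts concrete, here is a minimal sketch in plain Java that models a row as a list of cells. It does not use the Bigtable client. Several parts are illustrative assumptions: the `Cell` class, the attribute names, the `payload` qualifier, and the size model. That model counts qualifier bytes, plus an 8-byte timestamp, plus value bytes for each cell. Bigtable's real storage also involves the row key and column family and applies compression, so this sketch does not reproduce the measured 25% saving. It only shows that the multi-column layout repeats per-cell metadata for every attribute, while the bytes layout pays that cost once.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class StorageLayouts {
    // Hypothetical cell model: a column qualifier, a value, and a timestamp.
    static final class Cell {
        final String qualifier;
        final byte[] value;
        final long timestampMicros;

        Cell(String qualifier, byte[] value, long timestampMicros) {
            this.qualifier = qualifier;
            this.value = value;
            this.timestampMicros = timestampMicros;
        }

        // Simplified footprint: qualifier bytes + 8-byte timestamp + value bytes.
        int naiveSize() {
            return qualifier.getBytes(StandardCharsets.UTF_8).length + Long.BYTES + value.length;
        }
    }

    // Multi-column layout: one cell per attribute, qualifier = attribute name.
    static List<Cell> multiColumn(Map<String, String> attrs, long ts) {
        List<Cell> cells = new ArrayList<>();
        for (Map.Entry<String, String> attr : attrs.entrySet()) {
            cells.add(new Cell(attr.getKey(), attr.getValue().getBytes(StandardCharsets.UTF_8), ts));
        }
        return cells;
    }

    // Bytes layout: all values serialized, in the map's iteration order, into one cell.
    // DataOutputStream stands in for a real serializer such as Avro.
    static List<Cell> singleBytes(Map<String, String> attrs, long ts) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            for (String value : attrs.values()) {
                out.writeUTF(value); // 2-byte length prefix + modified UTF-8 bytes
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return List.of(new Cell("payload", buf.toByteArray(), ts));
    }

    static int totalSize(List<Cell> cells) {
        return cells.stream().mapToInt(Cell::naiveSize).sum();
    }

    public static void main(String[] args) {
        Map<String, String> attrs = new LinkedHashMap<>();
        attrs.put("object_key", "obj-123");
        attrs.put("status", "ACTIVE");
        attrs.put("region", "us-east1");
        long ts = 1_700_000_000_000_000L;
        List<Cell> multi = multiColumn(attrs, ts);
        List<Cell> bytes = singleBytes(attrs, ts);
        System.out.println("multi-column: cells=" + multi.size() + ", bytes=" + totalSize(multi));
        System.out.println("single bytes: cells=" + bytes.size() + ", bytes=" + totalSize(bytes));
    }
}
```

With the sample record and this simplified model, the multi-column layout produces 3 cells totaling 67 bytes. The bytes layout produces 1 cell totaling 42 bytes.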

The Background: The Quest for Efficiency

The team was managing high-throughput Dataflow jobs that process and store massive volumes of data in Bigtable. As the system scaled, two major concerns came into focus:

- Latency: Ensuring that both writing data to and reading data from Bigtable were as fast as possible.

- Cost: Bigtable costs are influenced by storage volume and the number of nodes. Reducing the data footprint could lead to significant savings.

The hypothesis was that changing the storage format from multiple columns to a single bytes record could yield substantial benefits. It was time to put it to the test.

The Findings: Clear Wins for Binary Storage

The performance analysis focused on four key metrics: Bigtable storage (cluster/storage_utilization), read latency and write latency [1], and CPU utilization (cluster/cpu_load). The comparison between the multi-column approach and the binary approach revealed a clear winner for this use case.

1. Storage and Cost: A 25% Reduction

The team observed that the binary data compresses more effectively.

The result: a 25% reduction in the storage space required.

Bigtable pricing is tied to storage and the number of nodes. Because the storage footprint shrank by a quarter, the cluster needed 25% fewer Bigtable nodes, which led to substantial cost savings.
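
The node reduction follows from simple arithmetic when the cluster is sized by storage rather than by CPU. In that case, the minimum node count is the stored data divided by the per-node storage capacity, rounded up. The sketch below illustrates this under that assumption. The 5 TB per-node capacity and the 100 TB footprint are hypothetical inputs, not figures from the investigation, so check the current Bigtable limits for your storage type.

```java
final class NodeEstimate {
    // Minimum nodes for a storage-bound cluster: ceil(storage / per-node capacity).
    static long nodesForStorage(double storageTb, double tbPerNode) {
        return (long) Math.ceil(storageTb / tbPerNode);
    }

    public static void main(String[] args) {
        double tbPerNode = 5.0;        // assumed per-node storage capacity (hypothetical)
        double before = 100.0;         // hypothetical multi-column footprint in TB
        double after = before * 0.75;  // 25% smaller with the bytes format
        long nodesBefore = nodesForStorage(before, tbPerNode);
        long nodesAfter = nodesForStorage(after, tbPerNode);
        System.out.printf("%d -> %d nodes (%.0f%% fewer)%n",
            nodesBefore, nodesAfter, 100.0 * (nodesBefore - nodesAfter) / nodesBefore);
    }
}
```

With these inputs it prints `20 -> 15 nodes (25% fewer)`. Because the count is rounded up, the node saving can differ somewhat from the storage saving on small clusters. A cluster whose node count is driven by CPU load would not shrink from a storage reduction alone.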

2. Latency: Up to 50% Faster

Performance tests showed dramatic improvements in read and write latency when using the bytes format.

- Read Latency (p99): ~50% lower. Fetching a single binary object is much faster than retrieving multiple cells to reconstruct a record.

Read latency metric (MQL query; the instance and project ID are left blank for you to fill in):

    resource bigtable_table
    | metric bigtable.googleapis.com/server/latencies
    | (cluster !~ "unspecified")
    | (resource.instance == "")
    | (resource.project_id == "")
    | (metric.method =~ ".*(ReadRows)")
    | group_by [resource.instance, resource.cluster, resource.table],
        [value_latencies_percentile: percentile(value.latencies, 99)]

- Write Latency (p99): ~40% lower. Writing a single cell is a more efficient operation for Bigtable than mutating a row with many individual columns.

Write latency metric (MQL query; the instance and project ID are left blank for you to fill in):

    resource bigtable_table
    | metric bigtable.googleapis.com/server/latencies
    | (cluster !~ "unspecified")
    | (resource.instance == "")
    | (resource.project_id == "")
    | (metric.method =~ ".*(CheckAndMutateRow|MutateRow|MutateRows|ReadModifyWriteRow)")
    | group_by [resource.instance, resource.cluster, resource.table],
        [value_latencies_percentile: percentile(value.latencies, 99)]
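
The queries above report p99 latency per table, and the percentages in this section compare those p99 values before and after the change. The sketch below shows that arithmetic on raw samples using the nearest-rank percentile. This is a simplification: Cloud Monitoring estimates percentiles from the buckets of the `server/latencies` distribution, so its values can differ slightly. The sample data is synthetic.

```java
import java.util.Arrays;

final class LatencyComparison {
    // Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
    static double percentile(double[] samples, double p) {
        if (samples.length == 0 || p <= 0 || p > 100) {
            throw new IllegalArgumentException("need samples and 0 < p <= 100");
        }
        double[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0); // 1-based rank
        return sorted[rank - 1];
    }

    // Fractional reduction: 0.5 means the new value is 50% lower than the old one.
    static double reduction(double before, double after) {
        return (before - after) / before;
    }

    public static void main(String[] args) {
        double[] before = new double[100];
        double[] after = new double[100];
        for (int i = 0; i < 100; i++) {
            before[i] = i + 1;        // synthetic latencies: 1..100 ms
            after[i] = (i + 1) / 2.0; // synthetic: every request twice as fast
        }
        double p99Before = percentile(before, 99);
        double p99After = percentile(after, 99);
        System.out.printf("p99 %.1f ms -> %.1f ms (%.0f%% lower)%n",
            p99Before, p99After, 100 * reduction(p99Before, p99After));
    }
}
```

On this synthetic data it prints `p99 99.0 ms -> 49.5 ms (50% lower)`.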

3. Flexibility: The Trade-Off

- Query Flexibility: The primary drawback of the binary approach is the loss of server-side filtering. When data is stored as a binary blob, you cannot query or retrieve individual attributes from a record directly within Bigtable. The entire object must be read and deserialized by the client (in this case, the Dataflow job).
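
The flexibility cost is easiest to see side by side. This plain-Java sketch does not use the Bigtable client. It models a multi-column row as a map from qualifier to value, so one attribute can be read on its own, much as a column qualifier filter lets Bigtable return only the matching cell. The binary row is a single blob, so the client has to decode the whole record to get one field. The toy encoding writes a field count followed by name/value pairs with `DataOutputStream`. It stands in for Avro and is an assumption for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

final class AttributeAccess {
    // Multi-column: look up a single qualifier; no other cells are touched.
    static String readColumn(Map<String, byte[]> row, String qualifier) {
        byte[] value = row.get(qualifier);
        return value == null ? null : new String(value, StandardCharsets.UTF_8);
    }

    // Binary: serialize the whole record as a count followed by name/value pairs.
    static byte[] encode(Map<String, String> record) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.writeInt(record.size());
            for (Map.Entry<String, String> field : record.entrySet()) {
                out.writeUTF(field.getKey());
                out.writeUTF(field.getValue());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buf.toByteArray();
    }

    // Binary: the whole blob must be decoded before any single field is available.
    static String readFromBlob(byte[] blob, String name) {
        Map<String, String> decoded = new LinkedHashMap<>();
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(blob))) {
            int fieldCount = in.readInt();
            for (int i = 0; i < fieldCount; i++) {
                String key = in.readUTF();
                String value = in.readUTF();
                decoded.put(key, value);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return decoded.get(name);
    }

    public static void main(String[] args) {
        Map<String, String> record = new LinkedHashMap<>();
        record.put("status", "ACTIVE");
        record.put("region", "us-east1");
        Map<String, byte[]> row = new LinkedHashMap<>();
        record.forEach((k, v) -> row.put(k, v.getBytes(StandardCharsets.UTF_8)));
        System.out.println(readColumn(row, "region"));              // reads one cell
        System.out.println(readFromBlob(encode(record), "region")); // decodes everything
    }
}
```

Both calls return `us-east1`. The difference is how much data each path has to fetch and decode to get there.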

Lessons Learned: When to Use Binary vs. Multi-Column

This investigation provides a clear framework for deciding which approach is right for your use case.

Choose Binary Storage when:

- Your application typically needs to read the entire record at once.

- Low latency and high throughput are top priorities.

- Cost reduction through better data compression is a key objective.

- You do not need to query or filter on individual record attributes within Bigtable.

Choose Multi-Column Storage when:

- You need the flexibility to read or update specific attributes of a record without fetching the entire object.

- You want to use Bigtable’s server-side filters on different columns to narrow down query results.

- Your data schema changes frequently, and you want to add or remove columns without re-deploying serialization logic.

Code for writing bytes to Bigtable

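    // Imports assumed by this snippet (Avro, the Bigtable protobuf types used by
    // Beam's BigtableIO, and an SLF4J logger for LOG):
    import com.google.bigtable.v2.Mutation;
    import com.google.protobuf.ByteString;
    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.util.Collections;
    import java.util.List;
    import org.apache.avro.io.BinaryEncoder;
    import org.apache.avro.io.EncoderFactory;
    import org.apache.avro.specific.SpecificDatumWriter;

    // The method below belongs to a class that defines LOG, columnFamily, and
    // BYTES_COLUMN_QUALIFIER_NAME. ObjectRecord is the Avro-generated record class.

    /**
     * Serializes the Avro record and creates the Bigtable mutation for it.
     *
     * @param record The Avro record to serialize.
     * @param timestampMicros The timestamp for the Bigtable cell (in microseconds);
     *     it must match the table's timestamp granularity.
     * @return A list containing a single mutation, or an empty list if serialization fails.
     */
    private List<Mutation> createMutations(ObjectRecord record, long timestampMicros) {
        byte[] avroBytes;
        // Use try-with-resources for automatic stream closing
        try (ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            SpecificDatumWriter<ObjectRecord> datumWriter =
                new SpecificDatumWriter<>(ObjectRecord.getClassSchema());
            BinaryEncoder encoder = EncoderFactory.get().binaryEncoder(out, null);
            datumWriter.write(record, encoder);
            encoder.flush(); // push buffered bytes into the stream before reading them
            avroBytes = out.toByteArray();
        } catch (IOException e) {
            LOG.error("Failed to serialize Avro record for objectKey '{}': {}",
                record.getObjectKey(), e.getMessage(), e);
            return Collections.emptyList(); // Return an immutable empty list
        }

        // Store the whole serialized record in a single cell of the column family.
        Mutation mutation =
            Mutation.newBuilder()
                .setSetCell(
                    Mutation.SetCell.newBuilder()
                        .setFamilyName(columnFamily)
                        .setColumnQualifier(ByteString.copyFromUtf8(BYTES_COLUMN_QUALIFIER_NAME))
                        .setValue(ByteString.copyFrom(avroBytes))
                        .setTimestampMicros(timestampMicros))
                .build();
        return Collections.singletonList(mutation);
    }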

Code for reading bytes from Bigtable

    // Imports assumed by this snippet (Bigtable Java client models, Avro, and an
    // SLF4J logger for LOG):
    import com.google.cloud.bigtable.data.v2.models.RowCell;
    import java.io.IOException;
    import java.util.List;
    import org.apache.avro.io.BinaryDecoder;
    import org.apache.avro.io.DecoderFactory;
    import org.apache.avro.specific.SpecificDatumReader;

    // Call site: take the cells of the column family from a fetched Row
    // (com.google.cloud.bigtable.data.v2.models.Row), then decode them:
    //   List<RowCell> cells = row.getCells(columnFamily); // Bigtable column family
    //   ObjectRecord record = processRowAsBytes(cells);

    /**
     * Processes Bigtable cells to deserialize an Avro object.
     * Replace ObjectRecord with your Avro-generated record class.
     *
     * @param cells List of Bigtable cells from one column family.
     * @return Deserialized data object, or null if not found or deserialization failed.
     */
    private ObjectRecord processRowAsBytes(List<RowCell> cells) {
        for (RowCell cell : cells) {
            String qualifier = cell.getQualifier().toStringUtf8();
            // Bigtable qualifiers are case-sensitive, so compare exactly.
            if (BYTES_COLUMN_QUALIFIER_NAME.equals(qualifier)) {
                byte[] byteInput = cell.getValue().toByteArray();
                try {
                    return deserialize(byteInput);
                } catch (IOException e) {
                    LOG.error("Cannot deserialize record from Bigtable to Data Object", e);
                }
            }
        }
        return null;
    }

    private ObjectRecord deserialize(byte[] avroBinaryPayload) throws IOException {
        // Reads with the generated class's schema, so it must match the writer's schema.
        SpecificDatumReader<ObjectRecord> datumReader =
            new SpecificDatumReader<>(ObjectRecord.class);
        BinaryDecoder decoder = DecoderFactory.get().binaryDecoder(avroBinaryPayload, null);
        return datumReader.read(null, decoder);
    }

Conclusion

The choice between binary and multi-column storage in Bigtable is a classic engineering trade-off between performance and flexibility. For this large-scale Dataflow use case, where whole-record processing was the norm, the benefits were overwhelmingly clear. Switching to a binary format yielded a 25% cost reduction, a ~50% improvement in read latency, and a ~40% improvement in write latency.

For any team operating a data-intensive platform, it’s a worthwhile exercise to challenge your assumptions and test your storage strategies. As this investigation shows, a small change can unlock massive gains in both performance and efficiency.

Source Credit: https://medium.com/google-cloud/optimizing-dataflow-with-bigtable-cost-and-performance-savings-4ff5c4338510