Google Cloud Pub/Sub is the best way to build decoupled, distributed, high-scale systems that need message delivery or queuing. This article covers best practices for using Pub/Sub efficiently and cost-effectively.

Pricing

Pricing is composed of 3 main components (monthly):

- Throughput ($40/TiB, or $50/TiB for BigQuery or Cloud Storage subscriptions): all data published to a Topic or read from a Subscription, with a minimum of 1 KB (1000 bytes) billed per publish, push, or pull request.

- Data egress: all data sent into Pub/Sub is free, but data sent out to the internet or between regions costs money.

- Storage ($0.27/GiB-month): for message retention on your Topic and for any message in a Subscription that is older than 1 day.
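To see how these components add up, here is a rough estimator. It is a sketch that assumes the list prices above, a single flat throughput rate, and that every Subscription reads every published byte; it ignores egress and the per-request minimum. The function name and its parameters are hypothetical.

```javascript
// Rough monthly estimate from the list prices quoted above (assumed; check current pricing).
const BYTES_PER_TIB = 1024 ** 4;

function estimateMonthlyCost({
  publishedBytes, // total bytes published to the Topic this month
  subscriptions, // active Subscriptions, each reading every published byte
  storedGiB = 0, // average retained storage in GiB (Topic retention + backlog older than 1 day)
  pricePerTiB = 40, // one flat throughput rate, for simplicity
  pricePerGiBMonth = 0.27,
}) {
  // Published bytes are billed once, then again for each Subscription's copy.
  const throughputTiB = (publishedBytes * (1 + subscriptions)) / BYTES_PER_TIB;
  const throughput = throughputTiB * pricePerTiB;
  const storage = storedGiB * pricePerGiBMonth;
  return { throughput, storage, total: throughput + storage };
}

// 10 TiB published and read by 2 Subscriptions: 30 TiB of throughput.
console.log(estimateMonthlyCost({ publishedBytes: 10 * BYTES_PER_TIB, subscriptions: 2 }));
// { throughput: 1200, storage: 0, total: 1200 }
```

Because every Subscription adds another full copy of the published bytes, the subscription count is usually the biggest lever on the throughput line.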

A quick recap

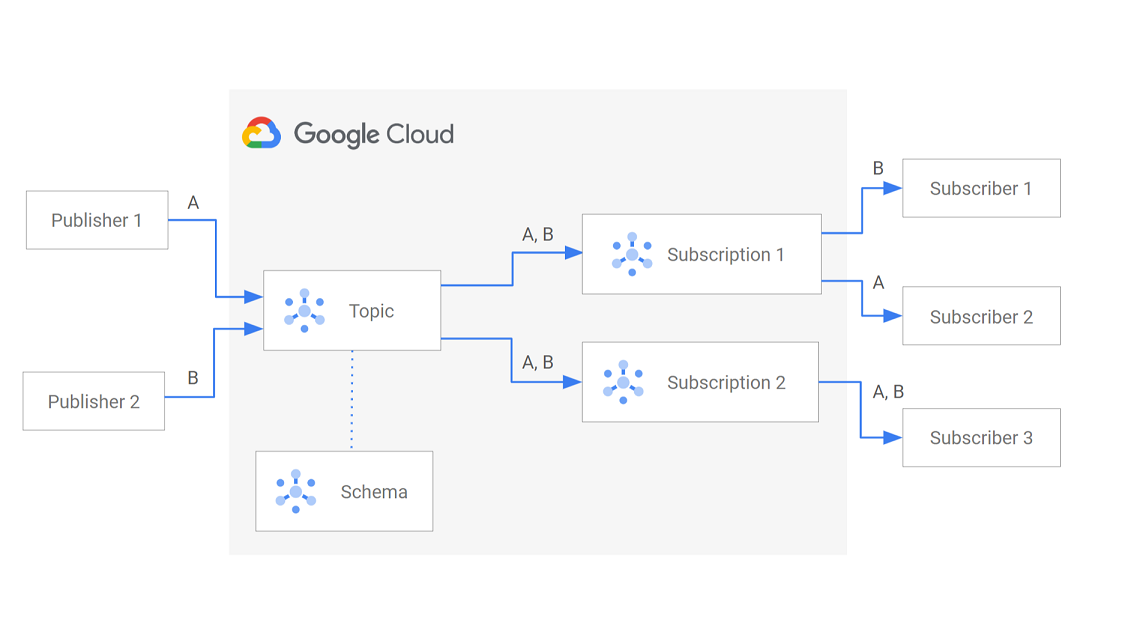

Pub/Sub is a global service, so you don't need to deploy a Topic in every region where you have workloads. Subscriptions attach to a Topic and receive its incoming messages. A Subscription is either Push or Pull based. Push is the simplest: Pub/Sub pushes messages to your subscribers and handles authentication, scaling, and backoff/retry, using exponential backoff to tune the push rate to what your consumers can handle.

Pro tip: Use Push unless you have a good reason not to.

Hint: There are very few reasons to use Pull. With Pull you have to write and manage the polling code yourself, and you're wasting compute $$$ if you run it on serverless compute like Cloud Run.
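A minimal sketch of creating a push subscription with the `@google-cloud/pubsub` Node.js client. The `pushConfig` fields (`pushEndpoint` and the optional `oidcToken.serviceAccountEmail`) follow the Subscription resource; the helper name, topic, endpoint, and service account are hypothetical placeholders, and `topic` is passed in so the function can be exercised with a stand-in object.

```javascript
// Hypothetical helper; `topic` is a Topic object from @google-cloud/pubsub.
async function createPushSubscription(topic, subscriptionName, pushEndpoint, serviceAccountEmail) {
  const pushConfig = { pushEndpoint };
  if (serviceAccountEmail) {
    // Pub/Sub attaches an OIDC token for this service account to each push request,
    // so a private Cloud Run service can authenticate the caller.
    pushConfig.oidcToken = { serviceAccountEmail };
  }
  // createSubscription resolves to [subscription, apiResponse].
  const [subscription] = await topic.createSubscription(subscriptionName, { pushConfig });
  return subscription;
}

// Usage (requires credentials and an existing topic):
// const { PubSub } = require('@google-cloud/pubsub');
// const topic = new PubSub().topic('orders');
// await createPushSubscription(topic, 'orders-push',
//   'https://my-service.example.run.app/', 'pusher@my-project.iam.gserviceaccount.com');
```

Everything else (scaling the push rate, retries, backoff) is handled by Pub/Sub once the subscription exists.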

Understand how Subscriptions work

Here are the key things to remember about a subscription:

- Every message published to a Topic is copied to each of its Subscriptions. This means Throughput charges grow linearly with the number of active Subscriptions.

- Each Subscription controls its own copy of each message independently. Acknowledging a message in one Subscription doesn't affect any other Subscription.

- A Subscription only contains messages that arrive at the Topic after the Subscription was created.
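To make these three rules concrete, here is a toy in-memory model. It is not the Pub/Sub client and ignores delivery, retries, and retention; `ToyTopic` is a hypothetical class written only to illustrate fan-out semantics.

```javascript
// Toy in-memory model of Topic-to-Subscription fan-out; not the Pub/Sub client.
class ToyTopic {
  constructor() {
    this.subscriptions = new Map(); // subscription name -> Map(messageId -> data)
    this.nextId = 1;
  }

  createSubscription(name) {
    // A new Subscription starts empty, so it never sees earlier messages.
    this.subscriptions.set(name, new Map());
  }

  publish(data) {
    const id = String(this.nextId++);
    // Every existing Subscription receives its own copy.
    for (const backlog of this.subscriptions.values()) backlog.set(id, data);
    return id;
  }

  ack(subscriptionName, id) {
    // Acknowledging removes only this Subscription's copy.
    this.subscriptions.get(subscriptionName).delete(id);
  }

  backlog(subscriptionName) {
    return [...this.subscriptions.get(subscriptionName).values()];
  }
}

const topic = new ToyTopic();
topic.createSubscription('billing');
topic.createSubscription('analytics');
const firstId = topic.publish('order-1'); // copied to billing and analytics
topic.ack('billing', firstId);
topic.createSubscription('late'); // created after order-1 was published
topic.publish('order-2');

console.log(topic.backlog('billing')); // [ 'order-2' ]
console.log(topic.backlog('analytics')); // [ 'order-1', 'order-2' ]
console.log(topic.backlog('late')); // [ 'order-2' ]
```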

Understand Message Retention

By default, Topics do not retain messages, while Subscriptions retain unacknowledged messages for 7 days. You can change both settings, at additional cost.

Pro Tip: If you need to Seek back in time and replay previously acknowledged messages, add Topic retention.
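A minimal sketch of the replay workflow with the Node.js client, assuming `topic` and `subscription` come from `@google-cloud/pubsub`. It sets the Topic's `messageRetentionDuration` (a protobuf Duration) through `setMetadata`, then seeks a Subscription to a timestamp, which marks retained messages published after that time as unacknowledged so they are redelivered. The helper names are hypothetical.

```javascript
// Hypothetical helpers; `topic` and `subscription` are objects from @google-cloud/pubsub.

// Keep messages on the Topic for `days` days so they can be replayed later.
async function enableTopicRetention(topic, days) {
  const seconds = days * 24 * 60 * 60;
  // messageRetentionDuration is a protobuf Duration field on the Topic resource.
  return topic.setMetadata({ messageRetentionDuration: { seconds } });
}

// Replay: retained messages published after `since` become unacknowledged again.
async function replayFrom(subscription, since) {
  return subscription.seek(since);
}

// Usage (requires credentials):
// const { PubSub } = require('@google-cloud/pubsub');
// const pubsub = new PubSub();
// await enableTopicRetention(pubsub.topic('orders'), 7);
// await replayFrom(pubsub.subscription('orders-sub'), new Date(Date.now() - 3600 * 1000));
```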

Delivery Format matters: Wrapped vs Unwrapped

By default, Pub/Sub wraps each published message in a Pub/Sub-specific JSON envelope and places your payload, base64-encoded, in the message.data field:

```json
{
  "message": {
    "data": "[Published message as a Base64 encoded string]",
    "messageId": "2070443601311540",
    "message_id": "2070443601311540",
    "publishTime": "2021-02-26T19:13:55.749Z",
    "publish_time": "2021-02-26T19:13:55.749Z"
  },
  "subscription": "projects/myproject/subscriptions/mysubscription"
}
```

Pub/Sub pushes messages using a standard HTTP POST with a Content-Type header of application/json, which lets downstream HTTP frameworks parse the body as JSON.

Your code must base64-decode the message.data field as its first step. Here's sample code for a Cloud Run function:

```javascript
const functions = require('@google-cloud/functions-framework');

functions.http('helloHttp', async (req, res) => {
  try {
    if (req.method !== 'POST') {
      res.status(405).send('Method Not Allowed');
      return;
    }

    // Get the push envelope from the request body
    const payload = req.body;

    if (!payload || !payload.message || !payload.message.data) {
      const errorMessage = 'Bad Request: Invalid Pub/Sub message format';
      console.error(errorMessage);
      res.status(400).send(errorMessage);
      return;
    }

    // message.data holds the published payload as a base64 string
    const b64Message = payload.message.data;
    const message = Buffer.from(b64Message, 'base64').toString('utf8').trim();
    console.log('Decoded msg: ' + message);

    const jsonObject = JSON.parse(message);

    // TODO: Do something with jsonObject

    // A 2xx response (here 200) acks the message
    res.status(200).send('Success');
  } catch (error) {
    // Log the Error itself; JSON.stringify(error) prints "{}" for Error objects
    console.error('Error:', error);
    // A non-success status such as 500 makes Pub/Sub redeliver the message
    res.status(500).send('Error processing request');
  }
});
```
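To exercise a handler like this locally, you can build a wrapped envelope yourself. These helpers are hypothetical and only mirror the fields shown in the wrapped format above; real deliveries can include more fields, such as message attributes.

```javascript
// Hypothetical test helpers mirroring the wrapped push format shown above.
function makePushEnvelope(obj, subscription = 'projects/myproject/subscriptions/mysubscription') {
  const messageId = String(Date.now());
  const publishTime = new Date().toISOString();
  return {
    message: {
      // Pub/Sub delivers the published bytes base64-encoded in message.data
      data: Buffer.from(JSON.stringify(obj)).toString('base64'),
      messageId,
      message_id: messageId,
      publishTime,
      publish_time: publishTime,
    },
    subscription,
  };
}

// Same decoding steps as the handler: validate, base64-decode, parse JSON.
function decodePushEnvelope(body) {
  if (!body || !body.message || typeof body.message.data !== 'string') {
    throw new Error('Invalid Pub/Sub push envelope');
  }
  return JSON.parse(Buffer.from(body.message.data, 'base64').toString('utf8'));
}

const envelope = makePushEnvelope({ orderId: 'abc-123', qty: 2 });
console.log(decodePushEnvelope(envelope)); // { orderId: 'abc-123', qty: 2 }
```

You can POST the same envelope as JSON to your locally running function with any HTTP client to check the full request path.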

Alternatively, you can enable message unwrapping (push subscriptions only), which tells Pub/Sub to deliver the published message as-is, without the JSON envelope. With unwrapped messages, all headers are removed from the HTTP POST request, including the Content-Type header.

Your Cloud Run service's HTTP framework parses the incoming request according to its Content-Type header, so the missing header causes parsing and delivery issues with certain frameworks.

Pro tip: When using unwrapped delivery, I suggest enabling the option to write metadata, which adds the message metadata back as HTTP headers, so you don't run into delivery and parsing issues downstream.
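A minimal sketch for the unwrapped case. It assumes the push config uses `noWrapper: { writeMetadata: true }` (field names from the PushConfig resource) and that metadata arrives as `x-goog-pubsub-*` headers; verify both against the push delivery docs. The helper names are hypothetical, and the parser only collects those metadata headers, not attribute headers. The key idea is to read the raw body bytes yourself instead of relying on Content-Type-based parsing.

```javascript
// Hypothetical helpers; field and header names are assumptions to verify in the Pub/Sub docs.

// Push config for unwrapped delivery that also writes metadata as HTTP headers.
function unwrappedPushConfig(pushEndpoint) {
  return { pushEndpoint, noWrapper: { writeMetadata: true } };
}

// Parse an unwrapped push request from its raw body and headers.
// The body is the published payload exactly as sent, so decode it ourselves.
function parseUnwrappedPush(rawBody, headers) {
  const metadata = {};
  for (const [name, value] of Object.entries(headers)) {
    const lower = name.toLowerCase();
    // Only collect Pub/Sub metadata headers (assumed x-goog-pubsub-* prefix).
    if (lower.startsWith('x-goog-pubsub-')) metadata[lower] = value;
  }
  const text = Buffer.isBuffer(rawBody) ? rawBody.toString('utf8') : String(rawBody);
  return { text, metadata };
}

const parsed = parseUnwrappedPush(Buffer.from('{"orderId":"abc-123"}'), {
  'x-goog-pubsub-message-id': '2070443601311540',
  'user-agent': 'APIs-Google',
});
console.log(parsed.metadata); // { 'x-goog-pubsub-message-id': '2070443601311540' }
```

In a plain Node.js HTTP server, collect the request's `data` chunks with `Buffer.concat` and pass the result to the parser.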

Best Practices

Message Sizes

Throughput is billed with a minimum of 1 KB (1000 bytes) per publish request, which means a 1-byte message costs the same as a 1 KB message. If your messages are small, use the client's batching settings to fill that 1 KB minimum by sending multiple messages in a single publish request.

For example, let's assume each message averages 300 bytes. You can then fit 3 messages (900 bytes) into each batch to make the most of the 1 KB minimum.
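The same arithmetic works for any message size. A minimal sketch, assuming the 1 KB minimum is 1000 bytes per publish request and that every message has the average size; `messagesPerBatch` and `billedBytes` are hypothetical helpers.

```javascript
// Assumed billing minimum per publish request, as described above.
const MIN_BILLABLE_BYTES = 1000;

// How many average-sized messages fit under the billing minimum (at least 1).
function messagesPerBatch(avgMessageBytes, minBillable = MIN_BILLABLE_BYTES) {
  return Math.max(1, Math.floor(minBillable / avgMessageBytes));
}

// Billed bytes for `count` messages sent in batches of `batchSize`.
function billedBytes(count, avgMessageBytes, batchSize, minBillable = MIN_BILLABLE_BYTES) {
  const perRequest = (n) => Math.max(minBillable, n * avgMessageBytes);
  const fullBatches = Math.floor(count / batchSize);
  const remainder = count % batchSize;
  return fullBatches * perRequest(batchSize) + (remainder ? perRequest(remainder) : 0);
}

const size = messagesPerBatch(300); // 3
console.log(billedBytes(3000, 300, 1)); // 3000000 (one request per message)
console.log(billedBytes(3000, 300, size)); // 1000000 (three messages per request)
```

Batching 300-byte messages in threes cuts billed publish bytes by a factor of three in this model.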

If you're using the Node.js client library, set the batching option maxMessages to 3. Always set maxMilliseconds as well, so messages aren't held back for long when publish throughput is low.

Then call the publishMessage method multiple times. The client will automatically buffer your messages and deliver them together.

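```javascript
const { PubSub } = require('@google-cloud/pubsub');

const pubSubClient = new PubSub();
const topicNameOrId = 'my-topic'; // placeholder: your topic name or ID
const dataBuffer = Buffer.from(JSON.stringify({ hello: 'world' })); // placeholder payload

const publishOptions = {
  batching: {
    maxMessages: 3, // Choose a value that gets you close to 1KB
    maxMilliseconds: 3000, // Don't delay too long: publish after at most 3 seconds
  },
};

async function publishBatch() {
  // Messages published through this Topic object are buffered and sent together
  const batchPublisher = pubSubClient.topic(topicNameOrId, publishOptions);
  const promises = [];

  for (let i = 0; i < 3; i++) {
    promises.push(
      (async () => {
        const messageId = await batchPublisher.publishMessage({
          data: dataBuffer,
        });
        console.log(`Message ${messageId} published.`);
      })(),
    );
  }

  await Promise.all(promises);
}

publishBatch().catch(console.error);
```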

Process messages within 1 day

Messages that sit in a Subscription for less than 1 day don't incur Storage charges, so try to process and acknowledge messages within 1 day. Monitor the unacknowledged messages metric: if it keeps increasing over time, your consumer is not keeping up.

Check the oldest unacknowledged message age metric to see how far behind your consumer is.
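A crude sketch of turning those two metrics into alerts. It assumes you have already exported samples of `subscription/num_undelivered_messages` and `subscription/oldest_unacked_message_age` (in seconds) from Cloud Monitoring; `assessBacklog` and its sample shape are hypothetical, and comparing only the first and last samples is a deliberately simple trend test.

```javascript
const ONE_DAY_SECONDS = 24 * 60 * 60;

// samples: oldest first, e.g. [{ undelivered: 120, oldestAgeSeconds: 300 }, ...]
function assessBacklog(samples) {
  if (samples.length === 0) throw new Error('need at least one sample');
  const first = samples[0];
  const last = samples[samples.length - 1];
  return {
    // Crude trend check: is the backlog larger now than at the start of the window?
    growing: last.undelivered > first.undelivered,
    // Unacked messages older than 1 day start accruing Storage charges.
    incursStorage: last.oldestAgeSeconds > ONE_DAY_SECONDS,
    hoursBehind: last.oldestAgeSeconds / 3600,
  };
}

console.log(assessBacklog([
  { undelivered: 10, oldestAgeSeconds: 60 },
  { undelivered: 500, oldestAgeSeconds: 90000 },
]));
// { growing: true, incursStorage: true, hoursBehind: 25 }
```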

Filter your Subscriptions

Always include attributes when you publish messages. Subscription filters match on attributes, so they let you ensure only relevant messages make it to a Subscription.

This prevents your consumer from attempting to process irrelevant messages, which improves processing throughput and overall cost efficiency.

Filtered-out messages skip the entire data transfer component of the pricing, but you still pay the Throughput component for them.

The cost savings can be very significant, especially with large messages or high volume.
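A minimal sketch of building an attribute equality filter and creating a filtered subscription with the Node.js client. The `attributes.KEY = "VALUE"` form and `AND` come from Pub/Sub's filter syntax; the helper names are hypothetical, and the key and value checks are conservative restrictions of this sketch, not Pub/Sub's own rules.

```javascript
// Hypothetical helpers; `topic` is a Topic from @google-cloud/pubsub.

// Build `attributes.KEY = "VALUE"` clauses joined with AND.
function buildAttributeFilter(required) {
  return Object.entries(required)
    .map(([key, value]) => {
      const text = String(value);
      // Conservative checks for this sketch: simple keys, no quotes or backslashes in values.
      if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(key) || /["\\]/.test(text)) {
        throw new Error(`Unsupported attribute for this simple filter: ${key}`);
      }
      return `attributes.${key} = "${text}"`;
    })
    .join(' AND ');
}

// Create a subscription that only receives messages whose attributes match.
function createFilteredSubscription(topic, name, required) {
  return topic.createSubscription(name, { filter: buildAttributeFilter(required) });
}

console.log(buildAttributeFilter({ type: 'order', region: 'us' }));
// attributes.type = "order" AND attributes.region = "us"

// Publishers must set the matching attributes, for example:
// await topic.publishMessage({ data, attributes: { type: 'order', region: 'us' } });
```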

Use a DLQ

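If you don't use a DLQ (dead-letter topic), a message that your consumer never acknowledges is redelivered until it exceeds the Subscription's retention duration, and is then deleted forever. This usually happens for a few reasons:

- Your consumer attempts to process a message but encounters an error or does not acknowledge the message

- Your consumer is unable to keep up with incoming publish throughput and messages start exceeding the retention setting

A dead-letter topic captures messages that exceed a maximum number of delivery attempts, which covers the first case; the second case is a capacity problem, so also watch your backlog metrics. Set up a separate process to inspect the DLQ and redrive the messages back into the main Topic.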
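A minimal sketch of both halves: subscription options with a `deadLetterPolicy` (`deadLetterTopic` as a full topic name and `maxDeliveryAttempts` between 5 and 100), and a redrive loop that republishes DLQ messages to the main Topic before acking them. The helper names are hypothetical; the `data`, `attributes`, and `ack()` members match messages received through the Node.js client. Pub/Sub's service account also needs permission to publish to the dead-letter topic and to subscribe to the source Subscription.

```javascript
// Hypothetical helpers for dead-lettering and redrive.

// Subscription options that forward repeatedly failing messages to a DLQ topic.
function deadLetterOptions(deadLetterTopicName, maxDeliveryAttempts = 5) {
  // Pub/Sub accepts between 5 and 100 delivery attempts.
  if (maxDeliveryAttempts < 5 || maxDeliveryAttempts > 100) {
    throw new RangeError('maxDeliveryAttempts must be between 5 and 100');
  }
  return { deadLetterPolicy: { deadLetterTopic: deadLetterTopicName, maxDeliveryAttempts } };
}

// Republish each dead-lettered message to the main topic, then ack it on the DLQ subscription.
async function redrive(messages, publishToMain) {
  let moved = 0;
  for (const msg of messages) {
    await publishToMain({ data: msg.data, attributes: msg.attributes });
    msg.ack(); // ack only after the republish succeeded
    moved++;
  }
  return moved;
}

// Usage with the real client (not run here):
// await mainTopic.createSubscription('orders-sub', deadLetterOptions('projects/my-project/topics/orders-dlq'));
// await redrive(dlqMessages, (m) => mainTopic.publishMessage(m));
```

Redrive only after fixing the root cause; otherwise the same messages will fail their delivery attempts and land back in the DLQ.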

Test throughput end to end

It's critical to understand how your consumers respond to different volumes of incoming messages. Measure CPU utilization and memory consumption so you can tune your consumer appropriately.

The only way to do this is to load test the flow of messages end to end. You can write your own load generator that publishes messages to Pub/Sub, but then you have to own that code and deploy it to compute.

I recommend using Google Cloud's Dataflow service to generate artificial load instead. It has a built-in template called the Streaming Data Generator.

Set the Output Rate to the rate you want to simulate.

Enable Streaming Engine so that part of the pipeline's work is offloaded from worker VMs to Google-managed infrastructure.

Next, provide Dataflow with a JSON template that it will use to generate random messages to publish to Pub/Sub. All supported configs and functions are listed in the template's documentation. Here's a sample template that publishes a fictitious order as JSON. Upload it to GCS so you can reference it in the next step.

```
{
  "id": {{integer(0,1000)}},
  "orderId": "{{uuid()}}",
  "firstName": "{{firstName()}}",
  "email": "{{email()}}",
  "state": "{{state()}}",
  "product": "{{random("labubu","molly","snoopy")}}",
  "isInStock": {{bool()}},
  "orderDate": "{{date('YYYY-MM-dd')}}"
}
```

Select the JSON template you uploaded and make sure you set the Output Sink to PUBSUB.

Finally, if you removed your default network and created a custom one, you need to specify the network and subnetwork (subnet) to deploy to. Pay attention to the subnetwork format: Dataflow expects either a full URL such as https://www.googleapis.com/compute/v1/projects/PROJECT/regions/REGION/subnetworks/SUBNET or the short form regions/REGION/subnetworks/SUBNET.

Dataflow provisions all resources and launches the job. This usually takes a few minutes, after which the job gradually ramps up the request rate to the maximum you configured. You can watch its progress in the Dataflow job view.
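Separately from the Dataflow job, you may want a local stand-in for unit testing your consumer against the same message shape. This hypothetical `fakeOrder` helper produces objects with the same fields as the sample template; names and states come from small fixed lists, so it only approximates the template's functions.

```javascript
const { randomUUID } = require('node:crypto');

// Hypothetical local generator mirroring the sample template's fields.
// `rand` is injectable so tests can make the output deterministic.
function fakeOrder(rand = Math.random) {
  const pick = (items) => items[Math.floor(rand() * items.length)];
  const firstName = pick(['Ana', 'Ben', 'Chen', 'Dara']);
  return {
    id: Math.floor(rand() * 1001), // integer in [0, 1000]
    orderId: randomUUID(),
    firstName,
    email: `${firstName.toLowerCase()}@example.com`,
    state: pick(['CA', 'NY', 'TX', 'WA']),
    product: pick(['labubu', 'molly', 'snoopy']),
    isInStock: rand() < 0.5,
    orderDate: new Date().toISOString().slice(0, 10), // yyyy-MM-dd (UTC)
  };
}

// A payload ready to publish, or to base64-encode into a test push envelope.
const payload = Buffer.from(JSON.stringify(fakeOrder()));
console.log(payload.toString('utf8'));
```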

Summary

This post doesn’t cover a bunch of features you should be aware of, such as:

Reach out to me if you need any help.

Happy Coding!

Source Credit: https://medium.com/google-cloud/top-google-cloud-pub-sub-tips-tricks-30cb0074c603?source=rss—-e52cf94d98af—4