Google Cloud’s automatic data lineage is a powerful feature, mapping data flows for supported services like BigQuery. But for a comprehensive data governance strategy, it’s important to address the blind spots: the custom scripts, legacy systems, and unique data sources that automated tools can’t see. This is where the Data Lineage API can be a valuable asset.

To use it effectively, it helps to understand its structure and its strategic role in your data ecosystem.

The Core Components of Lineage: Process, Run, and Event

The Data Lineage API is built on three core concepts. Let’s use a baking analogy.

1. The Process: Your Recipe

A Process is your recipe. It’s the static, reusable definition of what you’re doing — for example, the “Customer Data Transformation Pipeline.” It’s the blueprint for the transformation logic, and you can attach metadata to it, like the owner or a description. You define it once and reuse it for every execution.

2. The Run: Your Baking Session

A Run is the act of baking the cake. It’s a specific execution of your Process that happens at a particular time. If your “Nightly Sales Aggregation” Process is the recipe, the Run is what happened on “Tuesday night at 11 PM,” complete with a start time and a status (COMPLETED, FAILED, etc.).

3. The Lineage Event: The “Before and After” Photo

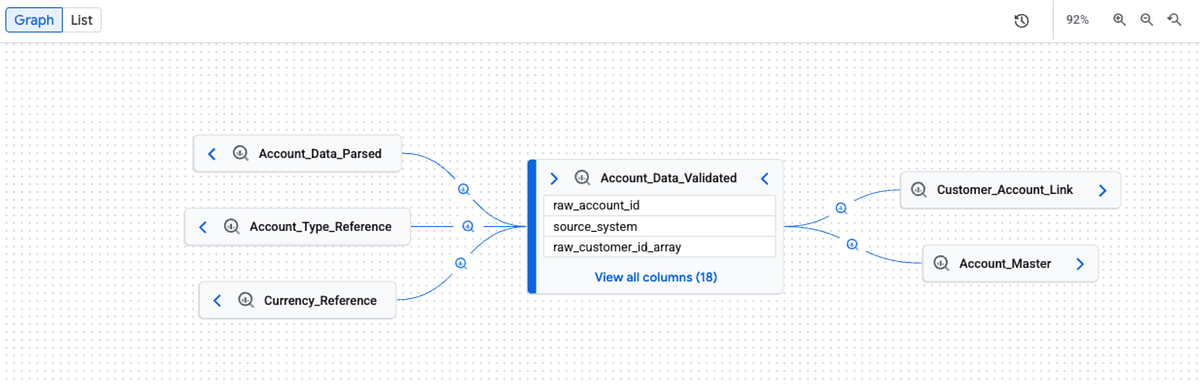

A Lineage Event is the proof. It’s the “before and after” photo of your ingredients (sources) and your finished cake (target). Recorded as part of a Run, it is what actually draws the lines on the lineage graph: each event links source assets to a target asset, identifying every asset by its unique fully_qualified_name (FQN).
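To make the three concepts concrete, here is a minimal sketch that models them as plain Python dataclasses and renders each one as a JSON-style request body. It does not call the API. The class names, the bigquery_fqn helper, and the sample project, dataset, and table names are illustrative assumptions; the camelCase field names are meant to mirror the API’s REST resources and should be checked against the reference before use.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def bigquery_fqn(project: str, dataset: str, table: str) -> str:
    """FQN for a BigQuery table, in the bigquery:project.dataset.table form."""
    return f"bigquery:{project}.{dataset}.{table}"


@dataclass
class Process:
    """The recipe: a static, reusable definition."""
    display_name: str
    attributes: dict = field(default_factory=dict)

    def to_body(self) -> dict:
        return {"displayName": self.display_name, "attributes": self.attributes}


@dataclass
class Run:
    """The baking session: one execution of a Process."""
    display_name: str
    start_time: datetime
    state: str = "STARTED"  # updated later to COMPLETED, FAILED, etc.

    def to_body(self) -> dict:
        return {
            "displayName": self.display_name,
            "startTime": self.start_time.isoformat(),
            "state": self.state,
        }


@dataclass
class LineageEvent:
    """The before-and-after photo: links from each source to the target."""
    sources: list
    target: str
    start_time: datetime

    def to_body(self) -> dict:
        links = [
            {
                "source": {"fullyQualifiedName": source},
                "target": {"fullyQualifiedName": self.target},
            }
            for source in self.sources
        ]
        return {"links": links, "startTime": self.start_time.isoformat()}


# Hypothetical sample values, used only to show the shape of each body.
started = datetime(2024, 1, 2, 23, 0, tzinfo=timezone.utc)
process = Process("Nightly Sales Aggregation", {"owner": "data-engineering"})
run = Run("Tuesday 11 PM run", started)
event = LineageEvent(
    sources=[bigquery_fqn("my-project", "sales", "raw_orders")],
    target=bigquery_fqn("my-project", "sales", "daily_totals"),
    start_time=started,
)
```

The nesting matters more than the field names: a Run only makes sense under a Process, and a Lineage Event only makes sense under a Run, which is why the API’s resource paths are nested in that order.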

The API as a Unified Control Plane

With these building blocks, the Data Lineage API becomes more than a tool; it’s a unified control plane for your entire data ecosystem’s metadata. It can act as a single source of truth for how data is transformed, regardless of where it happens. This provides a few key advantages:

- Holistic Governance: By manually reporting lineage, you close the gaps in your data map, which helps with impact analysis (“What breaks if I change this schema?”) and root cause analysis.

- Democratizing Trust: A complete lineage graph allows data consumers to self-serve and verify the trustworthiness of data by seeing its full journey.

- Future-Proofing: As your data stack evolves, the API provides a consistent way to report lineage, whether you’re using a Python script today or a Spark job tomorrow.
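The impact-analysis question above (“What breaks if I change this schema?”) boils down to a graph traversal over lineage links. The sketch below is not how Dataplex answers it internally; it is a small, self-contained illustration that assumes you already have the links as (source FQN, target FQN) pairs. The function name and the sample table names are hypothetical.

```python
from collections import defaultdict, deque


def downstream_assets(links, changed):
    """Return every asset reachable from `changed` by following source -> target links."""
    graph = defaultdict(set)
    for source, target in links:
        graph[source].add(target)

    seen = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            # Skip assets already visited, and the starting asset itself if a cycle leads back.
            if nxt not in seen and nxt != changed:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Hypothetical links, as they might be reported by custom lineage events.
links = [
    ("bigquery:p.raw.customers", "bigquery:p.staging.customers_clean"),
    ("bigquery:p.staging.customers_clean", "bigquery:p.marts.customer_360"),
    ("bigquery:p.raw.orders", "bigquery:p.marts.customer_360"),
]

impacted = downstream_assets(links, "bigquery:p.raw.customers")
```

A link that was never reported simply does not exist in this graph, which is the practical argument for closing the gaps: the traversal can only warn you about dependencies it knows about.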

Power Tools for Richer Lineage

The API also provides features to integrate with the broader ecosystem and enrich your lineage graphs.

- OpenLineage: Google has embraced OpenLineage, an open standard for data lineage. Using the dedicated ProcessOpenLineageRunEvent endpoint, you can send OpenLineage-formatted events directly to Dataplex, ensuring interoperability and a unified view.

- Rich Metadata: A lineage graph is most useful when it’s enriched with context. The API allows you to attach key-value attributes to your Process. A powerful example is adding a sql key containing the transformation query. Dataplex will render this as a highlighted code block in the UI, giving developers instant context.
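For the OpenLineage route, the payload is an ordinary OpenLineage run event. The sketch below only builds such an event as a dictionary; it does not send it, since that needs credentials and a project. The top-level fields follow the OpenLineage spec as I understand it, while the function name, the job namespace, the producer URL, and the spec version in schemaURL are assumptions chosen for illustration.

```python
import json
import uuid
from datetime import datetime, timezone


def build_openlineage_event(job_name, inputs, outputs, event_type="COMPLETE"):
    """Build a minimal OpenLineage run event for BigQuery tables.

    `inputs` and `outputs` are lists of "project.dataset.table" strings.
    """
    def dataset(table):
        return {"namespace": "bigquery", "name": table}

    return {
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": "custom-etl", "name": job_name},  # hypothetical namespace
        "inputs": [dataset(t) for t in inputs],
        "outputs": [dataset(t) for t in outputs],
        "producer": "https://example.com/custom-etl",  # hypothetical producer URI
        # Assumed spec version; check it against the OpenLineage release you target.
        "schemaURL": "https://openlineage.io/spec/1-0-5/OpenLineage.json#/definitions/RunEvent",
    }


ol_event = build_openlineage_event(
    job_name="customer_data_transformation",
    inputs=["project-id.dataset.source_table"],
    outputs=["project-id.dataset.target_table"],
)
payload = json.dumps(ol_event, indent=2)  # the JSON body you would send
```

Because the event is plain JSON, the same payload can also go to any other OpenLineage-compatible backend, which is the interoperability benefit mentioned above.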

From Theory to Practice

Let’s see how a simple Python wrapper can handle this workflow, turning the concepts into a concrete implementation.

    # DataplexLineage is the wrapper class from the repository linked below.
    lineage = DataplexLineage(project_id="your-project", location="your-location")

    lineage_event = lineage.create_lineage(
        source_entries=[
            lineage.build_fqn(project_id="project-id", gcs_path="gs://bucket/path/file.avro"),
            lineage.build_fqn(project_id="project-id", dataset_id="dataset", table_name="source_table"),
        ],
        target_entry=lineage.build_fqn(
            project_id="project-id",
            dataset_id="dataset",
            table_name="target_table",
        ),
        process_display_name="My ETL Process",  # The Recipe (Process)
        source_type="CUSTOM",
        labels={"env": "prod", "team": "data-engineering"},
        attributes={
            "description": "Process that transforms raw customer data",
            "sql": "SELECT * FROM source_table WHERE status = 'active'",  # Rich Metadata!
        },
    )

The full code for this DataplexLineage wrapper is available on GitHub: https://github.com/jskura/dataplex-lineage

This single function call handles the entire sequence:

- It finds or creates the Process (the recipe).

- It kicks off a new Run (the baking session).

- It creates the Lineage Event (the before-and-after photo), linking sources to the target and attaching your rich metadata.
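The find-or-create behaviour in the first step is worth seeing in isolation. The sketch below is not the wrapper’s actual implementation; it is a hypothetical in-memory stand-in (the class InMemoryLineage and its generated IDs are made up) that follows the same three steps without calling any API.

```python
import itertools


class InMemoryLineage:
    """Hypothetical in-memory stand-in for the wrapper; it makes no API calls."""

    def __init__(self):
        self.processes = {}  # display name -> process id
        self.runs = []       # (process id, run id, state)
        self.links = []      # (run id, source FQN, target FQN)
        self._ids = itertools.count(1)

    def create_lineage(self, process_display_name, source_entries, target_entry):
        # 1. Find or create the Process (the recipe).
        process_id = self.processes.get(process_display_name)
        if process_id is None:
            process_id = f"process-{next(self._ids)}"
            self.processes[process_display_name] = process_id

        # 2. Kick off a new Run (the baking session) on every call.
        run_id = f"run-{next(self._ids)}"
        self.runs.append((process_id, run_id, "COMPLETED"))

        # 3. Record the Lineage Event: one link per source, all pointing at the target.
        for source in source_entries:
            self.links.append((run_id, source, target_entry))
        return run_id


store = InMemoryLineage()
sources = ["bigquery:project-id.dataset.source_table"]
target = "bigquery:project-id.dataset.target_table"
first_run = store.create_lineage("My ETL Process", sources, target)
second_run = store.create_lineage("My ETL Process", sources, target)
```

Calling it twice with the same display name leaves one Process with two Runs, which matches the recipe-versus-baking-session split: the definition is reused, and each execution gets its own record.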

By using the Data Lineage API for your custom sources, you can move your data governance from a passive, automated process to a more active, comprehensive strategy. You’re not just mapping the main highways; you’re adding the local roads, helping to create a more complete and trustworthy map of your entire data landscape.

Source Credit: https://medium.com/google-cloud/a-practical-guide-to-custom-lineage-with-googles-data-lineage-api-0ee8d4d3af2c