Exploring a generative AI workflow that combines computer use, multimodal reasoning, and creative generation to build end-to-end shopping experiences.

👉 The complete code for this workflow is available on GitHub.

Introduction

Generative AI has transformed not only how we create, learn, and connect, but also how we shop. Online shopping often means endless scrolling, filtering, and comparing. Despite near-infinite options, finding what we actually want can still take hours.

But what if shopping were conversational? What if you could simply say “I need an outfit for a birthday party” or “I want to decorate my living room in a cozy, minimalist style,” and get back curated selections, a photorealistic preview of you wearing the outfit or of your room styled with new furniture, and even a short video that brings it all to life?

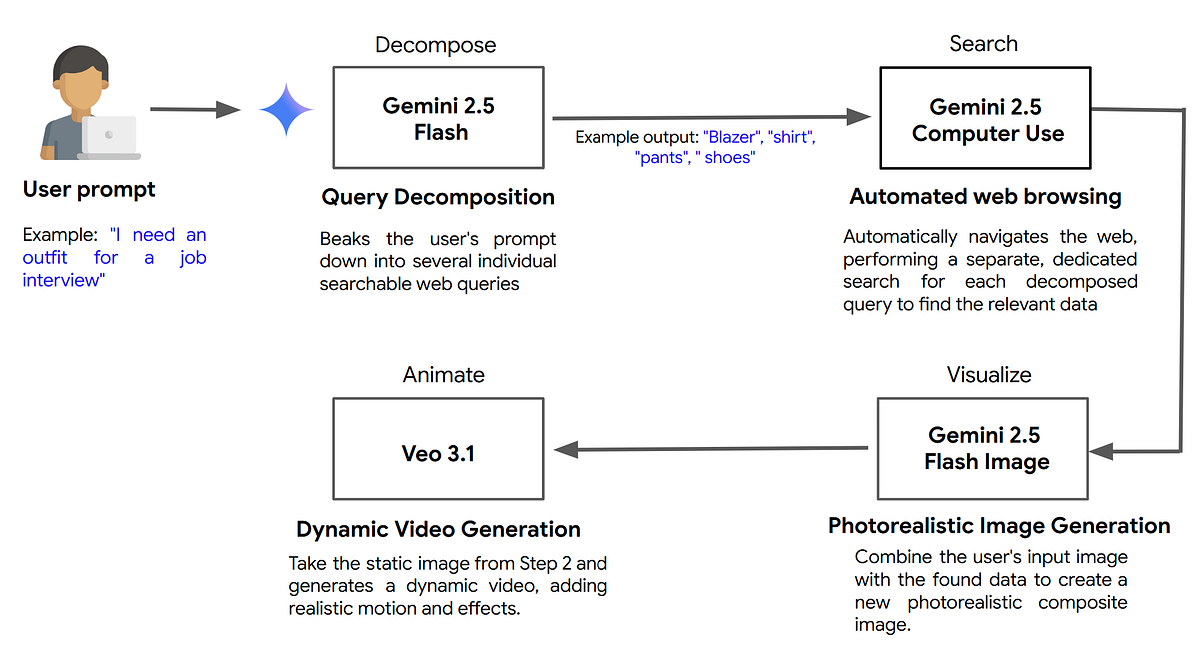

In this post, we’ll explore what becomes possible when Gemini 2.5 Computer Use, Gemini 2.5 Flash Image (aka Nano Banana), and Veo 3.1 work together to power an AI shopping workflow that can:

- Understand a user’s natural language intent and break it down into a list of structured, actionable search terms

- Automatically perform multiple searches on the web to collect relevant products for each term

- Generate realistic creative visuals and videos showing how items fit together

The AI Shopping Workflow: An Overview

While our main example will be finding an outfit, this exact workflow can be applied to other use cases, like home decor, event planning, and more.

Here’s the big picture of what we’re building.

Together, these three models form a multimodal pipeline:

- Gemini 2.5 Computer Use → Web navigation and data collection

- Gemini 2.5 Flash Image (Nano Banana) → Image synthesis and virtual try-on

- Veo 3.1 → Video generation and realistic motion

Step 1: Navigate Websites with an Agentic Loop (Gemini 2.5 Computer Use)

Once the user’s intent is broken down, the next task is interacting with the web to find the right data. This is where Gemini 2.5 Computer Use comes in.

What is Gemini 2.5 Computer Use?

In the context of artificial intelligence (AI), “computer use” refers to an agent’s ability to operate a computer’s graphical user interface (GUI) to achieve a goal. Instead of relying on structured data or APIs, this type of agent “sees” the screen and decides where to click, type, and scroll, using a virtual “mouse” and “keyboard” much as a person would operate a web browser.

Gemini 2.5 Computer Use is Google’s specialized model for exactly this task. Built on Gemini 2.5 Pro’s capabilities, it powers agents that can interact with user interfaces.

It works as part of an agentic loop process:

- Input: The model receives a user request (the goal) and a screenshot of the current environment.

- Analysis: It analyzes these inputs and generates a response, typically a function_call representing a UI action (e.g., click_at, type_text_at).

- Action: Your application code executes this action.

- Feedback: A new screenshot is captured and sent back to the model, restarting the loop.

- This iterative process continues until the task is complete.
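The four stages map directly onto a loop. Here is a minimal, self-contained sketch of that control flow in which the model and the browser are replaced by hypothetical stand-ins: ScriptedModel replays a fixed plan of actions and FakeBrowser only records what it is asked to do, so the loop runs without an API key or a real browser. The action names are modeled on those in the Computer Use documentation; in a real application the two stand-ins would be the Gemini API call and a browser automation library such as Playwright.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """A UI action proposed by the model, e.g. click_at or type_text_at."""
    name: str
    args: dict


@dataclass
class FakeBrowser:
    """Stand-in for a real browser: records actions and returns fake screenshots."""
    log: list = field(default_factory=list)

    def execute(self, action: Action) -> None:
        self.log.append((action.name, action.args))

    def screenshot(self) -> bytes:
        # A real implementation would return PNG bytes of the current page.
        return f"screenshot-after-{len(self.log)}-actions".encode()


class ScriptedModel:
    """Hypothetical stand-in for the Computer Use model.

    It ignores its inputs and replays a fixed plan; returning None stands for
    the model answering with text (task complete) instead of a function call.
    """

    def __init__(self, plan):
        self.plan = list(plan)

    def next_action(self, goal: str, screenshot: bytes):
        return self.plan.pop(0) if self.plan else None


def run_agent_loop(model, browser, goal: str, max_turns: int = 10) -> int:
    """Run the loop and return how many turns were used."""
    screenshot = browser.screenshot()                # initial environment state
    for turn in range(max_turns):
        action = model.next_action(goal, screenshot)  # Input + Analysis
        if action is None:                            # no further action: done
            return turn
        browser.execute(action)                       # Action
        screenshot = browser.screenshot()             # Feedback for the next turn
    return max_turns


plan = [
    Action("click_at", {"x": 500, "y": 80}),
    Action("type_text_at", {"x": 500, "y": 80, "text": "blazer", "press_enter": True}),
    Action("scroll_document", {"direction": "down"}),
]
browser = FakeBrowser()
turns = run_agent_loop(ScriptedModel(plan), browser, goal="Search for blazer")
print(turns, len(browser.log))  # 3 3
```

The max_turns cap matters in practice: it guarantees the loop ends even if the model never declares the task complete.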

In our AI shopping workflow, this agentic loop is the engine for the search step: it’s what turns the user’s intent into action on the web. When a user gives a complex prompt such as “I need an outfit for a job interview under 300 euros,” the workflow first uses the Gemini 2.5 Flash model to decompose the query into a list of searchable items (e.g., blazer, shirt, pants, shoes).
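The decomposition itself is ordinary text generation. A minimal sketch, assuming we ask Gemini 2.5 Flash to reply with a JSON array of search terms: the prompt wording and the helper names are our own illustration, not the repository's code, and the API call is left commented out (it uses client.models.generate_content from the google-genai SDK with a JSON response MIME type) so the parsing logic runs offline.

```python
import json

# Illustrative prompt (our own wording, not the repository's).
DECOMPOSE_PROMPT = """You are a shopping assistant.
Break the user's request into product search terms, one per item they need.
Reply with a JSON array of strings only.

Request: {request}"""


def build_decompose_prompt(request: str) -> str:
    return DECOMPOSE_PROMPT.format(request=request)


def parse_search_terms(model_text: str) -> list[str]:
    """Extract the JSON array from the model reply and normalize the terms."""
    start, end = model_text.find("["), model_text.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON array found in model reply")
    terms = json.loads(model_text[start:end + 1])
    seen, result = set(), []
    for term in terms:
        cleaned = str(term).strip().lower()
        if cleaned and cleaned not in seen:  # drop empty and duplicate terms
            seen.add(cleaned)
            result.append(cleaned)
    return result


# With the real model (requires the google-genai package and an API key):
# from google import genai
# client = genai.Client()
# response = client.models.generate_content(
#     model="gemini-2.5-flash",
#     contents=build_decompose_prompt("I need an outfit for a job interview under 300 euros"),
#     config={"response_mime_type": "application/json"},
# )
# search_terms = parse_search_terms(response.text)

print(parse_search_terms('["Blazer", "shirt", "pants", "shoes", "blazer"]'))
# ['blazer', 'shirt', 'pants', 'shoes']
```

Locating the outermost brackets keeps the parser tolerant of a model that adds a short preamble around the JSON.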

Once we have this list, the Computer Use agent takes over. For each item in the list, it performs an independent, automated browsing session. It will:

- Navigate to an e-commerce site (using permitted, test-friendly environments).

- Click the cookie acceptance button.

- Type “blazer” into the search bar and press Enter.

- Scroll down the page to load more results.

- Extract the product details (name, price, image URL) from the 5 best-matching items.

- Repeat this entire process for “shirt,” “pants,” and “shoes.”
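Because each item gets its own independent session, the outer orchestration is a simple loop over the search terms. The sketch below assumes a hypothetical search_fn that runs one browsing session and returns the extracted products for a term (in the real workflow, the Computer Use loop plus extraction). It also assumes the site returns results in relevance order, so the first five are treated as the best matches; four terms therefore yield 20 products.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Product:
    name: str
    price: float  # in the site's currency, e.g. euros on Amazon.fr
    image_url: str


def collect_products(
    search_terms: list[str],
    search_fn: Callable[[str], list[Product]],
    per_term: int = 5,
) -> dict[str, list[Product]]:
    """Run one independent search session per term and keep the top results."""
    results: dict[str, list[Product]] = {}
    for term in search_terms:
        try:
            products = search_fn(term)
        except Exception as exc:  # one failed session should not stop the others
            print(f"search for {term!r} failed: {exc}")
            products = []
        results[term] = products[:per_term]  # assumes results arrive best-first
    return results


def fake_search(term: str) -> list[Product]:
    """Offline stand-in for a browsing session: eight ranked results per term."""
    return [Product(f"{term} #{i}", 20.0 + i, f"https://example.com/{term}/{i}.jpg")
            for i in range(8)]


catalog = collect_products(["blazer", "shirt", "pants", "shoes"], fake_search)
print(sum(len(items) for items in catalog.values()))  # 20
```

Keeping sessions independent means a cookie banner or layout change that breaks one search does not lose the results already gathered for the other items.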

The image below shows our application’s logs, which capture the series of actions and observations as the model works towards its goal.

The code to run this is straightforward. We operate in a loop: we give the agent our goal and a screenshot, it gives us the next action to perform, we execute that action, and repeat.

```python
# From computer_use_helper.py - Main Action Loop
from google.genai.types import Content, Part

# The user's goal
goal = f"""Search Amazon.fr for "{search_terms}".
1. Click search box
2. Type: "{search_terms}"
3. Press Enter
4. Scroll down twice
5. Say "Done"
"""

# Give the goal and the first screenshot
contents = [
    Content(
        role="user",
        parts=[
            Part(text=goal),
            Part.from_bytes(data=screenshot, mime_type="image/png"),
        ],
    )
]

# Loop until the agent is "Done"
for turn in range(max_turns):
    # Get the agent's desired action
    response = client.models.generate_content(
        model=MODEL,  # "gemini-2.5-computer-use-preview-10-2025"
        contents=contents,
        config=config,  # Config specifies the computer_use tool
    )
    candidate = response.candidates[0]

    # Omitted for brevity

    # Execute the browser actions (click, type, scroll)
    results, fcs, parts = execute_actions(candidate, page, ...)

    # Create the function response and new screenshot
    function_responses, screenshot = create_function_responses(page, results, fcs, parts)

    # Omitted for brevity
```

At the end of this step, our workflow has a curated list of 20 products (5 for each of the 4 items) ready for the user to review.

👉 Note: For this demonstration, we target a single, known e-commerce site for simplicity and reliability. However, Gemini 2.5 Computer Use itself is site-agnostic, and this workflow could be generalized to navigate other sites.

In this design, the code provides a specific, multi-step goal (e.g., “Click search box,” “Type,” “Scroll”) and programmatically navigates to a known e-commerce site. This defines the high-level strategy for the agent. The Computer Use model’s job is to take each of those steps and work out how to execute it visually, for example, by finding the search box in the screenshot to get the coordinates to click, or identifying where to type the text.
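Turning the model's answer into a real click is the application's job. As we understand the Computer Use documentation, the model returns x and y on a normalized 1000 × 1000 grid (values 0–999) rather than in pixels, so the application scales them to the real viewport before acting. The sketch below pairs that conversion with a small dispatcher for click_at and type_text_at. It is our own illustration, not the repository's execute_actions: the 1440 × 900 viewport is an assumption, and page can be any object with Playwright-style mouse.click, keyboard.type, and keyboard.press methods (a SimpleNamespace recorder stands in here so it runs without a browser).

```python
from types import SimpleNamespace

SCREEN_WIDTH, SCREEN_HEIGHT = 1440, 900  # assumed browser viewport size


def denormalize(value: int, size: int) -> int:
    """Map a coordinate on the model's 0-999 grid to a pixel position."""
    return int(value / 1000 * size)


def execute_action(page, name: str, args: dict) -> None:
    """Execute one model-proposed action on a Playwright-like page object."""
    if name == "click_at":
        x = denormalize(args["x"], SCREEN_WIDTH)
        y = denormalize(args["y"], SCREEN_HEIGHT)
        page.mouse.click(x, y)
    elif name == "type_text_at":
        x = denormalize(args["x"], SCREEN_WIDTH)
        y = denormalize(args["y"], SCREEN_HEIGHT)
        page.mouse.click(x, y)  # focus the field before typing
        page.keyboard.type(args["text"])
        if args.get("press_enter", False):
            page.keyboard.press("Enter")
    else:
        raise NotImplementedError(f"unsupported action: {name}")


# Recording stand-in for a Playwright page.
calls = []
page = SimpleNamespace(
    mouse=SimpleNamespace(click=lambda x, y: calls.append(("click", x, y))),
    keyboard=SimpleNamespace(
        type=lambda text: calls.append(("type", text)),
        press=lambda key: calls.append(("press", key)),
    ),
)
execute_action(page, "type_text_at", {"x": 500, "y": 500, "text": "blazer", "press_enter": True})
print(calls)  # [('click', 720, 450), ('type', 'blazer'), ('press', 'Enter')]
```

Because the coordinates are resolution-independent, the same model output works for any viewport size, as long as the screenshots sent to the model match the viewport used for scaling.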

Step 2: Visualize (Gemini 2.5 Flash Image)

The agent has successfully found a list of products. Now, the next step in our workflow is to bridge the gap between a list of items and a cohesive look. How do these products look together? And more importantly, how could we create a photorealistic visualization of them on the user?

This is where Gemini 2.5 Flash Image (Nano Banana) steps in. Here’s how this step works in practice:

- The user selects their favorite items from the search results (e.g., one blazer, one shirt, one pair of pants, one pair of shoes).

- They upload a photo of themselves (the “original asset”).

- Our application creates a simple product collage from the selected items. This is a crucial step because Gemini 2.5 Flash Image (Nano Banana) is documented to work best with up to three input images. To send the user’s photo plus a full outfit of 3–4 items, we first combine all the product images into a single collage.

- We send the user’s photo and the product collage to the Gemini 2.5 Flash Image model with a clear, simple prompt.

```python
# From vto_generator.py - Nano Banana Virtual Try-On
from google.genai import types


def generate_multi_product_tryon(user_photo_base64, products, gemini_client):
    # ... (Logic to decode user photo)
    # ... (Logic to download product images)

    # 1. Create the collage (if more than 2 items)
    collage_bytes = create_product_collage(product_images_bytes)

    # 2. Build the prompt
    prompt = "Generate a picture of the person in the first image wearing all the clothes shown in the collage photo (second image)."
    model = "gemini-2.5-flash-image"

    # 3. Build the content parts
    parts = [
        types.Part.from_text(text=prompt),
        types.Part.from_bytes(data=user_photo_bytes, mime_type="image/jpeg"),  # Image 1
        types.Part.from_bytes(data=collage_bytes, mime_type="image/jpeg"),  # Image 2
    ]
    contents = [types.Content(role="user", parts=parts)]

    # 4. Generate the new image
    response = gemini_client.models.generate_content_stream(
        model=model,
        contents=contents,
        # Omitted for brevity
    )

    # ... (Extract image from response)
```
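The listing elides how the generated image is read back. With the google-genai SDK, generated images come back as response parts whose inline_data holds the bytes, while any text commentary arrives in other parts. A minimal sketch of pulling the first image out of the generate_content_stream iterator follows; the helper name is ours, and SimpleNamespace objects mimic the chunk structure (candidates, then content, then parts, then inline_data) so it runs offline.

```python
from types import SimpleNamespace
from typing import Iterable, Optional


def first_image_from_stream(chunks: Iterable) -> Optional[bytes]:
    """Return the bytes of the first inline image found in a streamed response."""
    for chunk in chunks:
        candidates = getattr(chunk, "candidates", None)
        if not candidates or candidates[0].content is None:
            continue  # e.g. chunks that carry only metadata
        for part in candidates[0].content.parts or []:
            inline = getattr(part, "inline_data", None)
            if inline is not None and inline.data:
                return inline.data
    return None


# Offline stand-ins shaped like streamed chunks: one text part, one image part.
text_chunk = SimpleNamespace(candidates=[SimpleNamespace(content=SimpleNamespace(
    parts=[SimpleNamespace(text="Here is the try-on.", inline_data=None)]))])
image_chunk = SimpleNamespace(candidates=[SimpleNamespace(content=SimpleNamespace(
    parts=[SimpleNamespace(text=None, inline_data=SimpleNamespace(
        data=b"\x89PNG...", mime_type="image/png"))]))])

print(first_image_from_stream([text_chunk, image_chunk]))  # b'\x89PNG...'
```

In the listing, this would be image_bytes = first_image_from_stream(response), since response there is the stream iterator.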

In seconds, the user receives a single, photorealistic Virtual Try-On (VTO) image of themselves wearing the complete outfit they just assembled from the agent’s search results.
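The collage itself needs nothing model-specific. Here is a minimal sketch, assuming the Pillow library handles the image work. grid_layout computes a near-square grid in plain Python. needs_collage encodes the "user photo plus at most two product images" rule implied by a three-image input budget. make_collage pastes each product onto a white canvas and returns JPEG bytes. The names and the 400-pixel cell size are our own choices, and the repository's create_product_collage may work differently.

```python
import io
import math


def needs_collage(n_products: int, max_inputs: int = 3) -> bool:
    """The user photo takes one input slot; merge products if they exceed the rest."""
    return n_products + 1 > max_inputs


def grid_layout(n: int, cell: int = 400) -> tuple[int, int, list[tuple[int, int]]]:
    """Return (canvas_width, canvas_height, top-left corner of each cell)."""
    if n <= 0:
        raise ValueError("need at least one image")
    cols = math.ceil(math.sqrt(n))
    rows = math.ceil(n / cols)
    positions = [((i % cols) * cell, (i // cols) * cell) for i in range(n)]
    return cols * cell, rows * cell, positions


def make_collage(images_bytes: list[bytes], cell: int = 400) -> bytes:
    """Paste each product image into a white grid and return JPEG bytes."""
    from PIL import Image  # requires Pillow (pip install pillow)

    width, height, positions = grid_layout(len(images_bytes), cell)
    canvas = Image.new("RGB", (width, height), "white")
    for data, (x, y) in zip(images_bytes, positions):
        img = Image.open(io.BytesIO(data)).convert("RGB")
        img.thumbnail((cell, cell))  # shrink in place, keeping the aspect ratio
        # Center the thumbnail inside its cell.
        canvas.paste(img, (x + (cell - img.width) // 2, y + (cell - img.height) // 2))
    out = io.BytesIO()
    canvas.save(out, format="JPEG", quality=90)
    return out.getvalue()


print(needs_collage(4), grid_layout(4))
# True (800, 800, [(0, 0), (400, 0), (0, 400), (400, 400)])
```

A white background and centered thumbnails keep each garment visually separate, which gives the model clear, distinct items to dress the person in.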

Step 3: Animate (Veo 3.1)

We have a static, photorealistic image, which is a fantastic result. But to truly enhance the user experience, we can take it one step further. A dynamic video shows the outfit from multiple angles, helping the user understand its fit and flow in a way a static image can’t. The final step in our workflow is to bring this static image to life.

This final animation step is powered by Veo 3.1, Google’s most capable video generation model. Veo can take a starting image and a text prompt to generate a high-definition, coherent video with complex and realistic camera movements.

Our workflow logic for this final step is:

- We take the static virtual try-on image generated by Nano Banana in Step 2. This will be the starting frame for our video.

- Our application logic checks the list of selected items. If it detects “shoes,” “boots,” or other footwear, it knows a full-body shot is required.

- Based on this check, it builds an intelligent prompt:

- If shoes are present: It requests a “smooth 360-degree camera rotation” to show the full outfit from head to toe.

- If no shoes are present: It requests a “smooth 180-degree rotation” to focus on the upper body.

- This starting image and the custom prompt are sent to the Veo 3.1 API.

This “smart prompt” logic ensures the generated video is always framed correctly for the specific outfit.
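The footwear check can be as simple as a keyword match over the selected product names. This sketch rests on our own assumptions. The keyword list is illustrative, and because the products come from Amazon.fr it includes a few French terms such as “chaussures” and “bottes.” Matching whole words avoids false positives such as “boot” inside “bootcut.”

```python
import re

# Illustrative keyword list (assumption): English plus a few French terms,
# since product names come from Amazon.fr in this demo.
FOOTWEAR_KEYWORDS = {
    "shoe", "shoes", "boot", "boots", "sneaker", "sneakers", "loafer", "loafers",
    "heels", "sandals", "chaussure", "chaussures", "bottes", "bottines",
}


def has_footwear(product_names: list[str]) -> bool:
    """True if any product name contains a footwear keyword as a whole word."""
    for name in product_names:
        words = re.findall(r"\w+", name.lower())
        if any(word in FOOTWEAR_KEYWORDS for word in words):
            return True
    return False


def camera_movement(product_names: list[str]) -> str:
    """Pick the camera instruction for the Veo prompt."""
    if has_footwear(product_names):
        return "Smooth 360-degree clockwise rotation around the person, showing all angles."
    return "Smooth 180-degree rotation (front to back to front)."


print(has_footwear(["Bootcut jeans", "Oxford shirt"]))        # False
print(has_footwear(["Slim blazer", "Leather Chelsea Boots"]))  # True
```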

```python
# From video_generator.py - Veo 3.1 Video Generation
from google.genai import types


def generate_outfit_video_veo(user_photo_base64, products, project_id, ...):
    # ... (Logic to check for shoes)
    has_shoes = any(keyword in name.lower() for name in product_names ...)

    # 1. Select the right camera movement based on presence of shoes
    if has_shoes:
        # Full outfit with shoes - 360° rotation
        prompt = f"""A professional video showing the full outfit.
SUBJECT: The person wearing {len(products)} new clothing items.
CAMERA MOVEMENT: Smooth 360-degree clockwise rotation around the person, showing all angles.
VISUAL STYLE: Full body shot (head to toe visible), well-lit, simple neutral background. Keep the person centered.
"""
    else:
        # Upper body outfit - 180° rotation
        prompt = f"""A professional video showing the upper-body outfit.
SUBJECT: The person wearing {len(products)} new clothing items.
CAMERA MOVEMENT: Smooth 180-degree rotation (front to back to front).
VISUAL STYLE: Medium shot focusing on the upper body, well-lit, simple neutral background. Keep the person centered.
"""

    # 2. Load the try-on image (output from Step 2)
    tryon_image = types.Image.from_file(location=temp_path)

    # 3. Call the Veo 3.1 API
    operation = client.models.generate_videos(
        model="veo-3.1-generate-preview",
        prompt=prompt,
        image=tryon_image,  # Use the try-on as the starting frame
        config=types.GenerateVideosConfig(
            aspect_ratio="9:16",  # Vertical for outfit showcase
            duration_seconds=8,
            resolution="1080p",
        ),
    )

    # ... (Wait for operation to complete)
```

The result is an 8-second, high-definition, vertical video of the user in their new outfit, complete with smooth, professional camera motion. It’s a dynamic asset that shows the products in motion from multiple angles (a full 360° rotation when the outfit includes shoes), taking the shopping experience to a whole new level.
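Video generation runs as a long-running operation, which is why the Veo listing ends with a wait. One way to implement that wait is a polling loop with a timeout. In the google-genai SDK the refresh call is client.operations.get(operation), and a finished operation has done set to true. The sketch below takes the refresh step as a parameter (our own design choice) so it can be exercised with a fake operation instead of a real Veo job.

```python
import time
from types import SimpleNamespace
from typing import Callable, TypeVar

Op = TypeVar("Op")


def wait_for_operation(
    operation: Op,
    refresh: Callable[[Op], Op],
    poll_seconds: float = 10.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Op:
    """Poll a long-running operation until its done flag is set."""
    deadline = time.monotonic() + timeout_seconds
    while not operation.done:
        if time.monotonic() > deadline:
            raise TimeoutError("video generation did not finish before the timeout")
        sleep(poll_seconds)
        operation = refresh(operation)  # re-fetch the latest operation state
    return operation


# Offline demo: a fake operation that reports done on the third refresh.
refresh_calls = []


def fake_refresh(op):
    refresh_calls.append(op.name)
    return SimpleNamespace(name=op.name, done=len(refresh_calls) >= 3)


op = wait_for_operation(SimpleNamespace(name="ops/demo", done=False), fake_refresh,
                        sleep=lambda seconds: None)
print(op.done, len(refresh_calls))  # True 3

# With the google-genai client from the listing (not run here):
# operation = wait_for_operation(operation, client.operations.get)
```

The timeout keeps a stuck job from blocking the user indefinitely, which matters when the whole workflow already takes several minutes.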

Beyond Apparel: The Workflow’s Versatility

While we used outfit generation as the main example, this agentic workflow generalizes beautifully to many domains. Whether it’s home decor, event planning, or recipe creation, the pattern (Decompose → Search → Visualize → Animate) remains the same.
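Because the pattern stays the same across domains, the orchestration can be written once and the domain-specific parts passed in. The sketch below is a hypothetical skeleton with stage names and signatures of our own. Each stage is a plain function, so switching from outfits to furniture or recipes means swapping stage implementations, not rewriting the pipeline.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Pipeline:
    """Decompose -> Search -> Visualize -> Animate, with pluggable stages."""
    decompose: Callable[[str], list[str]]                # request -> search terms
    search: Callable[[str], list[Any]]                   # term -> candidate products
    select: Callable[[dict[str, list[Any]]], list[Any]]  # user picks items
    visualize: Callable[[bytes, list[Any]], bytes]       # photo + items -> image
    animate: Callable[[bytes, list[Any]], bytes]         # image + items -> video

    def run(self, request: str, user_photo: bytes) -> dict:
        terms = self.decompose(request)
        candidates = {term: self.search(term) for term in terms}
        chosen = self.select(candidates)
        image = self.visualize(user_photo, chosen)
        video = self.animate(image, chosen)
        return {"terms": terms, "chosen": chosen, "image": image, "video": video}


# Stub stages standing in for Gemini 2.5 Flash, Computer Use, Nano Banana, and Veo.
demo = Pipeline(
    decompose=lambda req: ["mid-century sofa", "walnut coffee table"],
    search=lambda term: [f"{term} A", f"{term} B"],
    select=lambda cands: [items[0] for items in cands.values()],
    visualize=lambda photo, items: photo + b" + staged",
    animate=lambda image, items: image + b" + video",
)
result = demo.run("mid-century living room under $2000", b"room.jpg")
print(result["chosen"])  # ['mid-century sofa A', 'walnut coffee table A']
print(result["video"])   # b'room.jpg + staged + video'
```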

Example 1: Home Decor

- Prompt: “I need a mid-century modern look for my living room under $2000.”

- Step 1: The agent browses sites like Wayfair or IKEA, searching for “mid-century sofa,” “walnut coffee table,” and “abstract rug” that fit the budget. It gathers a list of products.

- Step 2: The user uploads a photo of their empty living room…

- Step 3: Veo 3.1 takes the “virtually staged” room and generates a short video, smoothly panning across the room to showcase the new furniture from different angles.

Example 2: Professional Recipe Showcase

- Prompt: “I need a 3-course Italian dinner menu for a corporate event this week.”

- Step 1: The agent decomposes this into “Italian appetizer,” “Italian main course,” and “Italian dessert.” It browses recipe sites to find 3–4 top-rated options for each, extracting ingredients and instructions.

- Step 2: The user selects one recipe from each category. They upload a photo of a simple dinner plate. Nano Banana generates a photorealistic image of the final, plated meal.

- Step 3: Veo 3.1 takes the plated meal image and generates a short, appetizing promotional video, perhaps with a slow zoom or a subtle steam effect, making the recipe irresistible.

Demo: AI Shopping Workflow in Action

Watch the end-to-end workflow in action, from intent understanding to product search, virtual try-on, and video generation.

👉 Note: For demonstration purposes, some browsing sequences in the video have been accelerated. In real-world usage, the complete workflow (searching 4 items across Amazon.fr, generating virtual try-on, and creating video) takes approximately 8–9 minutes from start to finish.

Conclusion

What we’ve built here is just one of many possible generative AI workflows. It shows the power of specialized multimodal models accessible via APIs, each designed for a specific task, from reasoning and computer use to image and video generation.

By combining these capabilities, we can build complex, end-to-end applications that move beyond simple Q&A to actively assist users in completing real-world tasks. As models become more multimodal and compositional, these orchestrated pipelines are shaping the next generation of AI experiences, ones that understand, act, and collaborate with users seamlessly.

Responsible Use

Gemini 2.5 Computer Use was built with safety at its core. Developers should follow the best practices outlined in the documentation, including per-step safety checks, user confirmation for sensitive actions, and thorough testing before deployment. Use these capabilities responsibly, with transparency, respect for website policies, and a focus on trust, safety, and human benefit.
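For the “user confirmation for sensitive actions” practice, the Computer Use documentation describes a safety decision that can accompany a proposed action. When that decision asks for confirmation, the application should pause and ask the user before executing. The sketch below is a hedged illustration of such a gate. The field names (safety_decision, decision, require_confirmation, explanation) follow our reading of the documentation and should be checked against the current reference, and ask_user is a hypothetical callback.

```python
from typing import Callable


def should_execute(args: dict, ask_user: Callable[[str], bool]) -> tuple[bool, bool]:
    """Decide whether to run an action; returns (execute, acknowledged).

    Assumes the action's args may carry a safety_decision dict such as
    {"decision": "require_confirmation", "explanation": "..."}.
    """
    decision = args.get("safety_decision")
    if not decision or decision.get("decision") != "require_confirmation":
        return True, False  # no confirmation requested
    message = decision.get("explanation", "The agent wants to perform a sensitive action.")
    approved = ask_user(message)
    return approved, approved  # acknowledge only if the user agreed


risky = {"x": 400, "y": 600, "safety_decision": {
    "decision": "require_confirmation",
    "explanation": "Clicking 'Buy now' will place an order."}}
print(should_execute({"x": 10, "y": 20}, ask_user=lambda msg: False))  # (True, False)
print(should_execute(risky, ask_user=lambda msg: False))               # (False, False)
```

Returning the acknowledgement flag separately lets the caller report the user's consent back to the model along with the next function response.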

Disclaimer

This project is a technical proof-of-concept for educational purposes. The workflow shown in the demo is intended to illustrate the capabilities of these AI models. The use of a commercial website and its content is for demonstration purposes only.

A real-world application of this technology would require explicit permission from website owners to ensure it aligns with their policies and content usage guidelines.

Source Credit: https://medium.com/google-cloud/building-a-multimodal-ai-shopping-workflow-with-gemini-2-5-916fe04800c6?source=rss----e52cf94d98af---4