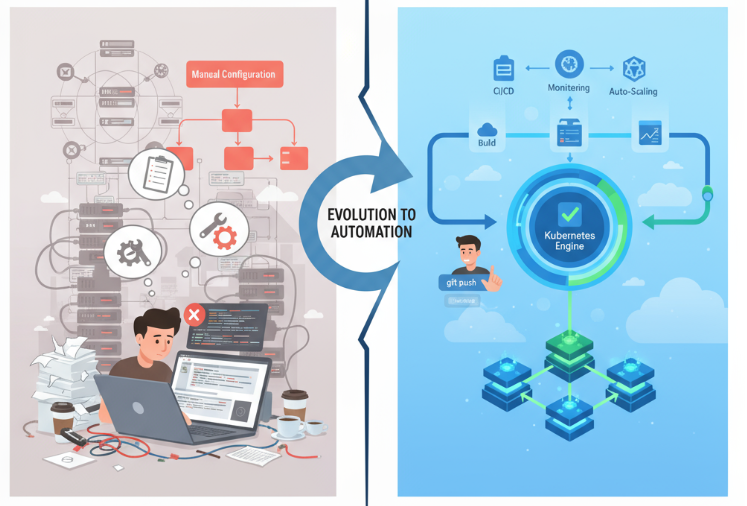

Google Kubernetes Engine (GKE) has always provided powerful ways to run containerized workloads, but the options for defining and managing the underlying compute resources have evolved steadily over time, from manual node pool management to sophisticated, policy-driven automation with Node Auto-Provisioning and Custom ComputeClasses. Google also recently announced the best of Autopilot in Standard, which lets you use Autopilot features for selected workloads in Standard clusters.

I'd like to take a minute here to step back and recap all of the current options for node selection in GKE, across both Standard and Autopilot. This post does just that: it walks through this evolution, showcasing each configuration option and its key features.

Stage 1: Manual Configuration using Node Pools and Selectors

In the early days of GKE, users had direct control over their cluster infrastructure, primarily by manually creating and managing node pools. Each node pool would be configured with a specific machine type, sizing, and features like spot VMs or attached GPUs.

Pods are then steered to the right nodes using nodeSelector or node affinity rules in the Pod specification.

For example, first create the node pool, shown here as a gcloud command snippet:

```shell
# Manually create a node pool
gcloud container node-pools create my-n2-pool --machine-type=n2-standard-8 …
```

and then target the node pool (or the machine type) in the Pod's spec.nodeSelector field:

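```yaml
# Pod spec targeting a manually created node pool
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod
spec:
  nodeSelector:
    cloud.google.com/gke-nodepool: my-n2-pool
    # Alternatively, select by machine type instead of node pool:
    # node.kubernetes.io/instance-type: n2-standard-8
  containers:
  - name: my-app                      # minimal container so the manifest is valid
    image: registry.k8s.io/pause:3.9
```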
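Node affinity, the other placement mechanism mentioned above, can express the same constraint more flexibly. The sketch below assumes a second, hypothetical node pool named my-n2d-pool alongside my-n2-pool, and lets the scheduler place the Pod on either one:

```yaml
# Sketch: node affinity equivalent of the nodeSelector example.
# my-n2d-pool is a hypothetical second node pool used for illustration.
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: cloud.google.com/gke-nodepool
            operator: In
            values: ["my-n2-pool", "my-n2d-pool"]
  containers:
  - name: my-app
    image: registry.k8s.io/pause:3.9
```

A preferredDuringSchedulingIgnoredDuringExecution rule (a weighted preference term) can express a soft preference instead of a hard requirement.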

This model offers a lot of control over the provisioned compute, but it requires operational overhead to manage, scale, and optimize costs across potentially many node pools.

Stage 2: Automation with Node Auto-Provisioning (NAP)

To reduce the overhead of manual node pool management, GKE introduced Node Auto-Provisioning (NAP).

When a pod cannot be scheduled on any existing node, NAP automatically creates a new node pool with a machine type suited to the workload's requirements, and deletes it when it's no longer needed. This removes the need to pre-create every possible node configuration.

NAP supports a wide range of node specifications such as spot VMs, GPUs, TPUs, and specific machine families (via node selectors like cloud.google.com/machine-family).
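As a sketch of the machine-family selector, the Pod below asks for an N2D node. If no existing node carries that label, NAP can create a suitable node pool, assuming NAP is enabled on the cluster and its resource limits allow it. The name and resource values are illustrative:

```yaml
# Sketch: let NAP pick an N2D machine type sized to the Pod's requests.
apiVersion: v1
kind: Pod
metadata:
  name: n2d-pod                 # illustrative name
spec:
  nodeSelector:
    cloud.google.com/machine-family: n2d
  containers:
  - name: app
    image: registry.k8s.io/pause:3.9
    resources:
      requests:
        cpu: "2"
        memory: "4Gi"
```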

Consider the sample Pod specification below, which requests one nvidia-tesla-t4 GPU. If no existing node has that accelerator type, NAP will provision one.

```yaml
# Example Pod requesting resources that NAP can use to provision a new node pool
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  nodeSelector:
    cloud.google.com/gke-accelerator: nvidia-tesla-t4
  containers:
  - name: cuda-container
    image: nvidia/cuda:11.0.3-base-ubuntu20.04
    resources:
      limits:
        nvidia.com/gpu: 1
      requests:
        cpu: "2"
        memory: "8Gi"
```
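NAP only creates nodes within the cluster-wide resource limits you configure, and a GPU type has to be listed in those limits before NAP will provision a node with it, as the T4 Pod above requires. A minimal sketch of an autoprovisioning config file, which could be applied with gcloud container clusters update CLUSTER_NAME --enable-autoprovisioning --autoprovisioning-config-file=FILE; CLUSTER_NAME and FILE are placeholders, and the limit values are illustrative rather than recommendations:

```yaml
# Sketch: NAP resource limits for the whole cluster (memory is in GB).
# All values are illustrative placeholders.
resourceLimits:
  - resourceType: 'cpu'
    minimum: 1
    maximum: 64
  - resourceType: 'memory'
    minimum: 1
    maximum: 256
  - resourceType: 'nvidia-tesla-t4'   # allow NAP to create T4 nodes
    maximum: 4
```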

NAP can significantly improve cluster efficiency and reduce manual operational overhead.

Stage 3: Guided Specialization — Autopilot’s Built-in Compute Classes

GKE Autopilot marked a major shift by abstracting away node management. Initially, Autopilot provided general-purpose compute, in effect finding the “best fit” node for each workload based on its resource requests. However, to cater to more specific needs without reintroducing manual node management, Built-in Compute Classes were introduced.

These classes (Scale-Out, Balanced, and Performance) provided a simpler way to request nodes optimized for particular characteristics. For example, use the Scale-Out class to run with multi-threading disabled; use Balanced for CPU- and memory-intensive workloads; and use Performance with cloud.google.com/machine-family: c3 to request C3 machines (example below).

```yaml
# Pod spec using the Performance compute class with a specific machine family
apiVersion: v1
kind: Pod
metadata:
  name: performance-workload
spec:
  nodeSelector:
    cloud.google.com/compute-class: Performance
    cloud.google.com/machine-family: c3
  containers:
  - name: my-container
    image: "registry.k8s.io/pause:3.9"   # k8s.gcr.io is frozen; registry.k8s.io replaces it
    resources:
      requests:
        cpu: 4000m
        memory: 16Gi
```
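For comparison, here is a sketch of an Autopilot Pod requesting the Scale-Out class. The class can also be combined with the kubernetes.io/arch selector to request Arm nodes; arm64 is an illustrative choice here, so check that Arm capacity is available in your region:

```yaml
# Sketch: Autopilot Pod on the Scale-Out class, requesting Arm nodes.
apiVersion: v1
kind: Pod
metadata:
  name: scale-out-workload      # illustrative name
spec:
  nodeSelector:
    cloud.google.com/compute-class: Scale-Out
    kubernetes.io/arch: arm64
  containers:
  - name: my-container
    image: registry.k8s.io/pause:3.9   # multi-arch image, runs on arm64
    resources:
      requests:
        cpu: "1"
        memory: 4Gi
```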

While useful within the Autopilot context, built-in classes were a stepping stone towards more granular control.

Stage 4: More Control with Custom ComputeClasses

Custom ComputeClasses (CCCs) brought a new level of declarative power and control over node provisioning to GKE. They integrate fully with NAP and extend it, while also supporting manually managed node pools.

These ComputeClasses allow the platform admin to define profiles for node provisioning and autoscaling and apply them to workloads, namespaces or clusters. Key capabilities to take note of include:

- Prioritized Hardware Choices: Define a list of preferred machine types, families, Spot/On-Demand, reservations, and accelerator configurations.

- Automated Fallbacks: Improve workload obtainability by automatically trying lower-priority options when the preferred ones are out of stock.

- Active Migration: Optionally migrate workloads back to higher-priority (e.g., more cost-effective) nodes when capacity becomes available.

- NAP Integration: When spec.nodePoolAutoCreation.enabled is true, the ComputeClass dictates how NAP provisions new node pools.

- Manual Node Pool Support: ComputeClasses can also apply to manually created node pools. This is done by labeling and tainting the node pools with cloud.google.com/compute-class set to the ComputeClass name, and ensuring the nodes match one of the class's priority rules.

Let’s look at an example below. First, NAP is enabled by setting “spec.nodePoolAutoCreation.enabled: true”. Then a priority list of machine families is specified, which GKE evaluates in order; the highest-priority option that can be satisfied becomes the deployment target for the workload.

Finally, setting “spec.activeMigration.optimizeRulePriority: true” lets GKE actively migrate workloads to a higher-priority option when it becomes available, while spec.autoscalingPolicy.consolidationDelayMinutes sets how long GKE waits before removing underutilized nodes.

```yaml
# Custom ComputeClass for GKE Standard with NAP
apiVersion: cloud.google.com/v1
kind: ComputeClass
metadata:
  name: general-purpose-standard
spec:
  nodePoolAutoCreation:
    enabled: true
  priorities:
  - machineFamily: n2
    spot: true
  - machineFamily: n2d
    spot: true
  - machineFamily: e2
    spot: true
  - machineFamily: n2
    spot: false
  activeMigration:
    optimizeRulePriority: true
  autoscalingPolicy:
    consolidationDelayMinutes: 10
```

Pods then reference the class via the node selector:

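```yaml
# Deployment whose Pods use the Custom ComputeClass
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                  # illustrative name
spec:
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      nodeSelector:
        cloud.google.com/compute-class: general-purpose-standard
      containers:
      # … container spec
```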
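Rather than adding the selector to every workload, a ComputeClass can also be made the default for a namespace by labeling it. A sketch, assuming a hypothetical namespace named team-a; Pods in it that don't select a compute class of their own would then use general-purpose-standard:

```yaml
# Sketch: make general-purpose-standard the default ComputeClass for a namespace.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a                  # hypothetical namespace
  labels:
    cloud.google.com/default-compute-class: general-purpose-standard
```

The same label can be applied to an existing namespace with kubectl label namespaces team-a cloud.google.com/default-compute-class=general-purpose-standard.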

This declarative approach of ComputeClasses helps centralize infrastructure policy and enhance resource obtainability. It can help platform admins work toward a future-proof platform by allowing for adoption of new machine types without requiring changes to application manifests.
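Manual and automatic provisioning can also be mixed in one class: a priority rule can name manually created node pools directly. The sketch below uses a hypothetical class named n2-pool-first that prefers the my-n2-pool pool from Stage 1, falls back to NAP-created N2D nodes, and sets whenUnsatisfiable: DoNotScaleUp so that Pods stay pending rather than landing on hardware outside the list. For the manual pool to be used, its nodes also need the cloud.google.com/compute-class=n2-pool-first node label and a matching NoSchedule taint.

```yaml
# Sketch: prefer an existing manual node pool, then fall back to NAP.
# n2-pool-first is a hypothetical class name; my-n2-pool comes from Stage 1.
apiVersion: cloud.google.com/v1
kind: ComputeClass
metadata:
  name: n2-pool-first
spec:
  nodePoolAutoCreation:
    enabled: true
  priorities:
  - nodepools: [my-n2-pool]     # manually created pool, tried first
  - machineFamily: n2d          # NAP-created fallback
    minCores: 4
    minMemoryGb: 16
  whenUnsatisfiable: DoNotScaleUp
```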

Stage 5: The unified experience — Autopilot Mode in GKE Standard

In the latest advancement in GKE, you can now run workloads in GKE Autopilot mode within your existing GKE Standard clusters. This means you can have Autopilot features like Google-managed nodes, pod-based pricing, and the hardened security posture for specific workloads, alongside your traditional, user-managed node pools in GKE Standard.

This “mixed-mode” operation is enabled via ComputeClasses in a couple of ways:

1. You can use the pre-installed autopilot or autopilot-spot ComputeClasses:

```yaml
# Pod spec using the built-in autopilot ComputeClass in a Standard cluster
apiVersion: v1
kind: Pod
metadata:
  name: my-autopilot-pod-in-standard
spec:
  nodeSelector:
    cloud.google.com/compute-class: autopilot # This triggers Autopilot management
  containers:
  - name: my-container
    image: "registry.k8s.io/pause:3.9"
    resources:
      requests:
        cpu: 500m
        memory: 1Gi
```

2. Or, you can enable Autopilot mode within a custom ComputeClass definition using the autopilot: enabled: true flag:

```yaml
# Custom ComputeClass enabling Autopilot mode for N2 machines
apiVersion: cloud.google.com/v1
kind: ComputeClass
metadata:
  name: n2-autopilot-managed
spec:
  autopilot:
    enabled: true # Key flag to enable Autopilot management
  priorities:
  - machineFamily: n2
    spot: false
  activeMigration:
    optimizeRulePriority: true
```

And then reference it in your workload:

```yaml
# Deployment using the custom n2-autopilot-managed ComputeClass
spec:
  template:
    spec:
      nodeSelector:
        cloud.google.com/compute-class: n2-autopilot-managed
      # … containers …
```
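The Spot variant of the pre-installed class is used the same way. A sketch, assuming the class is named autopilot-spot in your cluster (kubectl get computeclasses lists the classes that actually exist). Spot capacity can be reclaimed at short notice, so this suits fault-tolerant workloads:

```yaml
# Sketch: run a fault-tolerant Pod on Autopilot-managed Spot capacity
# in a Standard cluster. The class name autopilot-spot is an assumption.
apiVersion: v1
kind: Pod
metadata:
  name: my-spot-pod-in-standard   # illustrative name
spec:
  nodeSelector:
    cloud.google.com/compute-class: autopilot-spot
  containers:
  - name: my-container
    image: registry.k8s.io/pause:3.9
    resources:
      requests:
        cpu: 500m
        memory: 1Gi
```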

This hybrid approach means you no longer face a hard “either/or” choice between GKE Standard and Autopilot cluster types upfront. You can now run workloads with special requirements on user-managed node pools alongside Autopilot-managed workloads.

From a pricing perspective, you can use existing Committed Use Discounts (CUDs) across both VM-based and Pod-based pricing models within a single cluster.

This unifies the GKE platform, providing a single, flexible environment that adapts to a wide range of workloads.

Visualizing the Evolution

They say a picture is worth a thousand words, so I have tried to summarise all of the above in one: a flow chart, really. Each stage is presented with its implementation details along with some key user benefits.

Key Takeaways & Next Steps

The evolution of compute management in GKE, from manual node pool selection to NAP to the policy-driven approach of Custom ComputeClasses, demonstrates a clear trend towards greater automation, resilience, and efficiency.

To read more and get started with GKE, NAP and Custom ComputeClasses, explore the following Google Cloud documentation:

Source Credit: https://medium.com/google-cloud/the-evolution-of-compute-selection-in-google-kubernetes-engine-gke-from-manual-to-intelligent-9a22a1cffd60?source=rss—-e52cf94d98af—4