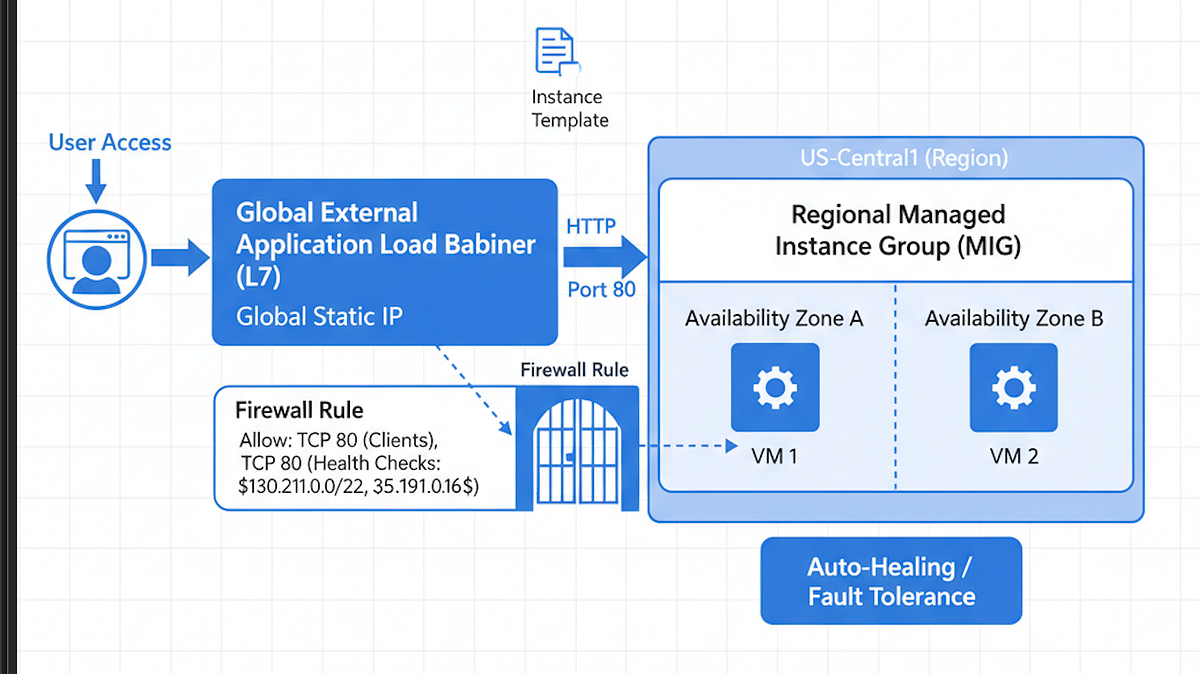

In this tutorial, we will build a production-ready, highly available web application on Google Cloud Platform. Our primary focus is fault tolerance and auto-healing: if a VM fails, it is automatically replaced, and the load balancer stops routing traffic to it as soon as health checks mark it unhealthy, keeping downtime to a minimum.

Why is this level of reliability necessary? In the real world, failures are inevitable, and any single point of failure can turn one of them into an outage:

- Zone Failures: A rare, localized power outage or network issue in a single data center (Zone) could take down all your services instantly. By distributing our app across multiple zones using a Regional Managed Instance Group (MIG), we ensure the service remains operational even if an entire zone goes offline.

- Software Crashes/Corruptions: If a bug in your application or an automated configuration change causes the web server on a VM to crash or hang, health checks detect the failure: the Load Balancer stops sending that VM traffic, and the MIG's Auto-Healing feature recreates it. No manual intervention is needed, and service is restored in minutes.

- Traffic Spikes: While our focus here is on healing, a highly available setup is the foundation for scaling. When a flash sale or viral news post drives a sudden surge of users, having the infrastructure ready to handle the load across multiple instances ensures your application remains responsive and doesn’t buckle under pressure.

Key Components:

- VPC Firewall: Secure ingress for health checks.

- Instance Template (with Startup Script): Defines the application installation process.

- Managed Instance Group (MIG): Handles auto-healing and scaling.

- Global External Application Load Balancer: Provides a single, global entry point.

Prerequisites and Code Setup

The Demo Application

We will use a simple Python Flask application. Clone the code from the dedicated repository.

Code Repository:

https://github.com/Amiynarh/gcp-network-demo-app

The repository contains:

- main.py: The Flask app.

- requirements.txt: Specifies dependencies.

- startup-script.sh: The critical script that installs and runs the app on the VM.

Part 1: Configuring the Network and Compute

Step 1: Create the Firewall Rule for Health Checks

The Load Balancer needs to confirm that your VMs are alive, so it probes them on the application port (80). These health check probes come from Google’s dedicated IP ranges, 130.211.0.0/22 and 35.191.0.0/16, so you must explicitly allow ingress traffic from these ranges on port 80.

To create the firewall rule via the console, navigate to VPC Network > Firewall > Create firewall rule:

- Name: fw-allow-lb-health

- Targets: Specified target tags

- Target tags: allow-health-check

- Source filter: IP ranges

- Source IP ranges: 130.211.0.0/22, 35.191.0.0/16

- Protocols and ports: Check Specified protocols and ports and enter tcp:80

Or use the gcloud command:

```bash
gcloud compute firewall-rules create fw-allow-lb-health \
  --network=default \
  --action=ALLOW \
  --direction=INGRESS \
  --source-ranges=130.211.0.0/22,35.191.0.0/16 \
  --target-tags=allow-health-check \
  --rules=tcp:80
```
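To make the rule's matching logic concrete, here is a small Python model of it. This is only an illustration under simplifying assumptions: GCP evaluates firewall rules itself, real evaluation also involves rule priorities and the implied deny-ingress rule, and the IngressRule class and FW_ALLOW_LB_HEALTH name are hypothetical. It shows that a probe is admitted only when its source address falls in one of the two ranges, the traffic is tcp:80, and the VM carries the allow-health-check tag.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical model of a single ingress ALLOW rule (no priorities, no deny rules)."""

    source_ranges: tuple[str, ...]
    target_tag: str
    protocol: str
    port: int

    def allows(self, src_ip: str, protocol: str, port: int, vm_tags: set[str]) -> bool:
        """True if a packet from src_ip to protocol:port on a VM with vm_tags matches."""
        if self.target_tag not in vm_tags:
            return False  # the rule only applies to VMs carrying the target tag
        if protocol != self.protocol or port != self.port:
            return False
        addr = ipaddress.ip_address(src_ip)
        return any(addr in ipaddress.ip_network(cidr) for cidr in self.source_ranges)


# Mirrors the fw-allow-lb-health rule created above
FW_ALLOW_LB_HEALTH = IngressRule(
    source_ranges=("130.211.0.0/22", "35.191.0.0/16"),
    target_tag="allow-health-check",
    protocol="tcp",
    port=80,
)

if __name__ == "__main__":
    tags = {"http-server", "allow-health-check"}
    print(FW_ALLOW_LB_HEALTH.allows("130.211.3.17", "tcp", 80, tags))  # True: inside the /22
    print(FW_ALLOW_LB_HEALTH.allows("130.211.4.1", "tcp", 80, tags))  # False: just past the /22
    print(FW_ALLOW_LB_HEALTH.allows("35.191.8.9", "tcp", 80, {"http-server"}))  # False: tag missing
```

Note how the target tag matters as much as the source range: a VM created without allow-health-check would fail every probe even though the rule exists.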

Step 2: Create the Instance Template with Startup Script

The instance template is the blueprint for every VM in our deployment: it defines the VM configuration, including the software and startup script, so the MIG can create identical replicas.

To set up the instance template in the console, navigate to Compute Engine > Instance templates.

- Click Create instance template.

- Name: lb-backend-template

- Machine type: e2-micro (sufficient for the demo).

- Firewall: Check Allow HTTP traffic (or rely on the tags below).

- Network tags (under Networking): Add http-server, allow-health-check

- Management section > Automation: Paste the contents of startup-script.sh into the Startup script box.

gcloud Command

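(Note: In real-world setups, --metadata-from-file=startup-script=startup-script.sh is cleaner than inlining the file with $(cat ...), because --metadata splits its value on commas, so a script containing commas may be misparsed.)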

```bash
gcloud compute instance-templates create lb-backend-template \
  --machine-type=e2-micro \
  --image-family=debian-11 \
  --image-project=debian-cloud \
  --tags=http-server,allow-health-check \
  --metadata=startup-script="$(cat startup-script.sh)"
```
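If you drive the deployment from a script, one way to follow the note above is to build the command as an argument list and pass the startup script by path with --metadata-from-file. The helper name instance_template_argv is hypothetical; the flags mirror the command above, with --metadata swapped for --metadata-from-file.

```python
import shlex
from typing import List, Tuple


def instance_template_argv(
    name: str,
    script_path: str,
    machine_type: str = "e2-micro",
    image_family: str = "debian-11",
    image_project: str = "debian-cloud",
    tags: Tuple[str, ...] = ("http-server", "allow-health-check"),
) -> List[str]:
    """Build the gcloud argv for the template, passing the startup script by file path."""
    return [
        "gcloud", "compute", "instance-templates", "create", name,
        f"--machine-type={machine_type}",
        f"--image-family={image_family}",
        f"--image-project={image_project}",
        f"--tags={','.join(tags)}",
        # gcloud reads the file itself, so the script never passes through shell quoting
        f"--metadata-from-file=startup-script={script_path}",
    ]


if __name__ == "__main__":
    argv = instance_template_argv("lb-backend-template", "startup-script.sh")
    print(shlex.join(argv))  # printable form, for review before running
    # To execute (requires an authenticated gcloud CLI):
    #   import subprocess; subprocess.run(argv, check=True)
```

Passing a list rather than a single shell string to subprocess.run avoids shell quoting entirely.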

Step 3: Create the Regional Managed Instance Group (MIG)

The MIG uses the template to create and manage the VMs, ensuring auto-healing and high availability across zones.

Navigate to Compute Engine > Instance groups.

- Click Create instance group.

- Group name: lb-backend-group

- Location: Select Regional (e.g., us-central1).

- Instance template: Select lb-backend-template.

- Autoscaling: Set minimum number of instances to 2.

- Autohealing: Under Initial delay, set 300 seconds (to allow app installation).

- Port mapping (crucial for the LB): Under Configure ports, click Add item and map port name http to port number 80.

Note: Why 300 seconds? The initial delay gives your application time to fully install and start the web server defined in startup-script.sh before the health checker starts probing it. If the checks begin too soon, the instance is marked UNHEALTHY (even though it is still starting up) and replaced, which wastes resources and can cause an endless repair loop. 300 seconds leaves a comfortable margin for a successful first start; if your startup script takes longer, raise it accordingly.
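The repair loop described above can be illustrated with a deliberately simplified simulation. Assumptions, all hypothetical: the app needs boot_seconds to start answering /healthz, probes begin only after the initial delay and repeat every check_interval seconds, and the MIG recreates the VM after unhealthy_threshold consecutive failures (ignoring the time recreation itself takes). Real MIG autohealing has more nuance, so read this as a sketch of the dynamics rather than GCP's exact behavior.

```python
from typing import Tuple


def simulate_autohealing(
    boot_seconds: int,
    initial_delay: int,
    check_interval: int = 10,
    unhealthy_threshold: int = 3,
    horizon: int = 3600,
) -> Tuple[int, bool]:
    """Simplified model: return (recreations, came_up) for one VM over `horizon` seconds."""
    created_at = 0
    recreations = 0
    failures = 0
    t = initial_delay  # no probes count until the initial delay has passed
    while t <= horizon:
        if t - created_at >= boot_seconds:
            return recreations, True  # app is listening, so the probe gets 200 OK
        failures += 1
        if failures >= unhealthy_threshold:
            # Declared unhealthy: the VM is recreated and boot starts from scratch
            recreations += 1
            created_at = t
            failures = 0
            t = created_at + initial_delay
        else:
            t += check_interval
    return recreations, False


if __name__ == "__main__":
    # Hypothetical: installing dependencies and starting the app takes about 4 minutes
    print(simulate_autohealing(boot_seconds=240, initial_delay=60))   # (45, False)
    print(simulate_autohealing(boot_seconds=240, initial_delay=300))  # (0, True)
```

With a 60-second delay the VM is recreated before it ever finishes booting, for the whole simulated hour; with 300 seconds the very first probe succeeds.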

gcloud Command

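```bash
gcloud compute instance-groups managed create lb-backend-group \
  --base-instance-name=lb-backend \
  --size=2 \
  --template=lb-backend-template \
  --region=us-central1

# Map the port name "http" to port 80 so the backend service can reach the app
gcloud compute instance-groups set-named-ports lb-backend-group \
  --named-ports=http:80 \
  --region=us-central1
```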
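A regional MIG spreads its instances across zones in the region, and that spread is what lets the service survive the zone failures described at the start. GCP chooses and balances the zones itself; the sketch below uses a simplified round-robin placement over three hypothetical zone names to show why --size=2 still leaves at least one instance running when any single zone goes down.

```python
from typing import Dict, List


def spread_across_zones(size: int, zones: List[str]) -> Dict[str, int]:
    """Round-robin placement: per-zone counts differ by at most one (simplified model)."""
    counts = {zone: 0 for zone in zones}
    for i in range(size):
        counts[zones[i % len(zones)]] += 1
    return counts


def survivors_after_outage(counts: Dict[str, int], failed_zone: str) -> int:
    """Instances still serving when one whole zone goes offline."""
    return sum(n for zone, n in counts.items() if zone != failed_zone)


if __name__ == "__main__":
    zones = ["us-central1-a", "us-central1-b", "us-central1-c"]  # hypothetical choice
    placement = spread_across_zones(2, zones)
    print(placement)  # {'us-central1-a': 1, 'us-central1-b': 1, 'us-central1-c': 0}
    print(min(survivors_after_outage(placement, z) for z in zones))  # 1
```

In this model, raising the size to 6 leaves 4 instances after any single-zone outage, which is the capacity you would plan around for the worst case.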

Part 2: The Global External Application Load Balancer

This component ties everything together, providing a single, reliable entry point.

Step 4: Reserve a Global Static IP Address

A global IP is required for the front end of the global load balancer. In the console, navigate to VPC network > IP addresses.

- Click Reserve external static address.

- Name: lb-ipv4-1

- Network Service Tier: Premium (required for Global LB)

- Type: Global

Note: Why Premium Tier? The Premium Tier offers the highest performance and lowest latency because it uses Google’s global, private fiber network for the majority of the connection path, starting right from the user’s entry point. This is required for a Global External Load Balancer because you want a single IP that serves users worldwide using the fastest path possible. The Standard Tier uses the public internet for part of the path, which is not suitable for a global entry point.

gcloud Command

```bash
gcloud compute addresses create lb-ipv4-1 \
  --ip-version=IPV4 \
  --global
```

Step 5: Configure the Load Balancer

Use the Load Balancing wizard to configure the three parts: Backend, Host Rules, and Frontend. Navigate to Network Services > Load balancing > Create load balancer.

- Select Application Load Balancer (HTTP/S) > Public facing (external) > Global External Application Load Balancer.

- Click Configure.

A. Backend configuration

- Backend services & backend buckets: Create a new backend service.

- Name: web-backend-service

- Backend type: Instance group

- Instance group: Select lb-backend-group.

- Port numbers: 80

- Health check: Create a new check.

  - Name: http-health-check

  - Protocol: HTTP

  - Request path: /healthz (This is the endpoint we wrote in the Flask app!)
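The health check created here does not flip a backend's state on a single probe: besides a check interval and timeout, it uses consecutive-success and consecutive-failure thresholds. A minimal model of that state machine follows; the class name BackendHealth, the threshold values of 2, and starting out unhealthy are assumptions for illustration, so check the actual values on your health check. A probe counts as passing only on HTTP 200; a timeout or connection error is represented as None.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BackendHealth:
    """Threshold-based health state for one backend (simplified, hypothetical model)."""

    healthy_threshold: int = 2    # consecutive passes needed to become healthy
    unhealthy_threshold: int = 2  # consecutive failures needed to become unhealthy
    healthy: bool = False         # assumption: a new backend starts out unhealthy
    streak: int = 0               # consecutive probes that disagree with the current state

    def record(self, status_code: Optional[int]) -> bool:
        """Feed one probe result (None = timeout or connection error); return the state."""
        passed = status_code == 200  # only HTTP 200 counts as a pass
        if passed == self.healthy:
            self.streak = 0  # probe agrees with the current state, so reset the streak
            return self.healthy
        self.streak += 1
        needed = self.healthy_threshold if passed else self.unhealthy_threshold
        if self.streak >= needed:
            self.healthy = passed
            self.streak = 0
        return self.healthy


if __name__ == "__main__":
    backend = BackendHealth()
    # Two passes bring it up; a 500 followed by a timeout takes it down again
    print([backend.record(code) for code in [200, 200, 500, None, 200]])
    # [False, True, True, False, False]
```

The thresholds trade reaction speed for stability: a single slow response does not pull a backend out of rotation, but a backend that keeps failing stops receiving new requests after a few probe intervals.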

B. Frontend configuration

- Protocol: HTTP

- IP address: Select lb-ipv4-1

- Port: 80

NOTE: Port 80 is the default port for HTTP (unencrypted web) traffic. When a user types a public IP address into their browser without specifying a port, the browser connects to port 80, so this frontend is the single entry point for all client requests.

Give your load balancer a name, then click Create.

Step 6: Final Verification

Wait 5–10 minutes for the load balancer to provision.

Find the global IP address of the Load Balancer.

Paste the IP into a browser.

Expected Result

You should see:

“Hello from the GCP Network Reliability Demo Backend! Instance Name: <VM hostname>”

If you refresh the page, the VM hostname should change, confirming traffic is being load-balanced across your MIG instances.
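Refreshing a browser only gives a rough impression, so you may prefer to sample the load balancer from a script and count which instance answered each request. This sketch assumes the response body contains the text "Instance Name:" followed by the hostname, as in the expected result above; the helper names are hypothetical.

```python
import re
import urllib.request
from collections import Counter
from typing import Iterable, List, Optional

# Assumes the greeting format from the expected result; hostnames use letters, digits, '-' and '.'
INSTANCE_RE = re.compile(r"Instance Name:\s*([A-Za-z0-9.-]+)")


def instance_name(body: str) -> Optional[str]:
    """Extract the backend hostname from one response body, if present."""
    match = INSTANCE_RE.search(body)
    return match.group(1) if match else None


def tally(bodies: Iterable[str]) -> Counter:
    """Count how many responses each backend served, skipping unrecognised bodies."""
    return Counter(name for name in map(instance_name, bodies) if name)


def fetch_bodies(lb_ip: str, count: int = 20, timeout: float = 5.0) -> List[str]:
    """GET http://<lb_ip>/ repeatedly; urllib opens a fresh connection for every call."""
    bodies = []
    for _ in range(count):
        with urllib.request.urlopen(f"http://{lb_ip}/", timeout=timeout) as resp:
            bodies.append(resp.read().decode("utf-8", errors="replace"))
    return bodies
```

For example, tally(fetch_bodies("203.0.113.10")), substituting the address of lb-ipv4-1 for that placeholder, returns a Counter keyed by instance name; more than one key indicates that requests are being spread across the MIG.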

Why use /healthz as the health check request path? A dedicated health check endpoint is standard practice for modern web apps. The /healthz endpoint in our Flask app is designed to return HTTP 200 OK only when the application is fully running and ready to serve traffic. This tells the Load Balancer, “Yes, I am alive and healthy,” so it sends traffic only to ready instances.
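The repository's main.py is not reproduced here, but the readiness contract described above can be sketched with Python's standard library alone (http.server instead of Flask, so this is a stand-in, not the app's actual code). The READY event, start_server, and probe names are hypothetical: /healthz answers 503 until READY is set and 200 afterwards, which is the pass/fail signal the health check consumes.

```python
import socket
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional

READY = threading.Event()  # hypothetical flag: set it once start-up work has finished
GREETING = "Hello from the GCP Network Reliability Demo Backend! Instance Name: {}"


class DemoHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/healthz":
            # 200 only when ready; the health check treats anything else as a failure
            status, body = (200, "ok") if READY.is_set() else (503, "starting")
        elif self.path == "/":
            status, body = 200, GREETING.format(socket.gethostname())
        else:
            status, body = 404, "not found"
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format: str, *args: object) -> None:
        pass  # silence per-request logging, since health probes arrive frequently


def start_server(host: str = "0.0.0.0", port: int = 80) -> ThreadingHTTPServer:
    """Serve in a daemon thread; call .shutdown() and .server_close() to stop."""
    server = ThreadingHTTPServer((host, port), DemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def probe(url: str, timeout: float = 5.0) -> Optional[int]:
    """Return the HTTP status of a GET, or None on timeout or connection error."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # direct, no proxy
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # non-2xx responses still carry a status code
    except OSError:  # URLError, timeouts and refused connections are all OSErrors
        return None
```

On a VM you would call start_server() (port 80 needs root, which startup scripts run as) and set READY only after initialisation has finished; probe is handy for checking an instance by hand.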

Congratulations! You have successfully deployed a highly available, auto-healing web application on Google Cloud Platform. By combining the Global External Application Load Balancer with a Regional Managed Instance Group (MIG), you achieved a resilient architecture. Traffic enters through a single global IP address, and the health check and auto-healing features ensure that if any backend VM fails, the load balancer stops sending it traffic and the MIG replaces it automatically, establishing a strong foundation for cloud-native reliability.

Next Steps to Production

To enhance this setup for a real-world production environment, consider these steps:

- Implement HTTPS: Use a Target HTTPS proxy and Google-managed SSL certificates in your load balancer configuration to encrypt traffic.

- Enable Autoscaling: Configure the MIG to scale dynamically based on CPU utilization or HTTP load to handle unpredictable traffic spikes.

- Use GKE/Cloud Run: For more complex microservice deployments, migrate your application to Google Kubernetes Engine (GKE) or the serverless Cloud Run platform.
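As a rough intuition for the CPU-based autoscaling suggested above, a proportional rule resizes the group so that average utilization moves back toward the target. This is a simplified sketch, not GCP's exact algorithm (the real autoscaler adds logic such as a stabilization period before scaling in); the function name, the integer-percentage inputs, and max_size=10 are hypothetical, while min_size=2 matches the minimum set on the MIG earlier.

```python
def recommended_size(
    current_size: int,
    avg_cpu_pct: int,
    target_cpu_pct: int,
    min_size: int = 2,
    max_size: int = 10,
) -> int:
    """Proportional rule: size that brings average CPU back to target, clamped to limits."""
    if not 0 < target_cpu_pct <= 100:
        raise ValueError("target_cpu_pct must be in (0, 100]")
    # Integer ceiling division avoids floating-point rounding at exact boundaries
    needed = -(-current_size * avg_cpu_pct // target_cpu_pct)
    return max(min_size, min(max_size, needed))


if __name__ == "__main__":
    print(recommended_size(2, 90, 60))  # 3: two VMs at 90% need a third to reach 60%
    print(recommended_size(4, 15, 60))  # 2: the rule asks for 1, clamped to min_size
```

Keeping min_size at 2 preserves the multi-zone redundancy even when traffic is low, so scaling in never reintroduces a single point of failure.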

References

Source Credit: https://medium.com/google-cloud/cloud-native-reliability-101-building-an-auto-healing-web-app-on-gcp-with-migs-and-a-global-load-f71fa2c21d6c