Welcome to the November 1–15, 2025 edition of Google Cloud Platform Technology Nuggets. The nuggets are also available on YouTube.

AI and Machine Learning

The partnership between Hugging Face and Google Cloud has deepened with the goal of improving the developer experience with open AI models. The focus is on three key areas:

- Reducing model and dataset download times for Hugging Face repositories through caching on Google Cloud via Vertex AI and Google Kubernetes Engine.

- Introducing native support for TPUs for all open models on Hugging Face.

- A safer experience through integration of Google Cloud’s built-in security protocols into Hugging Face models deployed via Vertex AI.

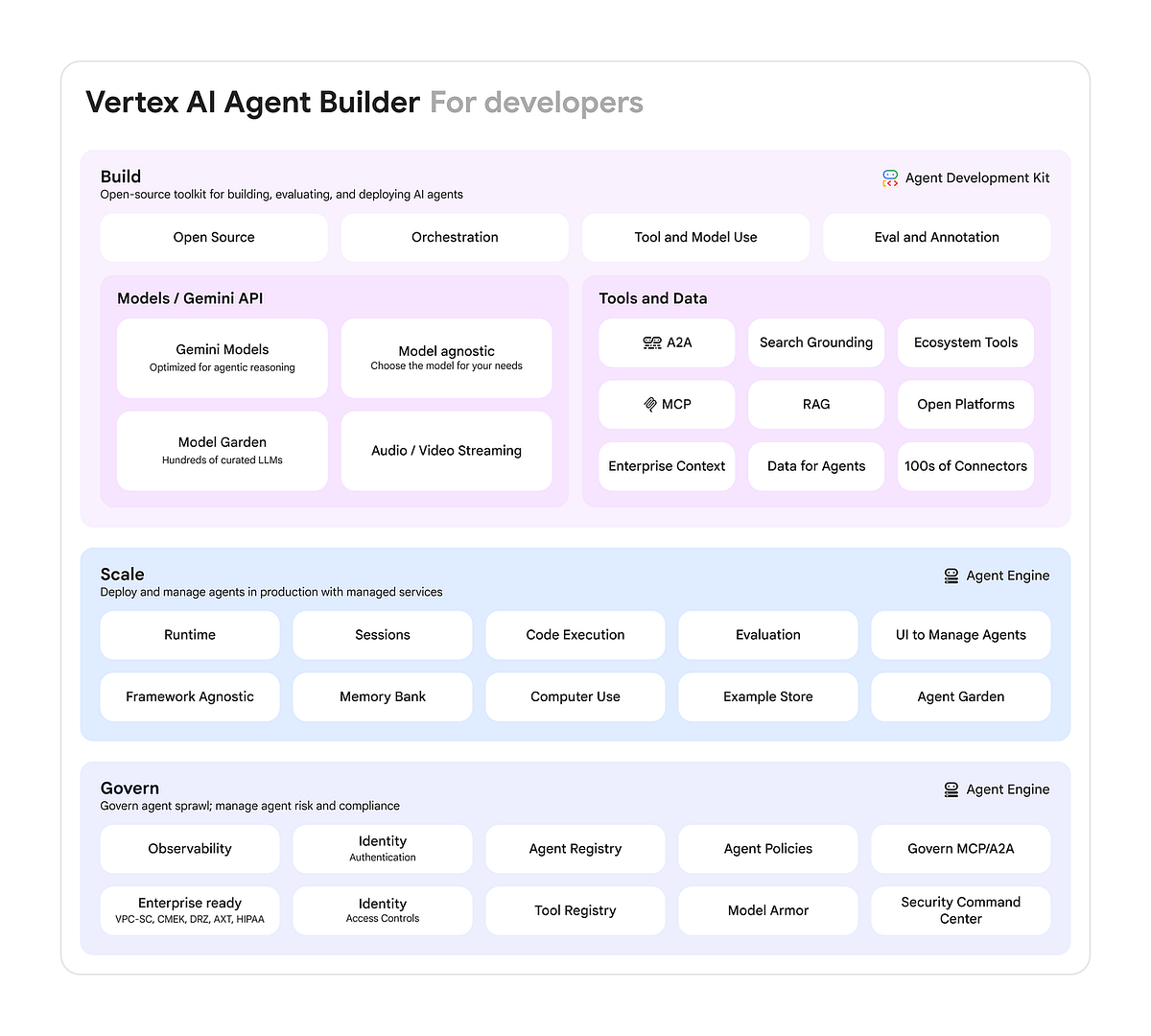

Vertex AI Agent Builder, which helps in developing, scaling, and governing AI agents, has received several key updates:

- Agent Development Kit (ADK) now supports more languages and single-command deployment.

- Vertex AI Agent Engine (AE) now has new observability, tracing, and evaluation tools.

- Stronger focus on security through new features like native agent identities and enhanced security safeguards, including integrations with Security Command Center.

When it comes to designing online shopping experiences with conversational AI agents, which design and user experience best practices should you follow? Check out the blog post that highlights seven key ways conversational AI agents can significantly improve e-commerce, moving beyond rigid keyword searches to smarter, more natural, and personalized interactions. The article focuses on essential design principles, including handling user intent, providing clear visuals, offering product comparisons, and building user trust through transparency and graceful error handling. The best part: the article comes with a downloadable component library on Figma to help teams quickly implement these conversational commerce designs.

Gemini Code Assist on GitHub has gained a new capability designed to improve AI-powered code reviews: a persistent memory that lets Gemini Code Assist dynamically adapt to a team’s coding standards and style by securely storing rules learned from pull request (PR) comments and feedback. It operates on three pillars: learning from interactions once a PR is merged, inferring and storing generalized rules from those interactions, and applying the stored rules to future reviews, both to guide its initial analysis and to filter its own suggestions for greater consistency. Check out the blog post for more details.
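To make the three pillars concrete, here is a toy model in Python. It is not Gemini Code Assist’s implementation or API: the `ReviewMemory` and `Suggestion` classes, the (topic, style) representation of feedback, and the `min_support` threshold are all hypothetical, and the real feature infers rules with an LLM rather than by counting.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Suggestion:
    topic: str  # e.g. "string-formatting" (hypothetical taxonomy)
    style: str  # the style this suggestion would push, e.g. "f-string"
    text: str


@dataclass
class ReviewMemory:
    min_support: int = 2  # merged-PR signals needed before a rule is stored
    _votes: Counter = field(default_factory=Counter)
    rules: dict[str, str] = field(default_factory=dict)

    def learn_from_merged_pr(self, feedback: list[tuple[str, str]]) -> None:
        """Pillars 1 and 2: record (topic, preferred_style) signals and generalize them into rules."""
        for topic, style in feedback:
            self._votes[(topic, style)] += 1
            if self._votes[(topic, style)] >= self.min_support:
                self.rules[topic] = style

    def review(self, suggestions: list[Suggestion]) -> list[Suggestion]:
        """Pillar 3: keep only suggestions that agree with the stored rules."""
        return [
            s for s in suggestions
            if s.topic not in self.rules or self.rules[s.topic] == s.style
        ]


memory = ReviewMemory()
memory.learn_from_merged_pr([("string-formatting", "f-string")])
memory.learn_from_merged_pr([("string-formatting", "f-string")])
kept = memory.review([
    Suggestion("string-formatting", "str.format", "Use str.format() here"),
    Suggestion("naming", "snake_case", "Rename getUser to get_user"),
])
print([s.text for s in kept])  # ['Rename getUser to get_user']
```

The conflicting formatting suggestion is filtered out because two merged PRs established a team preference, while suggestions on topics with no learned rule pass through unchanged.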

Identity and Security

Google Security Operations has introduced Emerging Threats Center, a new capability that aims to drastically accelerate how organizations detect and respond to major cyber vulnerabilities and critical threat campaigns. Powered by Gemini and an advanced detection-engineering agent, the system automatically ingests threat intelligence from sources such as Mandiant and VirusTotal to generate representative events, assess current detection coverage, and close detection gaps. Check out the blog post for more details.

Google Cloud has announced the Google Unified Security Recommended program, which has a dual purpose: expanding strategic partnerships and offering customers enhanced, interoperable security solutions. The solutions are planned to be available through Google Cloud Marketplace, and the inaugural partners include CrowdStrike, Fortinet, and Wiz, each offering specific integrations for areas such as endpoint protection, network protection, and multi-cloud CNAPP. The program also looks to include AI initiatives, such as the Model Context Protocol (MCP), that could help integrate and use partner tools. Check out the blog post for more details.

The first Cloud CISO Perspectives for November 2025 focuses on how threat actors are advancing their misuse of AI tools. Key findings detail the use of large language models (LLMs) to dynamically generate malicious commands, instances of threat actors using social engineering pretexts to bypass AI safeguards, and the maturing underground cybercrime marketplace for illicit AI tools.

Data Analytics

Data Engineering Agent in BigQuery, a new Gemini-powered feature designed to automate complex and repetitive data engineering tasks, is now available in preview. Driven by natural language prompts, the agent handles pipeline creation and maintenance, allowing engineers to focus on best practices. Its capabilities include pipeline development, data preparation, troubleshooting, and migrations. Check out the blog post.

There are several other BigQuery updates in this edition. First up is the public preview of BigQuery-managed AI functions, which integrate generative AI capabilities directly into SQL queries. The new functions, AI.IF (semantic filtering), AI.CLASSIFY (data categorization), and AI.SCORE (ranking based on natural language criteria), help data practitioners gain nuanced insights from unstructured data such as text and images without the complexities of traditional large language model (LLM) integration, such as data movement or extensive prompt tuning. Check out the blog post.
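As a rough sketch of how the three functions might combine in one query, the Python helper below only assembles the SQL text. The argument shapes (a `(prompt, column)` tuple for AI.IF and AI.SCORE, a `categories =>` list for AI.CLASSIFY, and a `connection_id =>` argument) follow my reading of the preview documentation and should be checked against the current BigQuery reference; the table, column, and connection names are hypothetical.

```python
def build_review_triage_sql(table: str, connection_id: str) -> str:
    """Return a query that filters, classifies, and scores reviews with BigQuery AI functions.

    Assumption: function argument shapes as in the preview docs; verify before use.
    """
    conn = f"connection_id => '{connection_id}'"
    return f"""
SELECT
  review_text,
  AI.CLASSIFY(review_text, categories => ['shipping', 'quality', 'price'], {conn}) AS category,
  AI.SCORE(('Rate from 1 to 10 how frustrated this customer is: ', review_text), {conn}) AS frustration
FROM `{table}`
WHERE AI.IF(('This review describes a problem with the product: ', review_text), {conn})
ORDER BY frustration DESC
LIMIT 20
""".strip()


# Hypothetical project, dataset, table, and connection names.
sql = build_review_triage_sql("my-project.my_dataset.product_reviews", "us.my_connection")
print(sql)
```

To run it, you could pass the string to `google.cloud.bigquery.Client().query(...)` from the BigQuery Python client library. AI.IF narrows the rows semantically, AI.CLASSIFY buckets them, and AI.SCORE orders them, all without moving data out of BigQuery.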

If you are interested in the evolution of BigQuery’s native vector search, first introduced in 2024, check out this blog post. It explains how embeddings power vector search and contrasts the complex, multi-step process of building vector search before native integration with the current serverless, simplified approach in BigQuery, which eliminates the need for external vector databases and infrastructure management.
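The core idea behind vector search is to rank stored embeddings by their similarity to a query embedding. The brute-force sketch below does exactly that with cosine similarity over hand-made 3-dimensional vectors (real embeddings come from a model and have hundreds of dimensions; the product names and values here are made up). BigQuery’s native `VECTOR_SEARCH` function performs this ranking inside the warehouse and can use a vector index for approximate search, so you no longer manage this loop or a separate database yourself.

```python
from __future__ import annotations

import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], corpus: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact (brute-force) nearest neighbours: score every vector, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings" for three products.
corpus = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "coffee grinder": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.15, 0.0], corpus)
print([doc_id for doc_id, _ in results])  # ['running shoes', 'trail sneakers']
```

Brute force is exact but scans every row; an index trades a little recall for much less work on large tables, which is one of the pieces BigQuery now manages for you.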

Networking

When it comes to running AI workloads at scale on Google Cloud, networking plays a vital role, though it often works behind the scenes. This blog post highlights seven ways networking powers AI workloads on Google Cloud, including high-speed GPU-to-GPU communication, private connectivity to AI workloads, and more.

Databases

Waze modernized its backend infrastructure to handle massive volumes of dynamic, real-time user session data. Key to its modern architecture is a centralized Session Server backed by Memorystore for Redis Cluster. This fully managed service played a key role in handling over 1 million MGET commands per second with low latency. Check out the blog post for more details.
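The value of MGET is batching: one round trip fetches many keys instead of one GET per key. The sketch below shows that pattern for session lookups. The `session:<id>` key scheme and JSON encoding are assumptions, not Waze’s design, and `InMemoryStore` is a stand-in so the example runs without a server; a redis-py `Redis` client exposes a compatible `mget(keys)` method.

```python
from __future__ import annotations

import json
from typing import Protocol


class SupportsMget(Protocol):
    def mget(self, keys: list[str]) -> list[bytes | None]: ...


def fetch_sessions(client: SupportsMget, session_ids: list[str]) -> dict[str, dict | None]:
    """Fetch many sessions in a single MGET round trip instead of one GET per key."""
    keys = [f"session:{sid}" for sid in session_ids]  # hypothetical key scheme
    raw_values = client.mget(keys)  # values come back in the same order as keys
    return {
        sid: json.loads(raw) if raw is not None else None  # None = missing/expired session
        for sid, raw in zip(session_ids, raw_values)
    }


class InMemoryStore:
    """Stand-in for a Redis client so the sketch runs without a server."""

    def __init__(self, data: dict[str, bytes]):
        self.data = data

    def mget(self, keys: list[str]) -> list[bytes | None]:
        return [self.data.get(k) for k in keys]


store = InMemoryStore({"session:a": b'{"route": "home"}'})
sessions = fetch_sessions(store, ["a", "b"])
print(sessions)  # {'a': {'route': 'home'}, 'b': None}
```

One caveat for cluster mode: Redis Cluster rejects a multi-key command whose keys hash to different slots (a CROSSSLOT error), so keys either need a shared hash tag such as `session:{user42}:...` or the client must split the batch by slot; redis-py’s `RedisCluster` offers `mget_nonatomic` for the latter. This sketch doesn’t assume which approach Waze takes.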

Containers and Kubernetes

Kubernetes 1.33 brings control-plane minor-version rollback to cluster management. The key to a safe rollback is a two-step upgrade process that uses “emulated versions.” First, the binaries are upgraded while the old emulated version is kept, which allows safe validation and a potential rollback; then, after validation, the upgrade is finalized by bumping the emulated version to enable new features. Check out the blog post, which also highlights support for skip-version upgrades and improvements to component health and leader election.
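The two-step process can be modeled as a small state machine over two fields: the version of the running binaries and the version they emulate. This Python sketch only models the state transitions and the rollback rule they imply; it is not Kubernetes code, and the class and method names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ControlPlane:
    binary_version: tuple[int, int]    # (major, minor) of the running binaries
    emulated_version: tuple[int, int]  # behaviour/API surface actually exposed

    def upgrade_binaries(self, new_version: tuple[int, int]) -> None:
        """Step 1: run new binaries while still emulating the old minor version."""
        self.binary_version = new_version  # emulated_version deliberately unchanged

    def can_roll_back(self) -> bool:
        """Rollback is safe only while no new-version behaviour has been enabled."""
        return self.emulated_version < self.binary_version

    def roll_back(self) -> None:
        if not self.can_roll_back():
            raise RuntimeError("emulated version already bumped; rollback is no longer safe")
        self.binary_version = self.emulated_version

    def finalize(self) -> None:
        """Step 2: after validation, bump the emulated version to enable new features."""
        self.emulated_version = self.binary_version


cp = ControlPlane(binary_version=(1, 32), emulated_version=(1, 32))
cp.upgrade_binaries((1, 33))
print(cp.can_roll_back())  # True: still emulating 1.32
cp.roll_back()
print(cp.binary_version)   # (1, 32)
cp.upgrade_binaries((1, 33))
cp.finalize()
print(cp.can_roll_back())  # False: 1.33 features are now live
```

Separating "new code is running" from "new behaviour is exposed" is what makes the validation window safe: until `finalize`, nothing persisted depends on the new version.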

Gemini CLI is fast gaining mindshare among terminal-based AI agents. Key to extending Gemini CLI are Extensions, which let developers package functionality that can be invoked as a command or via natural language from inside Gemini CLI. How about working with your Kubernetes clusters and applications right from inside Gemini CLI? This is enabled by the GKE Gemini CLI extension, available as open source, which lets developers and operators use natural language prompts and specific slash commands to complete complex GKE workflows. Check it out.

At KubeCon 2025, several Google Kubernetes Engine (GKE) announcements focused on optimizing the platform for artificial intelligence (AI) workloads. Check out a summary that covers Agent Sandbox for securing non-deterministic AI agents, support for 130,000-node clusters, and the introduction of GKE Inference Gateway and GKE Pod Snapshots to improve performance and drastically reduce startup latency for large language model (LLM) serving.

Speaking of Agent Sandbox, it is a new Kubernetes primitive built collaboratively with the Kubernetes community and designed specifically to provide the security, performance, and scale needed for the next generation of agentic AI workloads, which involve agent code execution and computer use. Agent Sandbox provides strong isolation at scale and is built on gVisor, with additional support for Kata Containers. Check out the blog post for more details.
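Agent Sandbox defines its own Kubernetes resources, whose schema isn’t covered in this issue, so rather than guess at those fields, the sketch below shows the isolation mechanism it builds on: a Pod that opts into a sandboxed runtime through `runtimeClassName`. On GKE Sandbox node pools the gVisor RuntimeClass is named `gvisor`; a Kata Containers class name depends on how Kata is installed, so it is a parameter. The pod name, labels, and image are hypothetical.

```python
import json


def sandboxed_pod(name: str, image: str, runtime_class: str = "gvisor") -> dict:
    """Build a Pod manifest that runs untrusted agent code under a sandboxed runtime."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "agent-code-runner"}},
        "spec": {
            # Selects the sandboxed container runtime (gVisor on GKE Sandbox node pools).
            "runtimeClassName": runtime_class,
            # Agent-generated code gets no Kubernetes API credentials.
            "automountServiceAccountToken": False,
            "containers": [
                {
                    "name": "runner",
                    "image": image,
                    "securityContext": {"allowPrivilegeEscalation": False},
                }
            ],
        },
    }


manifest = sandboxed_pod("agent-run-1", "us-docker.pkg.dev/my-project/agents/runner:latest")
print(json.dumps(manifest, indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`, since kubectl accepts JSON manifests as well as YAML.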

The partnership between Google Cloud and Anyscale to advance distributed AI and machine learning (ML) workloads using the Ray compute engine has produced several enhancements:

- Improved scheduling and scaling of Ray applications on GKE.

- Ray label selectors for flexible hardware targeting and fallback strategies.

- A more native experience for Cloud TPUs on GKE, via a new Ray TPU Library that automatically manages hardware topology and an integration of label-based scheduling with GKE custom compute classes for efficient TPU procurement and utilization.
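The label-selector-with-fallback idea can be illustrated with a toy scheduler: try the preferred selector first and, if no node matches, move on to the next one. This is plain Python, not Ray’s API; the label keys, values, and node names are hypothetical, and Ray’s actual selector and fallback options are documented in the Ray docs.

```python
from __future__ import annotations


def matches(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """A node matches when every key/value in the selector appears in its labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def pick_nodes(nodes: dict[str, dict[str, str]], selectors: list[dict[str, str]]) -> list[str]:
    """Return nodes for the first selector with any match (preferred first, then fallbacks)."""
    for selector in selectors:
        chosen = [name for name, labels in nodes.items() if matches(labels, selector)]
        if chosen:
            return chosen
    return []  # nothing matched any selector


nodes = {
    "node-a": {"accelerator": "tpu-v6e", "market": "spot"},
    "node-b": {"accelerator": "tpu-v5e", "market": "on-demand"},
    "node-c": {"accelerator": "tpu-v5e", "market": "spot"},
}
preferences = [
    {"accelerator": "tpu-v6e", "market": "on-demand"},  # preferred, but unavailable here
    {"accelerator": "tpu-v5e"},                          # fallback
]
print(pick_nodes(nodes, preferences))  # ['node-b', 'node-c']
```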

Business Intelligence

Looker Conversational Analytics, which lets users query data using natural language, is now generally available (GA). Conversational Analytics combines the power of Google’s Gemini models and agentic frameworks with Looker’s trusted semantic layer, bringing something close to Google Search-like simplicity to business intelligence. Key new features include the ability to analyze data across multiple domains (up to five Looker Explores), the option to share agents with colleagues, and, importantly, transparency through explanations of how results are calculated. Check out the blog post.

Developers and Practitioners

If you have been feeling overwhelmed by the constant news around generative AI and agentic AI but still want to get started with agent development, the task is simpler than you think. The blog post “Your First AI Application is Easier Than You Think” helps you take your first steps toward understanding what it takes to build these applications, starting with a carefully chosen set of codelabs that cover the core building blocks of an agent, i.e. the model (Gemini), external tools (Google Search, etc.), and more. Check out the blog post.
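At its core, the model-plus-tools pattern is a loop: the model either requests a tool call or returns a final answer, and each tool result is fed back to the model. The sketch below shows that loop with a scripted `fake_model` standing in for Gemini and a hypothetical `search` tool standing in for Google Search; ADK runs this loop for you, so treat this purely as an illustration of the idea.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolCall:
    name: str
    argument: str


def fake_model(question: str, observations: list[str]) -> ToolCall | str:
    """Stand-in for an LLM such as Gemini: asks for a tool first, then answers."""
    if not observations:
        return ToolCall(name="search", argument=question)
    return f"Based on search: {observations[-1]}"


def search(query: str) -> str:
    """Hypothetical tool; a real agent might call Google Search here."""
    return f"top result for '{query}'"


def run_agent(question: str, model: Callable, tools: dict[str, Callable[[str], str]], max_steps: int = 3) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        decision = model(question, observations)
        if isinstance(decision, str):  # the model produced a final answer
            return decision
        observations.append(tools[decision.name](decision.argument))  # run the requested tool
    return "Gave up after too many steps."


answer = run_agent("What is Cloud Run?", fake_model, {"search": search})
print(answer)  # Based on search: top result for 'What is Cloud Run?'
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools without ever answering.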

Once you’ve worked through the introduction above to writing your first agent, why not dive deeper and build a full agent workforce? Check out three codelabs that help you build your first agent, empower it with tools, and then build a team of agents that accomplishes a task.

This issue is full of agent development. With Agent Development Kit (ADK), you can not only use the framework in the programming language of your choice (Python, Java, Go) but also get support for simple and multi-agent systems, as well as deterministic flows such as sequential execution, parallel execution, and more. Check out this deep-dive guide that explores designing multi-agent systems, the various protocols that help connect them, and more.
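ADK ships workflow agents (such as `SequentialAgent` and `ParallelAgent`) for deterministic flows. Without reproducing ADK’s API, the plain `asyncio` sketch below shows the difference between the two patterns: sequential steps each see the previous step’s output in a shared state dict, while parallel steps run concurrently. The agent functions are hypothetical placeholders for model calls.

```python
import asyncio


async def researcher(state: dict) -> None:
    await asyncio.sleep(0.01)  # simulate a model call
    state["notes"] = f"notes on {state['topic']}"


async def writer(state: dict) -> None:
    state["draft"] = f"draft using {state['notes']}"  # depends on researcher's output


async def fact_checker(state: dict) -> None:
    await asyncio.sleep(0.01)
    state["checked"] = True


async def style_checker(state: dict) -> None:
    await asyncio.sleep(0.01)
    state["styled"] = True


async def run_sequential(agents, state: dict) -> None:
    for agent in agents:  # each step sees what the previous one wrote
        await agent(state)


async def run_parallel(agents, state: dict) -> None:
    await asyncio.gather(*(agent(state) for agent in agents))  # independent steps run concurrently


async def pipeline(topic: str) -> dict:
    state = {"topic": topic}
    await run_sequential([researcher, writer], state)
    await run_parallel([fact_checker, style_checker], state)
    return state


result = asyncio.run(pipeline("vector search"))
print(result)
```

Parallel steps write to distinct keys here, which avoids one branch overwriting another’s result; the same discipline is worth keeping in real multi-agent designs.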

If you have been developing AI agents for a while, you eventually reach a crossroads: as you add tools and complexity to your agent, should you break it up into sub-agents, or test the limit of how many tools a single agent can handle safely and accurately? Read this essential blog post, which covers a decision table and use cases to help you weigh these options.

Sometimes the best option for building automation with AI is to use existing workflow automation services rather than building everything yourself. n8n is a popular cloud and open-source option for building AI automation, with hundreds of connectors to real-world services that keep your AI workflow automation grounded and relevant while working with your own data. If you are looking to host n8n yourself, one of the best options could be Google Cloud Run, the fully managed Google Cloud service for running containerized workloads on Google infrastructure, which comes with a generous free tier and a top-notch developer experience. Check out this guide to hosting n8n on Google Cloud Run. If you’d like a hands-on codelab that walks through the whole process, check out these step-by-step instructions, which include some Google Cloud credits to try it out.
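For a first look at self-hosting, a minimal deployment can be a single `gcloud run deploy` of the public n8n container image. The Python helper below only assembles that command; the service name and region are assumptions, and it relies on n8n listening on its default port 5678. The linked guide is the place to go for a production setup.

```python
from __future__ import annotations

import shlex


def n8n_deploy_command(service: str = "n8n", region: str = "us-central1") -> list[str]:
    """Assemble a gcloud command that deploys the public n8n image to Cloud Run."""
    return [
        "gcloud", "run", "deploy", service,
        "--image=docker.io/n8nio/n8n:latest",  # public n8n image on Docker Hub
        "--region", region,                     # assumed region
        "--port=5678",                          # n8n's default listening port
        "--allow-unauthenticated",              # n8n has its own login; tighten for production
    ]


cmd = n8n_deploy_command()
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would execute it. Note that this minimal command leaves n8n on its default SQLite storage inside the container, which Cloud Run does not persist across instances, so anything beyond a quick trial needs an external database such as PostgreSQL.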

If you have been using Google Kubernetes Engine (GKE) to host large language models (LLMs) for inference, a method for improving LLM performance on GKE should be of interest. It addresses the memory bottleneck of the Key-Value (KV) cache by using tiered storage to expand the cache beyond fast but limited NVIDIA GPU High Bandwidth Memory (HBM) to cheaper, larger tiers such as CPU RAM and local SSDs. The larger cache leads to a higher cache hit ratio and allows LLMs to handle much longer context lengths, improving metrics such as Time to First Token (TTFT) and throughput. Check out the blog post for more details.
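Some quick arithmetic shows why the KV cache becomes the bottleneck. Per token, the cache holds a key and a value vector for every layer and KV head, so its size is 2 × layers × KV heads × head dimension × bytes per value. The model shape below (roughly a Llama-3-8B-style configuration in 16-bit precision) and the free capacity per tier are assumptions for illustration, not figures from the blog post.

```python
def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int, bytes_per_value: int) -> int:
    """KV cache footprint of one token: a key and a value vector per layer per KV head."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


# Assumed model shape: 32 layers, 8 KV heads (grouped-query attention), head_dim 128, fp16/bf16.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128, bytes_per_value=2)
print(per_token)  # 131072 bytes, i.e. 128 KiB per token

context_tokens = 128_000
per_sequence_gib = per_token * context_tokens / 2**30
print(f"{per_sequence_gib:.1f} GiB for one {context_tokens:,}-token sequence")  # 15.6 GiB ...

# Assumed free capacity per tier after model weights and other overheads.
tiers_gib = {"GPU HBM": 40, "CPU RAM": 256, "local SSD": 1500}
for tier, capacity in tiers_gib.items():
    print(f"{tier}: ~{int(capacity // per_sequence_gib)} sequences")
# GPU HBM: ~2 sequences
# CPU RAM: ~16 sequences
# local SSD: ~96 sequences
```

Even with these modest assumptions, GPU HBM holds only a couple of long-context caches, while the CPU RAM and SSD tiers hold many more; that extra headroom is what drives the higher cache hit ratio described above.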

Write for Google Cloud Medium publication

If you would like to share your Google Cloud expertise with your fellow practitioners, consider becoming an author for the Google Cloud Medium publication. Reach out to me via comments and/or fill out this form and I’ll be happy to add you as a writer.

Stay in Touch

Have questions, comments, or other feedback on this newsletter? Please send Feedback.

If any of your peers are interested in receiving this newsletter, send them the Subscribe link.

Source Credit: https://medium.com/google-cloud/google-cloud-platform-technology-nuggets-november-1-15-2025-4110def8f2cd?source=rss----e52cf94d98af---4