The Whole Story… the Easy Way

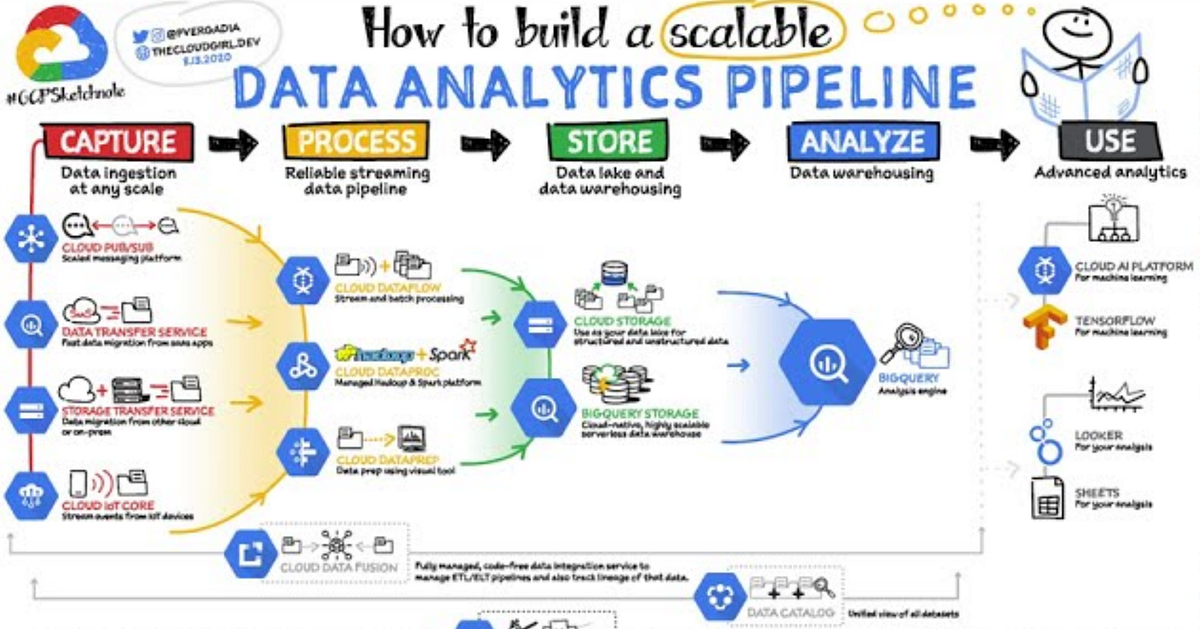

The complete picture, in Google Cloud, for travelling from raw data (any kind of data, batch and streaming) through transformations (ETL with Dataform, free) to BI with Looker.

Security, performance, a single source of truth, with all the best practices… the easy way.

Starting with the KING: BigQuery

BigQuery

BigQuery is serverless and highly scalable, and it is more than a simple data warehouse: it has distinct and unique characteristics, which this document outlines.

To begin, we will examine the types and sources of the data that BigQuery is capable of processing.

Batch and Streaming Ingestion

Fundamentally, there are two ways data can be ingested:

Batch Ingestion: This is for data that isn’t time-sensitive. You collect data over a period (e.g., hours, a day) and load it in one go.

- Use Case: Loading end-of-day sales reports from all retail stores into a central table for nightly analysis.

How-To (Common Methods):

- Load from Google Cloud Storage (GCS): This is the most common pattern. You upload your files (CSV, Parquet, Avro, JSON) to a GCS bucket and then run a load job from the BigQuery UI or the bq load command. This is fast, efficient, and cheap (loading data is free).

- BigQuery Data Transfer Service (DTS): This is the “set it and forget it” tool. It’s a fully managed service for scheduling recurring, automated data copies from sources like Amazon S3, Redshift, Google SaaS apps (like Google Ads), and other GCS buckets.

Streaming Ingestion: This is for real-time data that needs to be analyzed immediately. Data is sent event by event as it is generated, using the streaming APIs (the legacy tabledata.insertAll method or the newer BigQuery Storage Write API).

Business Use Case: Capturing website click-stream data to feed a real-time recommendation engine or for immediate fraud detection on financial transactions.

Attention: batch loading is free; streaming ingestion is not.

Handling “Difficult” Sources: Legacy Systems and IoT

Getting data from modern applications is one thing, but most businesses have critical data in other places.

Legacy Databases (e.g., on-prem Oracle, SQL Server, DB2, Informix): We can’t just connect them directly for real-time streaming. A common pattern is to use a Change Data Capture (CDC) tool. This tool watches the database transaction log for changes (inserts, updates) and streams those changes as events to a message queue like Google Cloud Pub/Sub.
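To make the CDC idea concrete, here is a toy Python model of what a downstream consumer does with those change events: it replays them, in log order, onto a keyed copy of the table.

The event shape (op, key, row) and the function name apply_cdc_events are hypothetical. Real CDC tools such as Datastream emit their own formats and handle ordering and schema changes for you.

```python
from typing import Any

# Hypothetical CDC event shape: {"op": "insert" | "update" | "delete", "key": ..., "row": {...}}.
Event = dict[str, Any]
Table = dict[str, dict[str, Any]]


def apply_cdc_events(table: Table, events: list[Event]) -> Table:
    """Replay change events, in log order, onto a copy of a keyed table."""
    result: Table = {key: dict(row) for key, row in table.items()}
    for event in events:
        op, key = event["op"], event["key"]
        if op == "insert":
            result[key] = dict(event["row"])
        elif op == "update":
            # Merge only the changed columns into the existing row.
            result.setdefault(key, {}).update(event["row"])
        elif op == "delete":
            result.pop(key, None)
        else:
            raise ValueError(f"unknown CDC operation: {op!r}")
    return result


events = [
    {"op": "insert", "key": "C1", "row": {"name": "Ada", "city": "Rome"}},
    {"op": "insert", "key": "C2", "row": {"name": "Bob", "city": "Milan"}},
    {"op": "update", "key": "C1", "row": {"city": "Turin"}},
    {"op": "delete", "key": "C2"},
]
print(apply_cdc_events({}, events))  # {'C1': {'name': 'Ada', 'city': 'Turin'}}
```

The input table is copied rather than mutated. That keeps each replay reproducible, which matters when the same log is applied more than once.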

Simple Ingestion: For many use cases, you can use a Pub/Sub BigQuery subscription. This is a dedicated subscription type that writes messages directly from Pub/Sub to a BigQuery table as they arrive, giving you near real-time ingestion with no code.

When to use Dataflow: If you need to transform, enrich, or aggregate the data before it lands in BigQuery, you would add Cloud Dataflow, a managed stream and batch processing service built on Apache Beam. You would read from Pub/Sub, perform your logic (e.g., join with another data stream, cleanse data, calculate a running average), and then write the processed results to BigQuery. We aren’t focusing on Dataflow in this guide, but it’s important to know where it fits.

When you don’t need Dataflow:

If you only need to validate, filter, or modify messages one at a time, you can use Pub/Sub Single Message Transforms (SMTs). This is a newer, integrated solution that applies transformations directly to the Pub/Sub subscription, without requiring external services for common operations.

If a message can be corrected, it is written to BigQuery. Otherwise, it can be forwarded to a dead-letter topic (DLT) for manual analysis. This combines automatic correction where possible with manual review where not.
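The validate, fix, or dead-letter flow described above can be sketched as follows. This is a toy Python model of the routing decision only, not the Pub/Sub SMT API.

The required fields, the lowercase normalization, and the Router class are all hypothetical. The two lists stand in for the BigQuery table and the dead-letter topic.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

# Hypothetical validation rule: these fields must be present and non-empty.
REQUIRED_FIELDS = ("user_id", "action")


def try_fix(message: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Return a corrected copy of the message, or None if it cannot be repaired."""
    fixed = dict(message)
    # Hypothetical correction: trim and lowercase the action value.
    if isinstance(fixed.get("action"), str):
        fixed["action"] = fixed["action"].strip().lower()
    if all(fixed.get(name) for name in REQUIRED_FIELDS):
        return fixed
    return None


@dataclass
class Router:
    """Stand-ins for the two destinations: the BigQuery table and the dead-letter topic."""
    bigquery_rows: list[dict[str, Any]] = field(default_factory=list)
    dead_letter_topic: list[dict[str, Any]] = field(default_factory=list)

    def handle(self, message: dict[str, Any]) -> None:
        fixed = try_fix(message)
        if fixed is not None:
            self.bigquery_rows.append(fixed)
        else:
            # Keep the original, unmodified message for manual analysis.
            self.dead_letter_topic.append(message)


router = Router()
for msg in ({"user_id": "U-1", "action": " CLICK "}, {"action": "click"}):
    router.handle(msg)
print(router.bigquery_rows)      # [{'user_id': 'U-1', 'action': 'click'}]
print(router.dead_letter_topic)  # [{'action': 'click'}]
```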

IoT Devices (e.g., factory sensors, logistics scanners): These devices can send massive volumes of small messages. They should send their data to Cloud Pub/Sub, which acts as a scalable buffer. This decouples the devices from the database. A streaming pipeline (often using Dataflow, as mentioned above, to calculate 1-minute averages) can then read from Pub/Sub and stream the results into BigQuery.
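Here is a minimal sketch of the 1-minute averaging step. It is written as plain Python over an in-memory list rather than as a Dataflow (Apache Beam) pipeline.

The reading format (sensor_id, timestamp, value) is an assumption. The code groups readings into fixed, non-overlapping 1-minute windows keyed by sensor.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical reading format: (sensor_id, event timestamp, value).
Reading = tuple[str, datetime, float]


def one_minute_averages(readings: list[Reading]) -> dict[tuple[str, datetime], float]:
    """Average values per sensor in fixed, non-overlapping 1-minute windows."""
    sums: dict[tuple[str, datetime], float] = defaultdict(float)
    counts: dict[tuple[str, datetime], int] = defaultdict(int)
    for sensor_id, ts, value in readings:
        window_start = ts.replace(second=0, microsecond=0)  # truncate to the minute
        sums[(sensor_id, window_start)] += value
        counts[(sensor_id, window_start)] += 1
    return {key: sums[key] / counts[key] for key in sums}


readings = [
    ("S1", datetime(2025, 11, 1, 10, 0, 5), 20.0),
    ("S1", datetime(2025, 11, 1, 10, 0, 50), 22.0),
    ("S1", datetime(2025, 11, 1, 10, 1, 10), 30.0),
    ("S2", datetime(2025, 11, 1, 10, 0, 30), 5.0),
]
for (sensor, window), avg in sorted(one_minute_averages(readings).items()):
    print(sensor, window.strftime("%H:%M"), avg)
```

A real streaming pipeline also has to deal with late and out-of-order events (Beam uses watermarks and triggers for this), which this sketch ignores.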

BigQuery Before the Operational Database

The important consideration is that modern analytical platforms like BigQuery are now primary data destinations, often receiving information before the traditional operational or accounting database (the Lambda or Kappa architecture approach).

The Key Shift

- Direct Ingestion: Streaming data (from websites, IoT sensors, cash registers) is sent immediately to a scalable buffer (Pub/Sub) and then written directly into BigQuery.

- Bypassing the Middleman: This bypasses the need to wait for the data to be written to a transactional database first, then extracted via a slow nightly batch job (ETL/ELT).

- Real-Time Analytics: This inversion makes data available for analysis and decision-making in seconds, contrasting sharply with the hours-long delay typical of traditional Data Warehouses (DWHs) that relied on nightly processing of operational database data.

No Ingestion at All: External and Federated Data

Sometimes, the most efficient way to query data is to not move it. BigQuery excels at this:

Federated Queries: This method lets you query live operational databases directly. BigQuery handles the connection “live.” This is perfect for operational reporting where you need to join your warehouse data with live production data without a full ETL process.

Supported Sources: This feature is for Google Cloud’s managed databases: Cloud SQL (MySQL & PostgreSQL), Cloud Spanner, and AlloyDB.

Location: The BigQuery dataset and the source database must be in the same location (either the same single region, or within the same multi-region geography like the US or EU).

External Tables and BigQuery Omni: This method lets you query files that live in object storage, including storage in other clouds, without loading them first.

Supported Sources: You can create tables in BigQuery that read data directly from files sitting in Google Cloud Storage (GCS), Amazon S3, or Azure Blob Storage.

Use Case: This is how you would query data residing in AWS or Azure without moving it. For example, you could query Parquet files in an S3 bucket as if they were a native BigQuery table. This is ideal for querying data lakes or ad-hoc analysis on log files.

Unifying Lake and Warehouse (BigLake): This is the natural evolution of External Tables. BigLake allows you to create tables that point to your open-format data files (Parquet, Avro, etc.) in GCS, S3, or Azure. The key difference is that BigLake allows you to apply fine-grained BigQuery security policies (like the Row-Level and Column-Level Security we discuss below) directly onto those external files.

Use Case: You get the low-cost storage flexibility of a data lake combined with the powerful, centralized security and governance of a data warehouse. It unifies your lake and warehouse.

BigQuery cannot natively connect to databases running outside Google Cloud (on-premises or in other clouds) for high-speed, scalable querying.

BigQuery’s power comes from reading data stored in its own optimized columnar format (Capacitor, stored on Google’s Colossus distributed file system). It cannot optimize queries against foreign systems where it doesn’t control the network or storage structure.

The Solution (Data Movement)

To use external data, you must move or stage it into Google Cloud:

- Batch Data: Use Cloud Storage (as an intermediary file stage) or the BigQuery Data Transfer Service (DTS) for scheduled loads.

- Streaming/Real-Time Data (CDC): Use Datastream to capture changes from databases (like Oracle or SQL Server) and stream them into BigQuery tables.

Practice Lab (do it with Sandbox)

Derive Insights from BigQuery Data

Is BigQuery Only for Relational Data? Handling JSON

This is a common misconception. BigQuery is not just a traditional relational database. It has first-class support for semi-structured data.

You can load JSON data in two primary ways:

- Standard Columns (with STRUCT and ARRAY): BigQuery can automatically detect a JSON structure and create a schema with nested (STRUCT) and repeated (ARRAY) fields. This is incredibly powerful for data that has a consistent structure.

- The JSON Data Type: For maximum flexibility, you can load an entire JSON object into a single column with the JSON type. You can then use dot notation to query paths within the JSON object directly in your SQL, offering a “schema-on-read” approach.

Example 1: Using STRUCT and ARRAY (Schema-on-Write)

This is the best-practice approach when your JSON data has a predictable structure, like order data from an e-commerce system.

Use Case: We’ll store customer orders. Each order has a main ID and an array of line items.

```sql
-- 1. Create the table with nested and repeated fields
CREATE TABLE my_dataset.orders (
  order_id STRING,
  customer STRUCT<customer_id STRING, email STRING>,
  line_items ARRAY<STRUCT<product_id STRING, quantity INT64, price FLOAT64>>,
  created_at TIMESTAMP
);

-- 2. Insert a sample order (this mimics loading from a JSON file)
INSERT INTO my_dataset.orders VALUES (
  'ORD-1001',
  ('CUST-A123', 'jane.doe@example.com'),
  [
    ('PROD-XYZ', 1, 29.99),
    ('PROD-ABC', 2, 9.50)
  ],
  CURRENT_TIMESTAMP()
);

-- 3. Query the nested data
-- This query "flattens" the line items to analyze individual products sold
SELECT
  order_id,
  customer.email,   -- Access nested field with dot notation
  item.product_id,  -- Access field from the unnested array
  item.quantity
FROM
  my_dataset.orders,
  UNNEST(line_items) AS item;  -- UNNEST expands the array into rows
```
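For readers more used to application code, here is what the comma join with UNNEST(line_items) does, sketched in Python over the same sample order.

The name price for the third line-item value is an assumption. As with the comma (cross) join in SQL, an order with an empty line_items array produces no output rows.

```python
from typing import Any

# Same sample order as above; "price" is an assumed name for the third line-item field.
orders: list[dict[str, Any]] = [
    {
        "order_id": "ORD-1001",
        "customer": {"customer_id": "CUST-A123", "email": "jane.doe@example.com"},
        "line_items": [
            {"product_id": "PROD-XYZ", "quantity": 1, "price": 29.99},
            {"product_id": "PROD-ABC", "quantity": 2, "price": 9.50},
        ],
    }
]


def flatten_line_items(orders: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """One output row per (order, line item), like FROM orders, UNNEST(line_items) AS item."""
    rows = []
    for order in orders:
        for item in order["line_items"]:  # empty array -> no rows, as with the comma join
            rows.append({
                "order_id": order["order_id"],
                "email": order["customer"]["email"],  # customer.email
                "product_id": item["product_id"],     # item.product_id
                "quantity": item["quantity"],         # item.quantity
            })
    return rows


for row in flatten_line_items(orders):
    print(row)
```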

Example 2: Using the JSON Data Type (Schema-on-Read)

This is ideal for ingesting event data, logs, or payloads where the schema might change frequently, and you don’t want the load job to fail.

Use Case: We’ll store raw click-stream events from a website.

```sql
-- 1. Create the table with a JSON type column
CREATE TABLE my_dataset.raw_events (
  event_id STRING,
  event_timestamp TIMESTAMP,
  event_payload JSON
);

-- 2. Insert a raw JSON payload
INSERT INTO my_dataset.raw_events VALUES (
  'EVT-888',
  CURRENT_TIMESTAMP(),
  JSON '{"user_id": "U-987", "page_url": "/products/new", "action": "click", "element_id": "buy-button"}'
);

-- 3. Query the JSON payload directly using dot notation
SELECT
  event_timestamp,
  event_payload.user_id,  -- Access JSON fields with dot notation
  event_payload.action,
  event_payload.page_url
FROM
  my_dataset.raw_events
WHERE
  -- You can filter on JSON fields too; extract a STRING first,
  -- because a JSON value can't be compared to a string directly
  JSON_VALUE(event_payload.action) = 'click';
```
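Schema-on-read means the structure is only interpreted at query time. Here is a small Python sketch of the same idea, assuming the click-stream payload from the example.

A missing key simply comes back as None, much as a JSON field access in BigQuery returns NULL instead of failing. The helper json_path is hypothetical.

```python
import json
from typing import Any


def json_path(payload: Any, *path: str) -> Any:
    """Walk keys into parsed JSON; return None (like SQL NULL) if any key is missing."""
    current = payload
    for key in path:
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


# The payload is stored as-is; no schema is declared up front.
raw = ('{"user_id": "U-987", "page_url": "/products/new", '
       '"action": "click", "element_id": "buy-button"}')
event = json.loads(raw)

print(json_path(event, "user_id"))   # U-987
print(json_path(event, "referrer"))  # None: the field is absent, but nothing fails
if json_path(event, "action") == "click":
    print("matched click event")
```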

Practice Lab

Working with JSON, Arrays, and Structs in BigQuery

Warehouse Optimization: Why No Indexes? (Partitioning and Clustering)

A user familiar with traditional databases (like SQL Server or Oracle) will immediately ask: “Where do I create my indexes?”

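The answer is: You don’t. BigQuery has no traditional B-tree indexes and doesn’t need them (the search and vector indexes it does offer serve specialized text and similarity lookups, not general query tuning).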

BigQuery’s architecture (based on Google’s Dremel technology) is designed for massive, parallel, full-table scans. It’s a columnar storage system, meaning it reads only the columns you select, not entire rows. It then uses a massive fleet of workers to scan these columns in parallel.

At petabyte-scale, traditional B-tree indexes (which are great for finding a specific row) become a bottleneck. They are slow to update during high-speed ingestion and are inefficient for the kinds of large-scale analytical queries BigQuery is built for.

You can leverage two primary methods for query optimization and cost savings, both of which work by physically pruning (skipping) data before the scan begins.

1. Partitioning (The “File Cabinet”)

This physically separates your data into different “drawers” based on a specific column, almost always a date or timestamp.

- Business Use Case: You have a 10-petabyte table of sales data going back 10 years. You almost never query all 10 years at once. 99% of your queries are for the last month or a specific quarter.

- How-To: You partition the table by a DATE or TIMESTAMP column.

```sql
CREATE TABLE my_dataset.daily_sales (
  sale_id STRING,
  sale_timestamp TIMESTAMP,
  amount FLOAT64
)
PARTITION BY DATE(sale_timestamp);  -- Creates a new partition for each day
```

- The Result: When you run a query with a filter on the partition column, BigQuery only scans the partitions that match the filter.

```sql
-- This query only scans 3 days of data, not 10 years.
-- Cost is minimal, and results are fast.
SELECT SUM(amount)
FROM my_dataset.daily_sales
WHERE DATE(sale_timestamp) BETWEEN '2025-11-01' AND '2025-11-03';
```
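The pruning effect can be modelled in a few lines of Python. This toy PartitionedTable is a hypothetical class, not BigQuery's storage layer.

It keeps one list of rows per day and never reads the partitions that fall outside the filter. That is why the scanned row count (and, under on-demand pricing, the bytes billed) drops with the date range.

```python
from collections import defaultdict
from datetime import date, datetime


class PartitionedTable:
    """Toy model of a table partitioned by DATE(sale_timestamp); not BigQuery's storage."""

    def __init__(self) -> None:
        self.partitions: dict[date, list[dict]] = defaultdict(list)

    def insert(self, sale_id: str, sale_timestamp: datetime, amount: float) -> None:
        # Each row goes into the partition ("drawer") for its calendar day.
        self.partitions[sale_timestamp.date()].append(
            {"sale_id": sale_id, "sale_timestamp": sale_timestamp, "amount": amount}
        )

    def sum_amount(self, start: date, end: date) -> tuple[float, int]:
        """Return (SUM(amount), rows scanned), reading only partitions in [start, end]."""
        total, scanned = 0.0, 0
        for day, rows in self.partitions.items():
            if not start <= day <= end:
                continue  # pruned: this partition is never read
            scanned += len(rows)
            total += sum(row["amount"] for row in rows)
        return total, scanned


table = PartitionedTable()
for day in range(1, 31):  # one sale per day in November 2025
    table.insert(f"S{day}", datetime(2025, 11, day, 12), 10.0)
print(table.sum_amount(date(2025, 11, 1), date(2025, 11, 3)))  # (30.0, 3)
```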

2. Clustering (The “Sorted Folders” in the Drawer)

Clustering physically sorts the data within each partition based on one or more columns.

- Business Use Case: Within your daily sales partitions, you frequently filter or join by customer_id and region.

- How-To: You add the CLUSTER BY clause to your table definition.

```sql
CREATE TABLE my_dataset.optimized_sales (
  sale_id STRING,
  sale_timestamp TIMESTAMP,
  customer_id STRING,
  region STRING,
  amount FLOAT64
)
PARTITION BY DATE(sale_timestamp)
CLUSTER BY region, customer_id;  -- Sorts data by region, then by customer
```

- The Result: When you filter on a clustered column, BigQuery knows the min/max values in each underlying storage block. It can skip scanning entire blocks that don’t contain the data you’re looking for.

```sql
-- 1. PARTITIONING prunes to only the '2025-11-01' partition.
-- 2. CLUSTERING skips all blocks within that partition
--    that do not contain the 'EU' region.
SELECT *
FROM my_dataset.optimized_sales
WHERE DATE(sale_timestamp) = '2025-11-01'
  AND region = 'EU';
```

Partitioning prunes by date (or integer range), and clustering prunes by common filter columns.
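Block skipping with min/max statistics can be sketched the same way. This is a toy Python model with hypothetical names throughout; BigQuery manages block sizes and metadata itself.

Rows are sorted by region and customer_id, then cut into fixed-size blocks. A filter on region reads only the blocks whose min/max range could contain the value.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """A storage block plus min/max metadata for the leading cluster column (region)."""
    rows: list[dict]

    def __post_init__(self) -> None:
        regions = [row["region"] for row in self.rows]
        self.min_region, self.max_region = min(regions), max(regions)


def build_blocks(rows: list[dict], block_size: int) -> list[Block]:
    # Clustering: sort by (region, customer_id), then cut the sorted rows into blocks.
    ordered = sorted(rows, key=lambda row: (row["region"], row["customer_id"]))
    return [Block(ordered[i:i + block_size]) for i in range(0, len(ordered), block_size)]


def scan_region(blocks: list[Block], region: str) -> tuple[list[dict], int]:
    """Return (matching rows, blocks read); blocks are skipped using metadata alone."""
    matches: list[dict] = []
    blocks_read = 0
    for block in blocks:
        if not block.min_region <= region <= block.max_region:
            continue  # the value cannot be in this block, so it is never read
        blocks_read += 1
        matches.extend(row for row in block.rows if row["region"] == region)
    return matches, blocks_read


rows = [{"region": r, "customer_id": f"C{i}"} for r in ("NA", "EU", "APAC") for i in range(4)]
blocks = build_blocks(rows, block_size=4)
eu_rows, read = scan_region(blocks, "EU")
print(len(eu_rows), "rows from", read, "of", len(blocks), "blocks")  # 4 rows from 1 of 3 blocks
```

Sorting is what makes the min/max ranges narrow. On unsorted data, most blocks would span every region and few could be skipped.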

Logical & Performance Layers: Views and Materialized Views

Beyond tables, you can create two other types of objects to query: Views and Materialized Views. They are critical for security, simplicity, and performance.

1. Standard Views (Logical Layer)

A Standard View is a saved query. It does not store any data. When you query the view, BigQuery re-runs the underlying query every single time.

Business Use Case:

- Simplicity: You have a complex 5-table join to get “customer lifetime value.” Instead of making analysts write this join, you save it as a view called v_customer_ltv. They can now just run SELECT * FROM v_customer_ltv.

- Security: You can use a view to expose only certain columns or rows. You give analysts permission to query the view (set up as an authorized view), but not the underlying sensitive tables.

- How-To (SQL Example):

```sql
-- Create a view that simplifies sales data for the EU team
CREATE VIEW my_dataset.v_sales_eu AS
SELECT s.sale_id, s.amount, c.customer_name, p.product_name
FROM my_dataset.sales AS s
JOIN my_dataset.customers AS c ON s.customer_id = c.customer_id
JOIN my_dataset.products AS p ON s.product_id = p.product_id
WHERE s.region = 'EU';
```

The Result: Once v_sales_eu is authorized on the dataset that holds the base tables (an authorized view), an analyst can query it without needing access to the base sales, customers, or products tables.

2. Materialized Views (Performance Layer)

A Materialized View (MV) is a “smart” view. It does store the pre-computed results of its query. BigQuery automatically refreshes this data in the background when the base tables change.

- Business Use Case: You have a Looker dashboard that shows “Total Sales by Day.” This query runs 1,000 times a day against your 10-petabyte sales table. This is slow and expensive.

- How-To: You create an MV that pre-calculates this aggregation.

```sql
-- Create an MV to accelerate our dashboard
CREATE MATERIALIZED VIEW my_dataset.mv_daily_sales_summary AS
SELECT
  DATE(sale_timestamp) AS sale_date,
  SUM(amount) AS total_sales,
  COUNT(sale_id) AS total_orders
FROM my_dataset.daily_sales  -- Our big, partitioned table
GROUP BY 1;
```

- The Result (Smart Query Routing): The next time an analyst (or Looker) runs a query against the base table that the MV can answer, such as SELECT DATE(sale_timestamp) AS sale_date, SUM(amount) AS total_sales FROM my_dataset.daily_sales WHERE DATE(sale_timestamp) > '2025-01-01' GROUP BY sale_date, BigQuery is smart enough not to scan the 10-petabyte table. Instead, it automatically rewrites the query to read from the tiny, pre-aggregated MV table.
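Conceptually, an MV is a small summary kept up to date as new rows arrive. The toy Python class below is hypothetical and does not reflect how BigQuery stores MVs.

It folds newly appended base rows into per-day totals. The dashboard query is then answered from that summary instead of rescanning every sale.

```python
from collections import defaultdict
from datetime import date, datetime


class DailySalesSummary:
    """Toy stand-in for mv_daily_sales_summary: per-day SUM(amount) and COUNT(sale_id)."""

    def __init__(self) -> None:
        self.total_sales: dict[date, float] = defaultdict(float)
        self.total_orders: dict[date, int] = defaultdict(int)

    def refresh(self, new_rows: list[dict]) -> None:
        # Incremental refresh: fold in only rows appended since the last refresh.
        for row in new_rows:
            day = row["sale_timestamp"].date()
            self.total_sales[day] += row["amount"]
            self.total_orders[day] += 1

    def sales_after(self, cutoff: date) -> dict[date, float]:
        # The dashboard query reads the small summary, not the base table.
        return {day: total for day, total in sorted(self.total_sales.items()) if day > cutoff}


mv = DailySalesSummary()
mv.refresh([
    {"sale_id": "A", "sale_timestamp": datetime(2025, 1, 1, 9), "amount": 5.0},
    {"sale_id": "B", "sale_timestamp": datetime(2025, 1, 2, 9), "amount": 7.0},
])
mv.refresh([{"sale_id": "C", "sale_timestamp": datetime(2025, 1, 2, 18), "amount": 3.0}])
print(mv.sales_after(date(2025, 1, 1)))  # {datetime.date(2025, 1, 2): 10.0}
```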

Key Limitations (The “Why Not Use MVs for Everything?”):

- Limited Query Logic: MVs are not for complex ETL. They must be simple aggregations. They do not support (or support only in limited forms) OUTER JOIN, UNION ALL, HAVING, analytic functions (like ROW_NUMBER()), or queries on external tables.

- Base Table Requirements: They work best on a single table or with simple INNER JOINs.

- Refresh: BigQuery manages the refresh, but it’s not instantaneous (it’s “near real-time”).

- Purpose: They are not for transformation; they are for accelerating common aggregation queries.

Metadata: Data Catalog vs. INFORMATION_SCHEMA

A key part of managing a warehouse is understanding its metadata. BigQuery offers two ways to do this, for two very different purposes.

Data Catalog (via Dataplex UI)

The main purpose of Data Catalog is Business Governance & Discovery.

- Data Catalog has an Organization-wide scope. It discovers and catalogs assets across all projects, including diverse services like BigQuery, Pub/Sub, Cloud Storage (GCS), and more.

- It’s used for finding data and governing data (e.g., applying Column-Level Security). It answers business questions like: “What data do we have about our customers?”

- Access is primarily via the Dataplex UI, a web interface designed for data stewards and analysts.

Key Use Case: A primary function is Applying Policy Tags for enforcing security. Users search for tables using a business tag like “PII” rather than a technical table name.

BigQuery INFORMATION_SCHEMA

The main purpose of INFORMATION_SCHEMA is Technical & Operational Introspection.

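- Its scope is a single BigQuery dataset or a single region (e.g., my_dataset.INFORMATION_SCHEMA.TABLES for a dataset, or the region-qualified INFORMATION_SCHEMA.JOBS views for job history). It only provides metadata about BigQuery resources.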

- It’s used for querying technical metadata and automating tasks. It answers technical questions like: “What is the data type of column X in table Y?”

Key Use Case: It’s used for writing SQL queries to list all tables in a dataset, check which tables are partitioned, or monitor job history programmatically.

Analogy: It acts like the Table of Contents and Index inside one specific book (a dataset).

So, you use Data Catalog to find and govern data from a business perspective (like applying a “Confidential” tag). You use INFORMATION_SCHEMA to programmatically query the technical details of a specific dataset (like listing tables or checking partition settings).

Simple and Powerful Data Access: Row and Column Security

Once data is in BigQuery, you must control who sees what. This is critical for compliance (like GDPR or HIPAA) and internal governance. BigQuery provides fine-grained controls at the column and row level.

Method 1: Column-Level Security

This method lets you restrict access to specific columns. The “how-to” is managed through Dataplex and IAM, not SQL.

Business Use Case: We have a table, company_dataset.employees, with columns employee_id, name, region, and salary.

- Analysts (e.g., analysts-group@example.com) should see employee_id, name, and region.

- HR (e.g., hr-group@example.com) should see all columns.

No one should see the salary column unless they are in the HR group.

Here is the simplest, most direct way to implement this.

Step 1: Create the Policy Tag (One-Time Setup)

- In the Google Cloud console, navigate to BigQuery and open Data Governance.

- Click Policy Tags (or Taxonomies) and start a new taxonomy.

- Name: HR_Data. Description: “Data classifications for sensitive employee information.”

- Click Create.

- Now, click the HR_Data taxonomy you just created.

- Click Create Policy Tag.

- Name: Highly_Confidential. Description: “For PII and sensitive financial data like salaries.”

- Click Create. You have now created the tag.

Step 2: Apply the Policy Tag in BigQuery

Find your company_dataset.employees table and click on it to open the schema. Click Edit Schema.

Find the salary column. In its “Policy Tags” field, click Add policy tag.

Select the HR_Data taxonomy and check the Highly_Confidential tag.

Click Select, then click Save on the schema editor.

The salary column is now officially tagged as Highly_Confidential across your entire Google Cloud organization.

Step 3: Set IAM Permissions

- Return to Policy Tags: Go back to Data Governance.

- Click Policy Tags and open the HR_Data taxonomy.

- Make sure Enforce access control is turned on for the taxonomy. Until it is, the tag only labels the column and does not restrict who can read it.

- Click the Highly_Confidential policy tag.

- Add Authorized Group:

- In the right-hand Permissions panel (you may need to expand it or click a gear icon), click Add principal.

- New principals: Enter the email of your authorized user or group (for example, hr-group@example.com).

- Role: Search for and select the Data Catalog Fine-Grained Reader role.

- Click Save.

This is a powerful, centralized way to govern data. You define “Highly_Confidential” once and apply it to hundreds of columns across your warehouse. If an auditor asks who can see salaries, you just show them the IAM policy for that one tag.
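The effect of the policy tag can be modelled as a check that runs before any column is read. In this toy Python sketch, the tag-to-column mapping and the principal strings are hypothetical.

It mirrors BigQuery's behavior of rejecting a query that references a protected column the caller cannot read. That is why analysts without access use SELECT * EXCEPT (salary) rather than SELECT *.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyTag:
    name: str
    fine_grained_readers: frozenset[str]  # principals granted Fine-Grained Reader on this tag


# Hypothetical setup mirroring the example: only HR may read the salary column.
HIGHLY_CONFIDENTIAL = PolicyTag("Highly_Confidential", frozenset({"group:hr-group@example.com"}))
COLUMN_TAGS = {"salary": HIGHLY_CONFIDENTIAL}


def select_columns(row: dict, columns: list[str], principals: set[str]) -> dict:
    """Return the requested columns, failing if any tagged column is not readable."""
    for column in columns:
        tag = COLUMN_TAGS.get(column)
        if tag is not None and not principals & tag.fine_grained_readers:
            raise PermissionError(f"access denied: column {column!r} has policy tag {tag.name}")
    return {column: row[column] for column in columns}


employee = {"employee_id": "E1", "name": "Ann", "region": "EU", "salary": 85000}
analysts = {"group:analysts-group@example.com"}
hr = {"group:hr-group@example.com"}

print(select_columns(employee, ["employee_id", "name", "region"], analysts))
print(select_columns(employee, ["salary"], hr))
try:
    select_columns(employee, list(employee), analysts)  # like SELECT * for an analyst
except PermissionError as err:
    print(err)
```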

Method 2: Row-Level Security (Filtering Rows)

This method filters which rows a user is allowed to see, even when they query the exact same table. The “how-to” is implemented directly on the table using SQL DDL.

Business Use Case: A regional sales manager for Europe should only see sales rows where the region column is ‘EU’. A manager for North America (‘NA’) should only see ‘NA’ rows.

How-To (SQL Example):

We have a table my_dataset.all_sales (sale_id STRING, amount FLOAT64, region STRING).

We create a Row Access Policy on this table. This policy acts as a “hidden” WHERE clause that is automatically applied for specific users.

```sql
-- Create the policy for European managers
CREATE ROW ACCESS POLICY eu_sales_filter
ON my_dataset.all_sales
GRANT TO ("group:sales-managers-eu@example.com")
FILTER USING (region = 'EU');

-- Create a separate policy for North American managers
CREATE ROW ACCESS POLICY na_sales_filter
ON my_dataset.all_sales
GRANT TO ("group:sales-managers-na@example.com")
FILTER USING (region = 'NA');
```

The Result:

A user in the sales-managers-eu@example.com group runs SELECT * FROM my_dataset.all_sales;

BigQuery automatically adds WHERE region = 'EU' to their query, so they only see European sales.

A user in the sales-managers-na@example.com group runs the exact same query and only sees North American sales.

A user who is not in either group (and has no other policy) will see zero rows.
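The combined behavior of several row access policies can be sketched in Python. A caller sees the union of the rows allowed by every policy granted to them, and nothing if no policy names them.

The RowAccessPolicy class is a hypothetical model of the two policies above, not a BigQuery API.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict


@dataclass(frozen=True)
class RowAccessPolicy:
    name: str
    grantees: frozenset[str]
    filter_using: Callable[[Row], bool]


# Hypothetical Python mirror of eu_sales_filter and na_sales_filter.
POLICIES = [
    RowAccessPolicy("eu_sales_filter", frozenset({"group:sales-managers-eu@example.com"}),
                    lambda row: row["region"] == "EU"),
    RowAccessPolicy("na_sales_filter", frozenset({"group:sales-managers-na@example.com"}),
                    lambda row: row["region"] == "NA"),
]


def visible_rows(rows: list[Row], principals: set[str]) -> list[Row]:
    """Union of rows allowed by every policy granted to the caller; empty if none apply."""
    granted = [policy for policy in POLICIES if principals & policy.grantees]
    return [row for row in rows if any(policy.filter_using(row) for policy in granted)]


all_sales = [
    {"sale_id": "1", "amount": 10.0, "region": "EU"},
    {"sale_id": "2", "amount": 20.0, "region": "NA"},
    {"sale_id": "3", "amount": 5.0, "region": "EU"},
]
print(visible_rows(all_sales, {"group:sales-managers-eu@example.com"}))  # sale_id 1 and 3
print(visible_rows(all_sales, {"user:someone@example.com"}))  # []
```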

Pay Attention:

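BigQuery Data Viewer: Grants basic table access. This is the standard read-only role that allows a user to read all data in a specified table or dataset (running queries also requires permission to create jobs, for example through the BigQuery Job User role).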

Data Catalog Fine-Grained Reader: Bypasses column-level restrictions. This is the special role that grants a user permission to read the unmasked, original data in columns tagged with a specific Policy Tag.

Source Credit: https://medium.com/google-cloud/the-gcp-data-pipeline-from-raw-ingestion-to-business-intelligence-bigquery-dataform-and-looker-9e5f109eb196?source=rss—-e52cf94d98af—4