High-performance computing and generative AI are no longer reserved for giant research labs. In 2025 any engineer, scientist, or start-up can stand up a full cluster in Google Cloud — without writing hundreds of shell scripts. Cluster Toolkit is the open-source kit that turns modular building blocks into ready-to-run HPC, AI, and ML environments. Below I explain how it works, why it matters, and share real-world scenarios that already save teams weeks of effort.

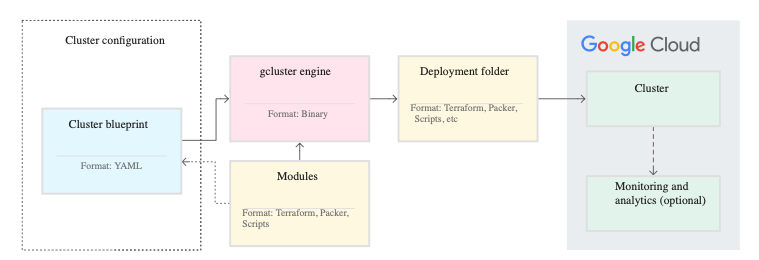

- Cluster constructor. You outline your desired architecture in a single YAML “blueprint.”

- Module catalog. Need compute nodes, networking, a file system, or a Slurm scheduler? Pick the bricks you need and stack them together.

- The `gcluster` engine. It reads your blueprint, assembles a self-contained “deployment folder” with Terraform or Packer, and prints the exact commands to launch the cluster.

- Deployment folder. A standalone directory that can create, or tear down, a cluster at any time.
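To make the blueprint idea concrete, here is a minimal sketch that writes a small Slurm blueprint to disk. The module paths and settings are recalled from the repository's `hpc-slurm` example, so treat them as assumptions and check them against your checkout. The file name, module IDs, region, and machine type are illustrative placeholders.

```shell
# Write an illustrative blueprint; nothing is deployed by this step.
# Module paths and settings are assumptions based on the repo's hpc-slurm example.
cat > my-blueprint.yaml <<'EOF'
blueprint_name: my-blueprint

vars:
  project_id: MY_PROJECT          # placeholder; can be overridden with --vars
  deployment_name: my-blueprint   # becomes the deployment folder name
  region: us-central1
  zone: us-central1-a

deployment_groups:
- group: primary
  modules:
  - id: network
    source: modules/network/vpc

  - id: homefs
    source: modules/file-system/filestore
    use: [network]
    settings:
      local_mount: /home

  - id: debug_nodeset
    source: community/modules/compute/schedmd-slurm-gcp-v6-nodeset
    use: [network]
    settings:
      node_count_dynamic_max: 4
      machine_type: n2-standard-2

  - id: debug_partition
    source: community/modules/compute/schedmd-slurm-gcp-v6-partition
    use: [debug_nodeset]
    settings:
      partition_name: debug
      is_default: true

  - id: slurm_login
    source: community/modules/scheduler/schedmd-slurm-gcp-v6-login
    use: [network]

  - id: slurm_controller
    source: community/modules/scheduler/schedmd-slurm-gcp-v6-controller
    use: [network, debug_partition, homefs, slurm_login]
EOF
```

Each module is one "brick": `use` wires a module to the outputs of another, which is how the controller learns about the network, the partition, the shared file system, and the login node.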

- Speed. A typical HPC cluster spins up in under ten minutes; a GPU-rich AI stack in roughly an hour.

- Open source. Adapt modules, write your own, or patch existing ones at will.

- Batteries included. Slurm, Batch, GKE, Filestore, Lustre, Parallelstore, Cloud Monitoring — all pre-integrated.

- Cost clarity. Automatic labels let you filter billing reports and see cluster spend instantly.

- Flexibility. Tweak a variable, regenerate the folder, redeploy — no vendor lock-in.
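The same labels that drive billing filters can also be used to list what a deployment created. The sketch below assumes the automatic label key is `ghpc_deployment`; confirm the key on your own resources before relying on it. The helper name `list_cluster_vms` is hypothetical.

```shell
# List the VMs that carry a given deployment label.
# Assumption: the Toolkit labels resources with ghpc_deployment=<deployment name>.
list_cluster_vms() {
  gcloud compute instances list \
    --filter="labels.ghpc_deployment=$1" \
    --format="table(name,zone.basename(),status)"
}

# Usage (requires an authenticated gcloud and a default project):
#   list_cluster_vms my-blueprint
```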

1. Prep your environment

Use Cloud Shell or a local workstation with gcloud, Terraform, Packer, Go, and Git. Enable the Compute Engine, Filestore, Cloud Storage, and Resource Manager APIs.

2. Clone the repo

git clone https://github.com/GoogleCloudPlatform/cluster-toolkit.git

cd cluster-toolkit

make # builds the gcluster binary

3. Write a blueprint

Start from an example like hpc-slurm.yaml or draft your own: define a name, variables, and module groups.

4. Generate the deployment folder

./gcluster create my-blueprint.yaml --vars project_id=MY_PROJECT

5. Deploy

./gcluster deploy my-blueprint

Confirm the plan; minutes later the cluster is live.

6. Run jobs & monitor

SSH to the login node, submit jobs, and watch metrics in Cloud Monitoring.

7. Clean up

./gcluster destroy my-blueprint --auto-approve
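The steps above can be strung together in one script. This is a sketch under a few assumptions: it runs from the `cluster-toolkit` directory after `make`, the project ID and file names are placeholders, and the `run`, `cluster_up`, and `cluster_down` helpers are my own naming. By default it only prints the commands so you can review them; set `DRY_RUN=0` to execute.

```shell
#!/bin/sh
# Sketch of the create/deploy/destroy workflow. Prints commands by default.
set -eu

PROJECT_ID="${PROJECT_ID:-MY_PROJECT}"        # placeholder project ID
BLUEPRINT="${BLUEPRINT:-my-blueprint.yaml}"   # blueprint written in step 3
DEPLOYMENT="${DEPLOYMENT:-my-blueprint}"      # deployment folder name
DRY_RUN="${DRY_RUN:-1}"                       # 1 = print only, 0 = execute

# Echo a command, and run it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

cluster_up() {
  # Step 1: enable the APIs named in the article.
  run gcloud services enable compute.googleapis.com file.googleapis.com \
    storage.googleapis.com cloudresourcemanager.googleapis.com \
    --project "$PROJECT_ID"
  # Step 4: generate the deployment folder.
  run ./gcluster create "$BLUEPRINT" --vars "project_id=$PROJECT_ID"
  # Step 5: deploy (gcluster asks you to confirm the plan).
  run ./gcluster deploy "$DEPLOYMENT"
}

cluster_down() {
  # Step 7: tear everything down without prompting.
  run ./gcluster destroy "$DEPLOYMENT" --auto-approve
}

cluster_up
# Call cluster_down when the jobs are finished.
```

Keeping the dry run as the default is a deliberate choice: the commands create billable resources, so it helps to see exactly what will run first.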

1. One-hour coastal storm simulation

- Scenario: A university group models micro-climate for a seaside city.

- Toolkit angle: The `serverless-batch-mpi` example deploys a Batch cluster on cost-efficient C-series VMs that auto-scale with MPI tasks.

- Outcome: Spot VMs cut compute costs by half; the cluster stood up faster than the researchers compiled the weather code.

2. Training a chatbot on A4 GPUs

- Scenario: A start-up fine-tunes a large Russian-language model across eight B200 GPUs.

- Toolkit angle: The `a4-highgpu-8g` blueprint wires up high-bandwidth gVNIC networking, Filestore storage, and a Slurm scheduler in a single shot.

- Outcome: Instead of juggling reservations and drivers, the team focused on optimizing the training pipeline.

3. Processing 10 000 genomic samples

- Scenario: Bioinformaticians launch thousands of short, CPU-heavy jobs.

- Toolkit angle: The `htc-slurm` blueprint is tuned for high throughput: it spins up many inexpensive N-series nodes and tears them down the moment they go idle.

- Outcome: Turnaround time dropped from days to hours; sequencing machines no longer sit idle.

4. Rendering an animated short film

- Scenario: An indie studio renders frames with Blender Cycles.

- Toolkit angle: A partition on H-class compute-optimized machines offers huge core counts and auto-scales overnight to save budget.

- Outcome: Peak demand hit 88 vCPUs per node, then collapsed to a single controller by morning, completely unattended.

- Check quotas first, especially for specialized machine families and Filestore.

- Mind the firewall. Enabling OS Login simplifies SSH access without juggling keys.

- Store Terraform state in versioned Cloud Storage for safe rollbacks.

- Label everything. Custom tags in the blueprint make billing and logging painless.

- Prototype locally. Use `gcluster create -l ERROR` to validate your YAML before spending a cent.
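Several of these tips map onto a few lines of blueprint and shell. The sketch below assumes the `terraform_backend_defaults` block and the `vars.labels` map behave as I recall from the Toolkit documentation. `BUCKET_NAME`, the label values, the `GCLUSTER` variable, and the helper names are illustrative.

```shell
# Blueprint fragment: remote Terraform state in Cloud Storage plus custom labels.
# BUCKET_NAME and the label values are placeholders.
cat > blueprint-fragment.yaml <<'EOF'
terraform_backend_defaults:
  type: gcs
  configuration:
    bucket: BUCKET_NAME

vars:
  labels:
    team: climate-lab
    cost_center: research
EOF

# Path to the gcluster binary; override if it lives elsewhere.
GCLUSTER="${GCLUSTER:-./gcluster}"

# Turn on object versioning so earlier state files stay recoverable.
enable_state_versioning() {
  gcloud storage buckets update "gs://$1" --versioning
}

# Generate the deployment folder with the validation level set to ERROR.
# No cloud resources are created by this step.
validate_blueprint() {
  "$GCLUSTER" create -l ERROR "$1"
}

# Usage:
#   enable_state_versioning BUCKET_NAME
#   validate_blueprint my-blueprint.yaml
```

Merge the fragment's top-level keys into your own blueprint rather than passing it to `gcluster` on its own; it has no modules.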

- The deployment folder’s name becomes a billing label. One team called their cluster “potato,” resulting in a finance report where most of the spend was “Potato-Compute.”

- A minimal Slurm cluster can finish deploying faster than your IDE installs its extensions.

- Setting `exclusive: false` keeps nodes alive after jobs finish: perfect for debugging, but don’t forget to shut them down later!

Cluster Toolkit turns the complexity of HPC, AI, and ML orchestration into a straight path: draft a blueprint, generate a folder, hit “deploy.” In 2025 it’s arguably the quickest route from an idea for a compute cluster to real results in the cloud. Give it a try and an hour from now you could be running Slurm — or GKE — on infrastructure you fully control.


Source Credit: https://medium.com/google-cloud/google-cloud-cluster-toolkit-2025-the-definitive-guide-for-slurm-based-workloads-ad60f515cda3?source=rss----e52cf94d98af---4