16 hours ago

… because you cannot generalize best practices across use cases!

The field of Generative AI is rapidly evolving, making it difficult to find a one-size-fits-all set of best practices. Every industry, architecture, and use case introduces unique constraints around latency, cost, and data integrity.

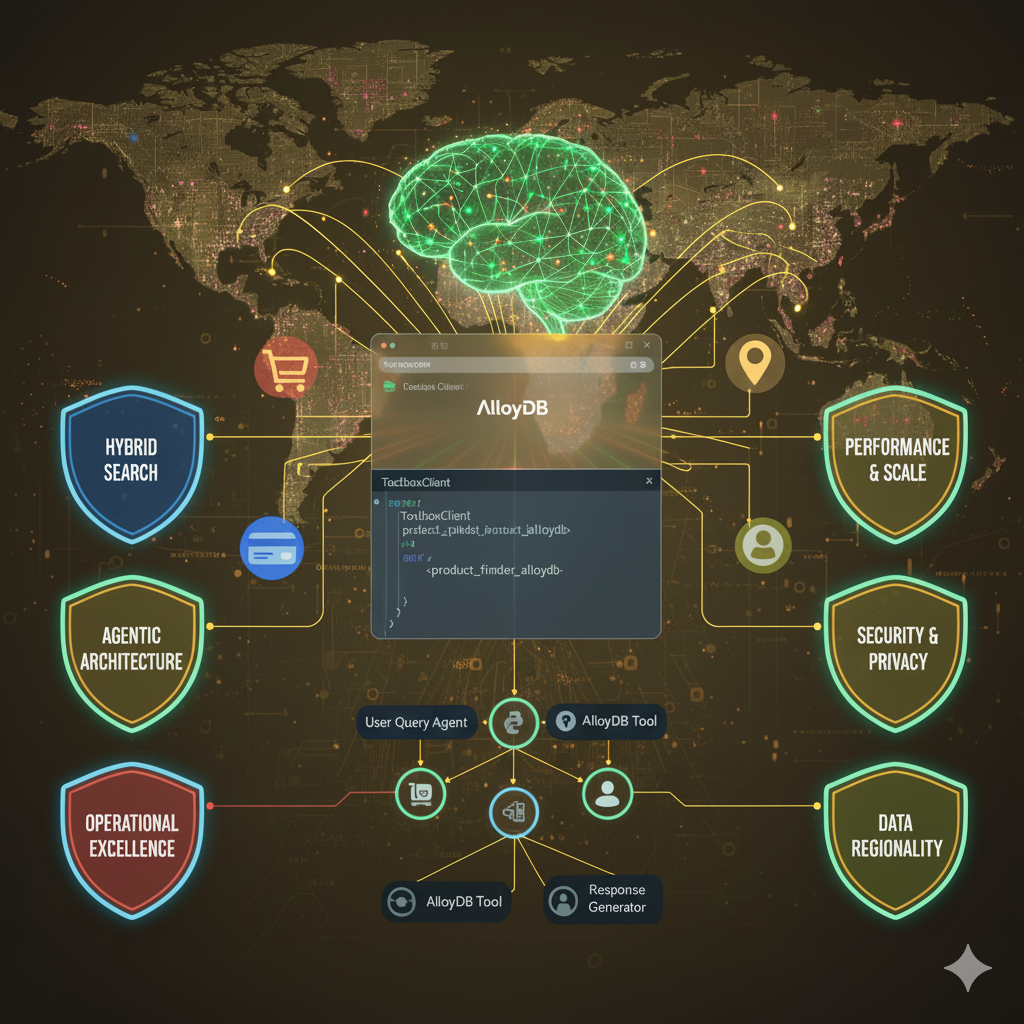

Today, we narrow our focus to a critical, high-stakes domain: E-commerce Search and Agentic Applications using AlloyDB AI, an area I have been spending a lot of time and focus the last few months. This is where structured data (price, inventory) must blend seamlessly with semantic understanding (product description, user intent) to drive conversions. We’ll discuss this in 2 sections:

Section 1: Best practices to follow while making your data smart and contextual. This first set of best practices shows how to fuse Generative & Agentic AI directly into your database using AlloyDB AI.

Section 2: Best practices to follow while making your data fast, reliable, and compliant. We must turn our attention to the essential conventional best practices — the performance, resilience, security and residency measures that ensure your underlying relational database can handle the high-traffic, transactional demands of a modern e-commerce platform, which is covered in the second set of best practices in this blog.

1*Best practices to follow while making your data smart and contextual

🛍️ Core Best Practice #1 The Hybrid Search Imperative

In e-commerce, semantic search is critical, but on its own, it’s not enough. Shoppers don’t just ask “show me comfortable shoes”, they ask for “comfortable running shoes under $100.” This requires combining the nuance of vector search with the precision of structured SQL filtering.

Key best practices & example

- Embrace Filtered Vector Search: Always combine the vector similarity (semantic) search with a structured SQL filter (e.g., price, color, size, in-stock status). AlloyDB AI is designed for this with features like its ScaNN index and Adaptive Filtering, which optimizes the query plan to ensure this hybrid approach is lightning fast.

* Check out an end to end hybrid search application I have written about with step by step instructions.

- Co-locate Data and Vectors: Store your vector embeddings within the same database as your core transactional data (product catalog, inventory). This eliminates the latency and complexity of managing a separate vector database.

Example:

Consider an e-commerce catalog agent handling the query: “Find me a blue rain jacket for hiking that costs less than $150.”

The powerful hybrid query in AlloyDB AI would look like this:

SELECT

product_name,

price,

1 - (embedding <=> embedding('gemini-embedding-001', 'Find me a blue rain jacket for hiking.')::vector) AS similarity

FROM

ecommerce_catalog

WHERE

price < 150.00

AND color = 'blue'

ORDER BY

similarity DESC

LIMIT 5;

Why this is a best practice: This query uses the embedding() function and the vector search operator (<=>) for semantic relevance (the “rain jacket for hiking” part) and the standard WHERE clause for precise filtering (the “blue” and “less than $150” parts), all executed efficiently by the integrated ScaNN index.

🤖 #2: Architecture for Agentic Search

Agentic applications need to reason, plan, and take action. We can build this by separating the business logic (the Agent) from the data access logic (the Database Tool).

Key Best Practices and ADK Example

- RAG as a Multi-Tool Strategy: The agent should use a specialized tool for database interaction. This allows the LLM to focus on reasoning, while the tool handles secure, efficient data retrieval.

- Decouple with MCP Toolbox: The MCP Toolbox for Databases simplifies agent development by letting you define database tools in a simple

tools.yamlfile. This abstracts away the connection details and complex SQL from your Python application logic.

Example Tool Definition (tools.yaml):

sources:

alloydb:

kind: "alloydb-postgres"

project: "YOUR_PROJECT"

region: "us-central1"

cluster: "vector-cluster"

instance: "vector-instance"

database: "postgres"

user: "postgres"

password: "alloydb"tools:

product_finder_alloydb:

kind: postgres-sql

source: my-alloydb-instance

description: Use this tool to find relevant products in the e-commerce catalog based on a natural language query and any available filters like price or color.

parameters:

- name: query_vector

type: string

description: A single natural language query (e.g., 'best hiking gear').

- name: max_price

type: float

description: The maximum price filter.

statement: |

-- Use the hybrid query from Best Practice 1 here

SELECT product_name, price, 1 - (embedding <=> embedding('gemini-embedding-001', $1)::vector) AS similarity

FROM ecommerce_catalog

WHERE price < $2

ORDER BY similarity DESC

LIMIT 5;

* Check out an end to end multi-database e-commerce application powered by MCP Toolbox for Databases that demonstrates how you can integrate a wide variety of structures of data into your AI application with little to no change in the application itself for any update on the data layer when needed.

⚙️ #3: Operational Excellence & Developer Experience

Operational excellence extends beyond runtime monitoring to include the quality and maintainability of the development process. By using the right integrations and frameworks, you simplify the path from idea to production.

Key Best Practices

- Frameworks for AI Integration (The Glue):

Use orchestration frameworks like LangChain or LangGraph to manage complex, multi-step agent workflows (e.g., conversational memory, tool selection, response formatting). These frameworks provide robust, reusable components that abstract the complexity of interacting with LLMs, vector stores, and structured databases.

A simple Cloud Run Functions code snippet to demonstrate the use of LangChain in the context of using MCP Toolbox for databases:

from toolbox_langchain import ToolboxClient

import functions_framework

import json@functions_framework.http

def hello_http(request):

request_json = request.get_json(silent=True)

if request_json and 'name' in request_json:

name = request_json['name']

elif request_args and 'name' in request_args:

name = request_args['name']

else:

name = 'World'

if name == 'FILTERS':

filters = toolboxcall();

else:

filters = toolboxcallformatches(name);

return filters

def toolboxcall():

toolbox = ToolboxClient("<>")

tool = toolbox.load_tool("get-retail-facet-filters")

result = tool.invoke({})

return result

- Separation of Concerns with MCP Toolbox:

As demonstrated above, the MCP Toolbox for Databases allows you to completely separate the data access layer (the SQL queries and connection logic in tools.yaml) from the AI application logic (the Python agent code). This makes maintenance easier, improves security, and allows database experts to manage the tools.yaml without touching the agent code. This pattern applies not just to AlloyDB, but can integrate external databases via different MCP servers as well.

Use monitoring tools to track the full request lifecycle:

a. Agent Success Rate: Did the agent correctly choose the product_finder_alloydb tool?

b. Database Latency: What was the execution time for the hybrid vector search query in AlloyDB?

c. LLM Grounding Quality: Did the final response accurately reflect the products returned by the database?

Implement a pipeline (e.g., using Cloud Dataflow or a scheduled Cloud Run job) or a real time trigger to frequently update product embeddings whenever a product description or key feature changes, ensuring your search is always current.

* You can also check an Agentic Toystore App using AlloyDB AI through MCP Toolbox for databases from an agentic application using ADK framework.

By adopting these practices, you not only build a high-performing search experience but also create an application architecture that is maintainable, scalable, and easy for your development teams to work with.

Source Credit: https://medium.com/google-cloud/a-practical-deep-dive-into-best-practices-for-powering-e-commerce-data-search-and-agentic-39460b0c9504?source=rss—-e52cf94d98af—4