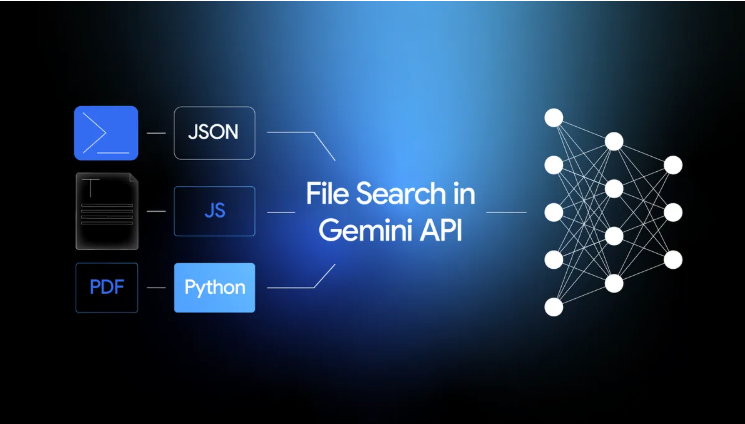

The Gemini team at Google recently announced the File Search Tool, a fully managed RAG system built directly into the Gemini API as a simple, integrated, and scalable way to ground Gemini. I gave it a try, and I’m impressed by how easy it is to ground Gemini with your own data.

In this blog post, I’ll introduce the File Search Tool and show you a concrete example.

Before File Search Tool

Retrieval Augmented Generation (RAG) is a technique used to ground Large Language Model (LLM) responses with relevant data. Previously, I showed how to do RAG with different frameworks and vector stores.

All of these samples used a PDF file as the grounding data and used frameworks (LangChain, LlamaIndex) and vector stores (Annoy, Firestore, SimpleVectorStore) to manage the RAG pipeline. While the samples were straightforward, there were quite a few steps that you had to go through.

For the ingestion phase, you had to:

- Read the PDF document and split into smaller chunks.

- Initialize a vector store with an embedding model.

- Embed and ingest the PDF chunks into the vector store.

For the query phase, you had to:

- Create a retriever with the vector store.

- Embed the user prompt and run a similarity search against the vector store.

- Feed similar documents as context to the model.

Frameworks like LangChain and LlamaIndex helped, but it was still a lot of work.
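To make those steps concrete, here is a minimal sketch of both phases in plain Python. It is not taken from the earlier samples: `embed` is a toy bag-of-words counter standing in for a real embedding model, the "vector store" is just a list, and all function names are made up for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, words_per_chunk: int = 12) -> list[str]:
    """Split text into fixed-size word chunks (no overlap, for brevity)."""
    words = text.split()
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector, NOT a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, store: list[tuple[str, Counter]], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Ingestion phase: chunk the document, embed each chunk, keep them in a list.
document = (
    "The Cymbal Starlight has a cargo capacity of 13.5 cubic feet in the trunk. "
    "Rotate the tires regularly and check the oil level before long trips."
)
store = [(chunk, embed(chunk)) for chunk in chunk_text(document)]

# Query phase: embed the prompt, find similar chunks, pass them as context.
question = "What is the cargo capacity?"
context = retrieve(question, store, k=1)
prompt = f"Context: {context[0]}\n\nQuestion: {question}"
print(prompt)
```

Even in this stripped-down form, you own the chunking, the embedding, the storage, and the ranking, and each one is a place where things can go wrong.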

After File Search Tool

Gemini’s File Search Tool greatly simplifies the RAG ingestion and query phases.

For the ingestion phase, you just create a file search store and upload documents to it. Gemini takes care of chunking, embedding, and ingestion.

For the query phase, you just generate content with Gemini and the File Search Tool, and Gemini takes care of retrieving the relevant documents and using them as grounding data.

Let’s look at a concrete example. You can see the full sample in main.py in my repo.

Without file search tool

Before we try the File Search Tool, let’s run the sample without it.

Note: The File Search Tool is currently supported only by the Gemini API (not the Vertex AI API).

Make sure you get and set your Gemini API key and create a client:

export GEMINI_API_KEY=your-gemini-api-key

client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])
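If the variable is not set, `os.environ["GEMINI_API_KEY"]` fails with a bare `KeyError`. As a small optional sketch, you could check for the key first and fail with a clearer message. The `require_api_key` helper is hypothetical and not part of the sample or the SDK.

```python
import os


def require_api_key(env=os.environ, name: str = "GEMINI_API_KEY") -> str:
    """Return the API key from the environment or raise a descriptive error."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set; export it before running the sample."
        )
    return key


# Demonstration with explicit dictionaries instead of the real environment.
print(require_api_key({"GEMINI_API_KEY": "your-gemini-api-key"}))
try:
    require_api_key({})
except RuntimeError as err:
    print(err)
```

The returned value can then be passed as `api_key` when you create the client.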

Ask a question about a fictitious vehicle called Cymbal Starlight:

python main.py generate_content "What's the cargo capacity of Cymbal Starlight?"

You get a response where the LLM does not really know what Cymbal is:

    Generating content with file search store: None
    Response: I couldn't find any information about a vessel named
    "Cymbal Starlight" with a publicly listed cargo capacity.

    It's possible:
    * It's a very obscure or private vessel.
    * It's a fictional vessel.
    * The name might be slightly different.

    Could you provide more context or verify the name?

Create a file search store

First, let’s create a file search store:

    file_search_store = client.file_search_stores.create(
        config={'display_name': display_name}
    )
    print("Created a file search store:")
    print(f" {file_search_store.name} - {file_search_store.display_name}")

You can list all the file search stores you have to verify:

    print("List of file search stores:")
    for file_search_store in client.file_search_stores.list():
        print(f" {file_search_store.name} - {file_search_store.display_name}")

The file search store is ready for documents.

Upload the PDF

Upload the PDF user manual of Cymbal Starlight (cymbal-starlight-2024.pdf) to the file search store:

    print(f"Uploading file: {file_path} with display name: {display_name} to file search store:")
    print(f" {file_search_store_name}")
    upload_op = client.file_search_stores.upload_to_file_search_store(
        file_search_store_name=file_search_store_name,
        file=file_path,
        config={
            # Optional display name for the uploaded file.
            'display_name': display_name,
            # Optional config for telling the service how to chunk the data.
            # 'chunking_config': {
            #     'white_space_config': {
            #         'max_tokens_per_chunk': 200,
            #         'max_overlap_tokens': 20
            #     }
            # },
            # Optional custom metadata to associate with the file.
            # 'custom_metadata': [
            #     {"key": "author", "string_value": "Robert Graves"},
            #     {"key": "year", "numeric_value": 1934}
            # ]
        }
    )

As you can see, there are some optional configuration settings, such as chunking config and custom metadata, that you can provide during upload.
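To get a feel for what `max_tokens_per_chunk` and `max_overlap_tokens` control, here is a rough local sketch. It assumes whitespace-separated words count as tokens, which may not match how the service tokenizes, and `chunk_with_overlap` is a hypothetical helper, not an SDK function.

```python
def chunk_with_overlap(
    text: str, max_tokens_per_chunk: int, max_overlap_tokens: int
) -> list[list[str]]:
    """Split text into word chunks where neighbours share some tokens."""
    if max_tokens_per_chunk <= 0:
        raise ValueError("chunk size must be positive")
    if not 0 <= max_overlap_tokens < max_tokens_per_chunk:
        raise ValueError("overlap must be smaller than the chunk size")

    tokens = text.split()
    # Each new chunk starts this many tokens after the previous one.
    step = max_tokens_per_chunk - max_overlap_tokens
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens_per_chunk])
        # Stop once a chunk has reached the end of the text.
        if start + max_tokens_per_chunk >= len(tokens):
            break
    return chunks


sample = " ".join(f"t{i}" for i in range(10))
for chunk in chunk_with_overlap(sample, max_tokens_per_chunk=4, max_overlap_tokens=1):
    print(chunk)
```

With a chunk size of 4 and an overlap of 1, each chunk repeats the last token of the previous one, so a sentence that straddles a boundary is less likely to be cut off from its context.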

You also need to wait for the upload to complete as follows:

    while not upload_op.done:
        print("Waiting for upload to complete...")
        time.sleep(5)
        upload_op = client.operations.get(upload_op)
    print("Upload completed.")
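The loop above waits indefinitely. If you would rather give up after a while, one option is to wrap the polling in a helper with a timeout. This is only a sketch: `wait_until_done` is a hypothetical name, and the fake operation below stands in for the real one so the example runs without the API. In the real sample, `refresh` would be `client.operations.get`.

```python
import time
from types import SimpleNamespace


def wait_until_done(
    operation,
    refresh,
    timeout_s: float = 300.0,
    interval_s: float = 5.0,
    sleep=time.sleep,
    clock=time.monotonic,
):
    """Poll `operation` via `refresh` until it is done or the timeout expires."""
    deadline = clock() + timeout_s
    while not operation.done:
        if clock() >= deadline:
            raise TimeoutError(f"operation not done after {timeout_s} seconds")
        sleep(interval_s)
        operation = refresh(operation)
    return operation


# Fake operation that reports completion on the third refresh.
calls = {"n": 0}


def fake_refresh(op):
    calls["n"] += 1
    return SimpleNamespace(done=calls["n"] >= 3)


result = wait_until_done(SimpleNamespace(done=False), fake_refresh, interval_s=0.0)
print("done:", result.done, "after", calls["n"], "refreshes")
```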

Once the PDF is uploaded, you can list the documents in the file search store:

    print("List of documents in file search store: ")
    for document in client.file_search_stores.documents.list(parent=file_search_store_name):
        print(f" {document.name} - {document.display_name}")

With file search tool

Now, we can generate content with the file search store:

    # These types come from the google-genai SDK.
    from google.genai.types import FileSearch, GenerateContentConfig, Tool

    generate_config = GenerateContentConfig(
        tools=[Tool(
            file_search=FileSearch(
                file_search_store_names=[file_search_store_name]
            )
        )]
    )
    print(f"Generating content with file search store: {file_search_store_name}")
    response = client.models.generate_content(
        model='gemini-2.5-flash',
        contents=prompt,
        config=generate_config
    )
    print(f"Response: {response.text}")

You can also access the documents used in grounding:

    grounding = response.candidates[0].grounding_metadata
    if not grounding:
        print("Grounding sources: None")
    else:
        sources = {c.retrieved_context.title for c in grounding.grounding_chunks}
        print("Grounding sources: ", *sources)
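The set comprehension matters because several retrieved chunks usually come from the same document. Here is a small sketch of the same idea that you can run without the API. It uses `SimpleNamespace` objects as stand-ins for the response (only the attributes used above are modeled), and `grounding_titles` is a hypothetical helper that also skips chunks with no retrieved context.

```python
from types import SimpleNamespace


def grounding_titles(grounding) -> list[str]:
    """Return sorted, de-duplicated titles of the retrieved chunks."""
    if not grounding or not grounding.grounding_chunks:
        return []
    contexts = (
        getattr(chunk, "retrieved_context", None)
        for chunk in grounding.grounding_chunks
    )
    # Skip chunks without a retrieved context or without a title.
    titles = {ctx.title for ctx in contexts if ctx is not None and ctx.title}
    return sorted(titles)


def fake_chunk(title):
    """Build a stand-in grounding chunk with only the fields used here."""
    return SimpleNamespace(retrieved_context=SimpleNamespace(title=title))


fake_grounding = SimpleNamespace(
    grounding_chunks=[
        fake_chunk("cymbal-starlight-2024.pdf"),
        fake_chunk("cymbal-starlight-2024.pdf"),
        SimpleNamespace(retrieved_context=None),
    ]
)
print("Grounding sources:", *grounding_titles(fake_grounding))
```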

Run it:

python main.py generate_content "What's the cargo capacity of Cymbal Starlight?" fileSearchStores/myfilesearchstore-5a9x71ifjge9

You can see that Gemini now knows what Cymbal Starlight is!

    Generating content with file search store: fileSearchStores/myfilesearchstore-5a9x71ifjge9
    Response: The Cymbal Starlight 2024 has a cargo capacity of 13.5 cubic feet,
    which is located in the trunk of the vehicle. It is important to distribute
    the weight evenly and not overload the trunk, as this could impact the
    vehicle's handling and stability. The vehicle can also accommodate up
    to two suitcases in the trunk, and it is recommended to use soft-sided
    luggage to maximize space and cargo straps to secure it while driving.
    Grounding sources: cymbal-starlight-2024.pdf

This was way easier than setting up your own RAG pipeline!

Cleanup

Once you’re done, you can delete the document in the file search store:

    client.file_search_stores.documents.delete(name=document_name, config={"force": True})
    print("Deleted document:")
    print(f" {document_name}")

Or, you can delete the entire file search store:

    client.file_search_stores.delete(name=file_search_store_name, config={"force": True})
    print("Deleted file search store:")
    print(f" {file_search_store_name}")
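If you create stores for short experiments, it is easy to forget the cleanup step. One possible pattern is a context manager that deletes the store when you leave the block, even on errors. This is a sketch only: `temporary_file_search_store` is a hypothetical helper, and the fake client below mimics just the `create` and `delete` calls shown in this post so the example runs without the API.

```python
from contextlib import contextmanager
from types import SimpleNamespace


@contextmanager
def temporary_file_search_store(client, display_name: str):
    """Create a file search store and always delete it on exit."""
    store = client.file_search_stores.create(config={"display_name": display_name})
    try:
        yield store
    finally:
        client.file_search_stores.delete(name=store.name, config={"force": True})


class FakeStores:
    """Stand-in for client.file_search_stores that records deletions."""

    def __init__(self):
        self.deleted = []

    def create(self, config):
        return SimpleNamespace(
            name="fileSearchStores/fake-store",
            display_name=config["display_name"],
        )

    def delete(self, name, config):
        self.deleted.append((name, config))


fake_client = SimpleNamespace(file_search_stores=FakeStores())
with temporary_file_search_store(fake_client, "demo") as store:
    print("Using", store.name)
print("Deleted:", fake_client.file_search_stores.deleted)
```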

Conclusion

In this blog post, we explored the File Search Tool in Gemini. It’s the easiest way I’ve found to ground Gemini with your own data, and it abstracts away a lot of ingestion and query logic behind an easy-to-use API.


Source Credit: https://medium.com/google-cloud/rag-just-got-much-easier-with-file-search-tool-in-gemini-api-6494f5b1c6bc?source=rss—-e52cf94d98af—4