In my previous post, I showed how I built a development agent using Gemini and the Agent Development Kit (ADK), a powerful tool that makes it easy to create autonomous and collaborative agents. This agent, the Development Tutor, is able to interact naturally with developers, answering technical questions in a light, humorous and didactic tone.

But building the agent was just the beginning of the journey.

In this new episode, I share the next step of the project: evaluating how the LLM performs inside the agent and deploying the whole thing to the cloud. I’ll show you how I evaluate the responses and how I set everything up to run reliably on Cloud Run, on Google Cloud.

If you like the idea of creating custom agents with LLMs and want to see how to take them to production, this post is for you.

First of all, I organized the project by creating two new folders, deployment and eval:

    ├── deployment          # deploy scripts
    ├── development_tutor
    ├── eval                # evaluation directory
    ├── img                 # images
    ├── README.md
    └── requirements.txt

The eval folder has both the evaluation script and the test data.

I created a small JSON file with expected interactions:

    [
      {
        "query": "Olá!",
        "expected_tool_use": [],
        "reference": "Olá, eu me chamo R2-D2... Pi, pi, pow! Eu não tenho um nome kkkj, mas pode me chamar de Dev. Como posso te ajudar?"
      },
      {
        "query": "Como anda a comunidade de machine learning em Go?",
        "expected_tool_use": [],
        "reference": "E aí! Suave? Então, a parada é a seguinte: a comunidade de Machine Learning em Go ainda tá crescendo..."
      }
    ]

This file serves as a comparison base to verify that the agent responds in a manner close to what we expect.
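Before running an evaluation, it can help to check that every entry in the file has the three fields used above. The following is a minimal sketch, not part of ADK: the function name `validate_eval_cases` and the inline sample are hypothetical, and the only assumption about the format is what the JSON above shows (`query`, `expected_tool_use`, `reference`).

```python
import json

# Keys that every test case in the dataset shown above carries.
REQUIRED_KEYS = {"query", "expected_tool_use", "reference"}


def validate_eval_cases(raw_json: str) -> list:
    """Parse the dataset and fail early if a case is malformed.

    Hypothetical helper for illustration; ADK does its own parsing.
    """
    cases = json.loads(raw_json)
    if not isinstance(cases, list):
        raise ValueError("the eval dataset must be a JSON list")
    for index, case in enumerate(cases):
        if not isinstance(case, dict):
            raise ValueError(f"case {index} must be a JSON object")
        missing = REQUIRED_KEYS - case.keys()
        if missing:
            raise ValueError(f"case {index} is missing keys: {sorted(missing)}")
        if not isinstance(case["expected_tool_use"], list):
            raise ValueError(f"case {index}: expected_tool_use must be a list")
    return cases


# Shortened sample in the same shape as the file above.
sample = '[{"query": "Olá!", "expected_tool_use": [], "reference": "Olá..."}]'
cases = validate_eval_cases(sample)
print(f"{len(cases)} valid case(s)")
```

In a real project the string would come from reading the dataset file instead of an inline sample.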

Then I created the evaluation script:

    import pathlib

    import pytest
    from dotenv import load_dotenv
    from google.adk.evaluation.agent_evaluator import AgentEvaluator


    @pytest.fixture(scope="session", autouse=True)
    def load_env():
        # Load API keys and other settings from .env once per test session.
        load_dotenv()


    def test_agent():
        # Run every query in the dataset against the agent, twice each.
        AgentEvaluator.evaluate(
            agent_module="development_tutor",
            eval_dataset_file_path_or_dir=str(
                pathlib.Path(__file__).parent / "eval_data/chat.test.json",
            ),
            num_runs=2,
        )

This script runs the agent twice for each question in the dataset and evaluates whether the answers are as expected. I used pytest to make it easier to run like a traditional test.
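To make the idea of repeated runs concrete, here is a minimal sketch of the general pattern: call the agent several times per query, score each answer against the reference, and average. This is not how ADK implements `AgentEvaluator` internally; `evaluate_with_runs`, `echo_agent`, `exact_match` and the 0.8 threshold are all hypothetical names and values chosen for illustration.

```python
from statistics import mean
from typing import Callable, Dict, List


def evaluate_with_runs(
    agent: Callable[[str], str],
    cases: List[dict],
    score: Callable[[str, str], float],
    num_runs: int = 2,
    threshold: float = 0.8,  # assumed cutoff, not taken from ADK
) -> Dict[str, bool]:
    """Return, per query, whether the mean score over num_runs passes."""
    results = {}
    for case in cases:
        # LLM output varies between calls, so each query is run several times.
        scores = [
            score(agent(case["query"]), case["reference"])
            for _ in range(num_runs)
        ]
        results[case["query"]] = mean(scores) >= threshold
    return results


def echo_agent(query: str) -> str:
    """Stand-in for the real agent: it simply repeats the query."""
    return query


def exact_match(answer: str, reference: str) -> float:
    """Simplest possible scorer: 1.0 on an exact match, otherwise 0.0."""
    return 1.0 if answer == reference else 0.0


demo_cases = [
    {"query": "ping", "reference": "ping"},
    {"query": "hello", "reference": "goodbye"},
]
print(evaluate_with_runs(echo_agent, demo_cases, exact_match))
```

Averaging over more than one run smooths out some of the randomness in the model's answers, which is the reason to set `num_runs` above 1.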

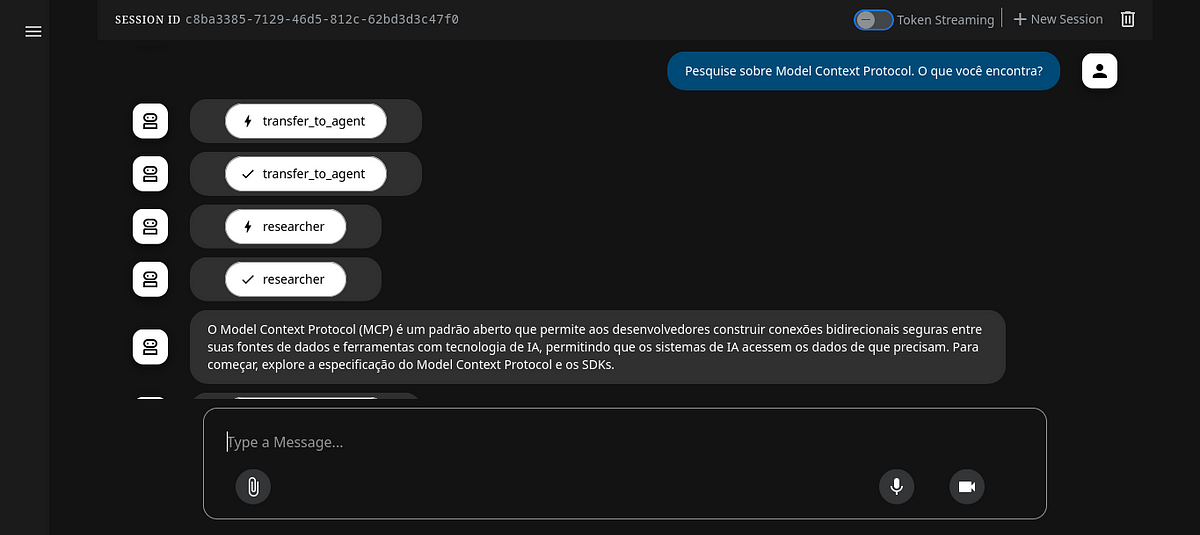

Evaluation in Dev UI

Start the web Dev UI:

    adk web

- Start a new conversation with the agent.

- Go to the Eval tab.

- Click on the “+” symbol to create a new evaluation.

- Give it a name and confirm.

- Select the current conversation and click on “Add current session to eval”.

- Finally, click on “Run Evaluation”.

The result will be a simple Passed or Failed, with insights into the similarity between the expected response and the one generated by the agent.
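One way to picture a similarity check that ends in Passed or Failed is a word-overlap score compared against a threshold. The sketch below uses a unigram F1 in the spirit of ROUGE-1. Treat it as an illustration only: whether it matches what the Dev UI actually computes is an assumption, and `unigram_f1`, `verdict` and the 0.8 threshold are hypothetical.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """F1 over the words shared by the candidate and the reference."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Counter intersection keeps each shared word at its minimum count.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def verdict(candidate: str, reference: str, threshold: float = 0.8) -> str:
    """Map the score to the Passed / Failed labels shown in the UI."""
    return "Passed" if unigram_f1(candidate, reference) >= threshold else "Failed"


print(verdict("hello world", "hello world"))
print(verdict("goodbye", "hello world"))
```

A score like this rewards shared wording rather than shared meaning, so an answer in a humorous, free-form tone can fail even when it is correct. That is worth keeping in mind when writing the reference responses.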


Source Credit: https://medium.com/google-cloud/evaluate-and-deploy-an-llm-agent-with-adk-on-google-cloud-c61ae95ab129?source=rss—-e52cf94d98af—4