I recently faced a common challenge as a Database Architect helping a customer migrate their monolithic application running on sharded MySQL clusters to a microservices architecture leveraging Spanner, where the critical issue was a Very Large Database (VLDB) holding tens of terabytes of images (BLOBs) directly within tables.

Storing large binary objects inside a transactional database like MySQL causes bloat and slows performance. Attempting to migrate that data as-is into Spanner is not only architecturally difficult because Spanner limits cells to 10 MiB, but also cost prohibitive.

To solve this, we had to make a fundamental change in how we think about data storage: adopting the Spanner + Google Cloud Storage (GCS) Hybrid Architecture.

The Proposed Architecture

The strategy is simple: separate your content from its context.

- Metadata Layer: To reduce storage and operational costs, we use Spanner to store only image metadata rather than the images themselves. Each record includes user ID, image ID, timestamps, captions, and a GCS Uniform Resource Identifier (URI) pointing to the actual image. This approach minimizes database bloat while preserving a strongly consistent view of all relational data. Spanner provides transactional integrity and global consistency, allowing us to scale metadata writes without compromising correctness.

- BLOB Storage Layer: To store raw image data cost-effectively at scale, we evaluated several cloud-native storage options. While Bigtable offers low-latency writes and high throughput, its pricing model is optimized for workloads with small, high-frequency data writes, not large binary objects. Its eventual consistency across regions also introduces complexity when paired with Spanner’s strong consistency guarantees. GCS Standard class was selected for its low cost per gigabyte, automatic regional replication, and ability to store large objects up to 5 TiB. Combined with strong global read-after-write consistency, GCS provides the right balance of durability, scalability, and cost for frequently accessed image content.

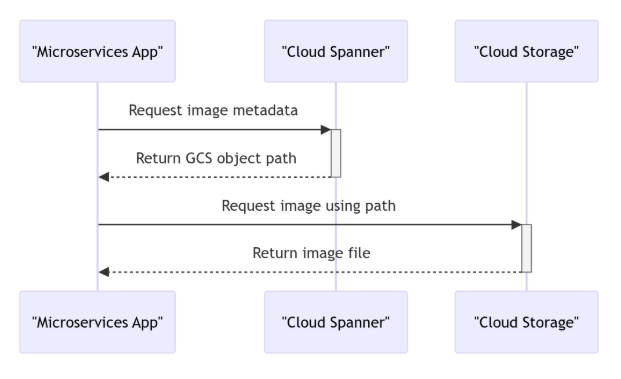

Image retrieval becomes a fast, two-step lookup: (1) Query Spanner for the GCS URI, and (2) Retrieve the image from GCS using that URI.

Architectural Advantages

Key Challenges & Mitigations

1. Cross-Service Atomicity

A core challenge is maintaining atomicity when a single logical transaction spans two independent systems i.e. GCS for image storage and Spanner for metadata. If the image is successfully written to GCS but the subsequent metadata write to Spanner fails, the system is left with an orphaned object.

The Solution: Orchestrated Write Pattern (Saga Principle) – Implement an application-level orchestration workflow, following a Saga-like pattern. The image is uploaded to GCS first. Only upon successful completion does the system proceed to commit the associated metadata, including the GCS URI, in a single Spanner transaction. If the Spanner commit fails, a compensating action is triggered to delete the orphaned GCS object asynchronously. This pattern preserves eventual consistency while preventing unreferenced or orphanned image data.

2. The Hotspot Risk

Legacy MySQL often uses simple, monotonically increasing primary keys. Applying this pattern in Spanner is a critical mistake. It directs all high-volume writes to a single logical server partition, creating severe write hotspots that cripple throughput.

The Solution: Schema Fix – Design primary keys that distribute load uniformly, using techniques like UUIDs, key salting, or reversed primary key components. Refer to Spanner documentation for more guidance on schema design best practices.

3. Consistency Conflict: Why Bigtable Won’t Work

While alternatives like Bigtable were considered for massive scale, they are generally unsuitable for this hybrid model where the application and databases span multiple regions. Bigtable’s schema design is optimized for high-throughput, small data, recommending against storing more than 10 MiB per cell. More critically, Bigtable’s multi-region replication is eventually consistent. This eventually-consistent BLOB layer directly conflicts with Spanner’s globally externally consistent metadata. This conflict risks scenarios where a user reads a GCS URI from a Spanner replica (which guarantees the data exists) but the corresponding BLOB has not yet replicated to the local Bigtable cluster due to lag. GCS provides the necessary strong consistency for object reads, ensuring the BLOB layer matches the Spanner metadata layer’s integrity.

Things to Consider: Latency and Multi-Region

1. Performance Deep Dive: Latency Optimization

Achieving high performance involves tuning both the read and write paths, which are now split across Spanner and GCS. The total user-perceived latency is the sum of the Spanner metadata lookup and the subsequent GCS BLOB fetch.

Geographical co-location is mandatory. The application compute, Spanner leader, and GCS bucket must be in the same region to minimize network traversal and client round-trip latency.

For the write path, avoid I/O hotspots by using non-monotonic or hash-prefixed primary keys to distribute writes evenly. In high-concurrency scenarios, tune max_commit_delay to improve throughput and mitigate commit-wait contention, trading off minimal delay for better scalability. To scale global reads and reduce latency, use Spanner’s stale reads to serve data from follower replicas outside the leader region.

Finally, utilize Cloud CDN in front of the GCS bucket for global caching, this can significantly lower end-user latency and manage egress costs. And ofcource, continuous monitoring of latency metrics (P95/P99) is necessary to ensure performance consistency.

2. Multi-Region Replication

If your Spanner instance is configured as multi-region for global availability, it’s recommended (but not required) to use a multi-region or dual-region GCS bucket in the same geography to align latency and availability characteristics. GCS offers strong global consistency, ensuring that once an image is successfully uploaded and its metadata is committed in Spanner, it is immediately accessible from any location. While GCS replicates data across the configured geography for durability, it does not guarantee that reads are always served from the nearest replica without additional services like Cloud CDN.

Conclusion

Migrating a VLDB involves re-architecting how data is stored, accessed, and scaled. By isolating image BLOBs from transactional metadata, we transition from a tightly coupled monolith to a distributed, service-oriented architecture. For my customer, this approach reduced storage costs by over 93 percent and unlocks global scalability without sacrificing transactional guarantees. The critical path to consistency lies in coordinating Spanner and GCS at the application level to preserve correctness across systems. This is not just a migration but a controlled redesign for high-throughput, high-integrity workloads.

Final Note:

This post walks through the key architectural decisions behind moving from MySQL to Spanner and GCS. To keep things straightforward, I’ve left out some of the more detailed stuff such as migration tooling, governance, and all the edge-case failure handling. Those topics definitely deserve their own dedicated deep dive in a separate design document. Thank you for reading!

Source Credit: https://medium.com/google-cloud/decouple-and-save-the-hybrid-spanner-gcs-strategy-to-slash-vldb-migration-costs-2112a116b975?source=rss—-e52cf94d98af—4